This entry-level Hands-On Lab exercise is designed to help you master the basics of Coalesce. In this lab, you'll explore the Coalesce interface, learn how to easily transform and model your data with our core capabilities, understand how to deploy and refresh version-controlled data pipelines, and build a ML Forecast node that forecasts sales values for a fiction food truck company.

What you'll need

- A Snowflake trial account

- A Coalesce trial account created via Snowflake Partner Connect

- Basic knowledge of SQL, database concepts, and objects

- The Google Chrome browser

What you'll build

- A Directed Acyclic Graph (DAG) representing a basic star schema in Snowflake

What you'll learn

- How to navigate the Coalesce interface

- How to add data sources to your graph

- How to prepare your data for transformations with Stage nodes

- How to join tables

- How to apply transformations to individual and multiple columns at once

- How to build out Dimension and Fact nodes

- How to make changes to your data and propagate changes across pipelines

- How to configure and build an ML Forecast node

By completing the steps we've outlined in this guide, you'll have mastered the basics of Coalesce and can venture into our more advanced features.

Coalesce is the first cloud-native, visual data transformation platform built for Snowflake. Coalesce enables data teams to develop and manage data pipelines in a sustainable way at enterprise scale and collaboratively transform data without the traditional headaches of manual, code-only approaches.

What can I do with Coalesce?

With Coalesce, you can:

- Develop data pipelines and transform data as efficiently as possible by coding as you like and automating the rest, with the help of an easy-to-learn visual interface

- Work more productively with customizable templates for frequently-used transformations, auto-generated and standardized SQL, and full support for Snowflake functionality

- Analyze the impact of changes to pipelines with built-in data lineage down to the column level

- Build the foundation for predictable DataOps through automated CI/CD workflows and full git integration

- Ensure consistent data standards and governance across pipelines, with data never leaving your Snowflake instance

How is Coalesce different?

Coalesce's unique architecture is built on the concept of column-aware metadata, meaning that the platform collects, manages, and uses column- and table-level information to help users design and deploy data warehouses more effectively. This architectural difference gives data teams the best that legacy ETL and code-first solutions have to offer in terms of flexibility, scalability and efficiency.

Data teams can define data warehouses with column-level understanding, standardize transformations with data patterns (templates) and model data at the column level.

Coalesce also uses column metadata to track past, current, and desired deployment states of data warehouses over time. This provides unparalleled visibility and control of change management workflows, allowing data teams to build and review plans before deploying changes to data warehouses.

Core Concepts in Coalesce

Snowflake

Coalesce currently only supports Snowflake as its target database, As you will be using a trial Coalesce account created via Partner Connect, your basic database settings will be configured automatically and you can instantly build code.

Organization

A Coalesce organization is a single instance of the UI, set up specifically for a single prospect or customer. It is set up by a Coalesce administrator and is accessed via a username and password. By default, an organization will contain a single Project and a single user with administrative rights to create further users.

Projects

Projects provide a way of completely segregating elements of a build, including the source and target locations of data, the individual pipelines and ultimately the git repository where the code is committed. Therefore teams of users can work completely independently from other teams who are working in a different Coalesce Project.

Each Project requires access to a git repository and Snowflake account to be fully functional. A Project will default to containing a single Workspace, but will ultimately contain several when code is branched.

Workspaces vs Environments

A Coalesce Workspace is an area where data pipelines are developed that point to a single git branch and a development set of Snowflake schemas. One or more users can access a single Workspace. Typically there are several Workspaces within a Project, each with a specific purpose (such as building different features). Workspaces can be duplicated (branched) or merged together.

A Coalesce Environment is a target area where code and job definitions are deployed to. Examples of an environment would include QA, PreProd and Production.

It isn't possible to directly develop code in an Environment, only deploy to there from a particular Workspace (branch). Job definitions in environments can only be run via the CLI or API (not the UI). Environments are shared across an entire project, therefore the definitions are accessible from all workspaces. Environments should always point to different target schemas (and ideally different databases), than any Workspaces.

As the lead Data & Analytics manager for TastyBytes Food Trucks, you're responsible for building and managing data pipelines that deliver insights to the rest of the company. There customer-related questions that the business needs to answer that will help with inventory planning and marketing. Included in this, is building a machine learning forecast that will allow management to determine sales volume for each item on the menu.

In order to help your extended team answer these questions, you'll need to build a customer data pipeline first.

To complete this lab, please create free trial accounts with Snowflake and Coalesce by following the steps below. You have the option of setting up Git-based version control for your lab, but this is not required to perform the following exercises. Please note that none of your work will be committed to a repository unless you set Git up before developing.

We recommend using Google Chrome as your browser for the best experience.

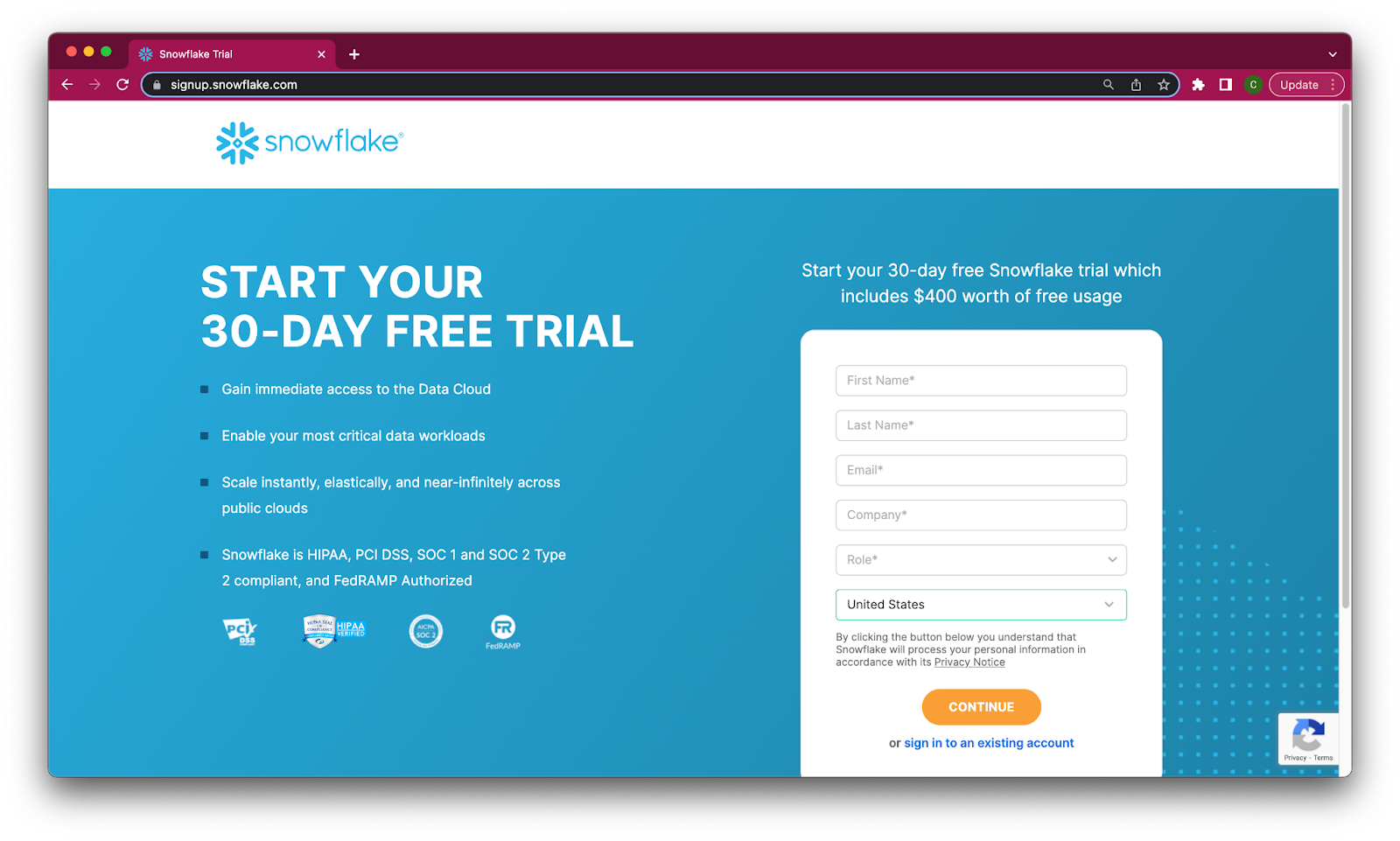

Step 1: Create a Snowflake Trial Account

- Fill out the Snowflake trial account form here. Use an email address that is not associated with an existing Snowflake account.

- When signing up for your Snowflake account, select the region that is physically closest to you and choose Enterprise as your Snowflake edition. Please note that the Snowflake edition, cloud provider, and region used when following this guide do not matter.

- After registering, you will receive an email from Snowflake with an activation link and URL for accessing your trial account. Finish setting up your account following the instructions in the email.

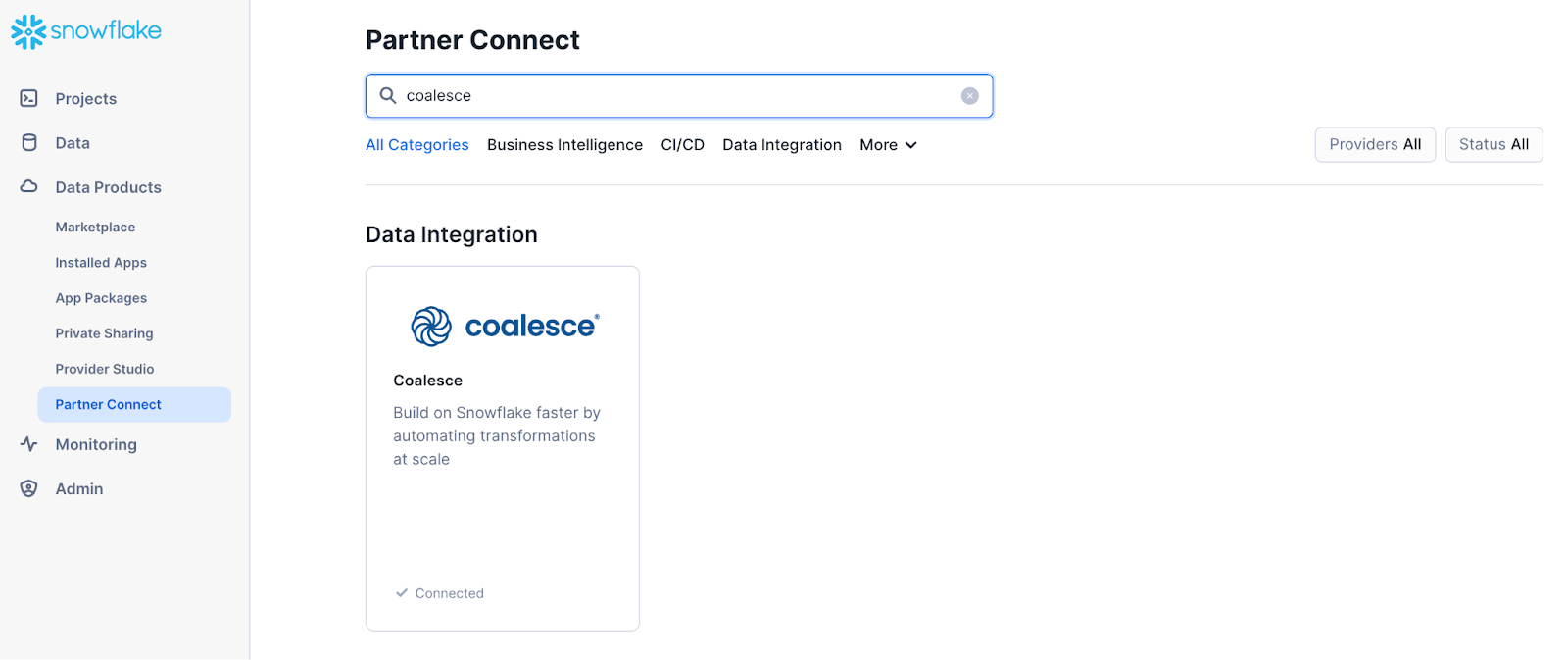

Step 2: Create a Coalesce Trial Account with Snowflake Partner Connect

Once you are logged into your Snowflake account, sign up for a free Coalesce trial account using Snowflake Partner Connect. Check your Snowflake account profile to make sure that it contains your fist and last name.

Once you are logged into your Snowflake account, sign up for a free Coalesce trial account using Snowflake Partner Connect. Check your Snowflake account profile to make sure that it contains your fist and last name.

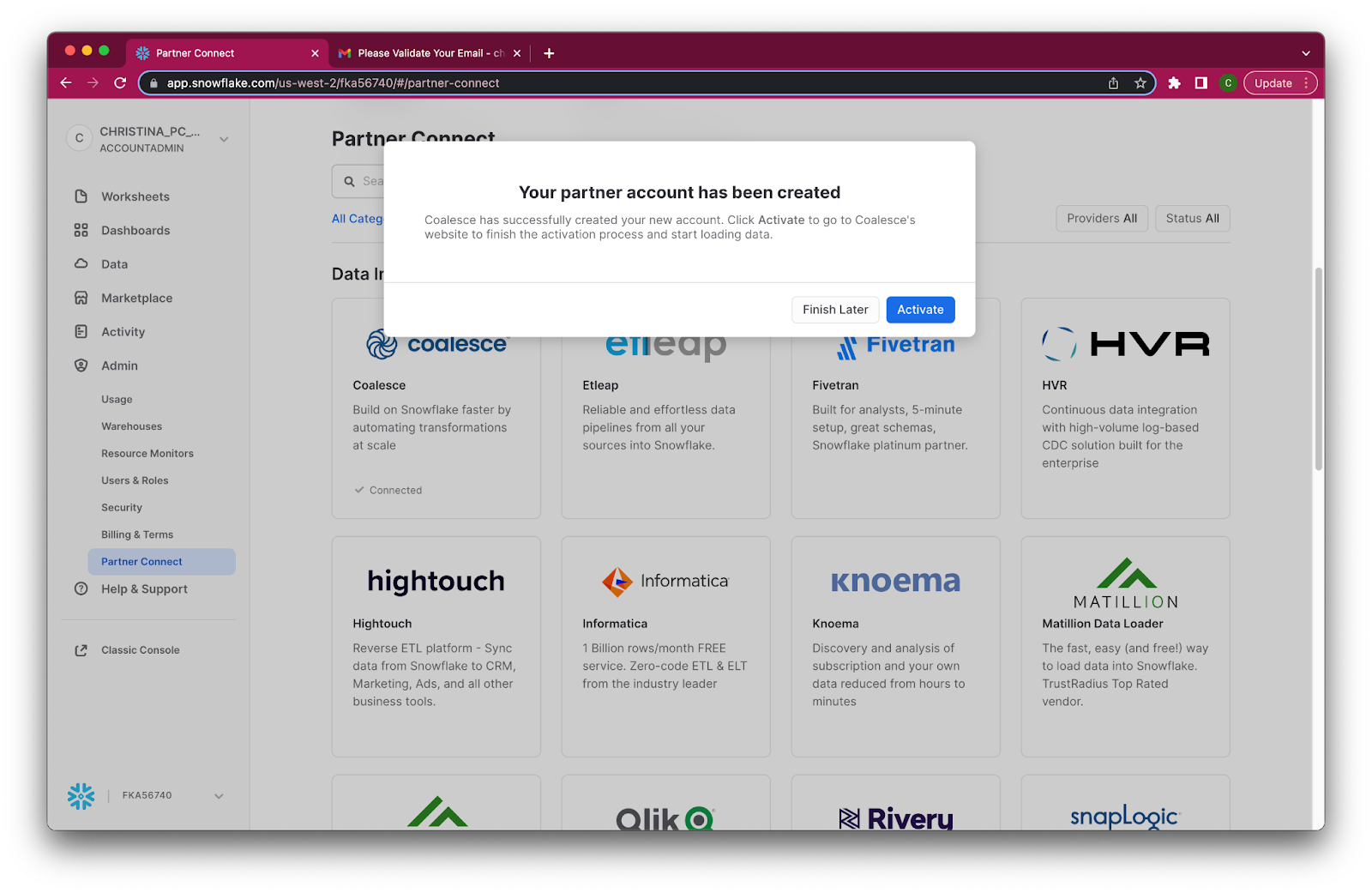

- Select Data Products > Partner Connect in the navigation bar on the left hand side of your screen and search for Coalesce in the search bar.

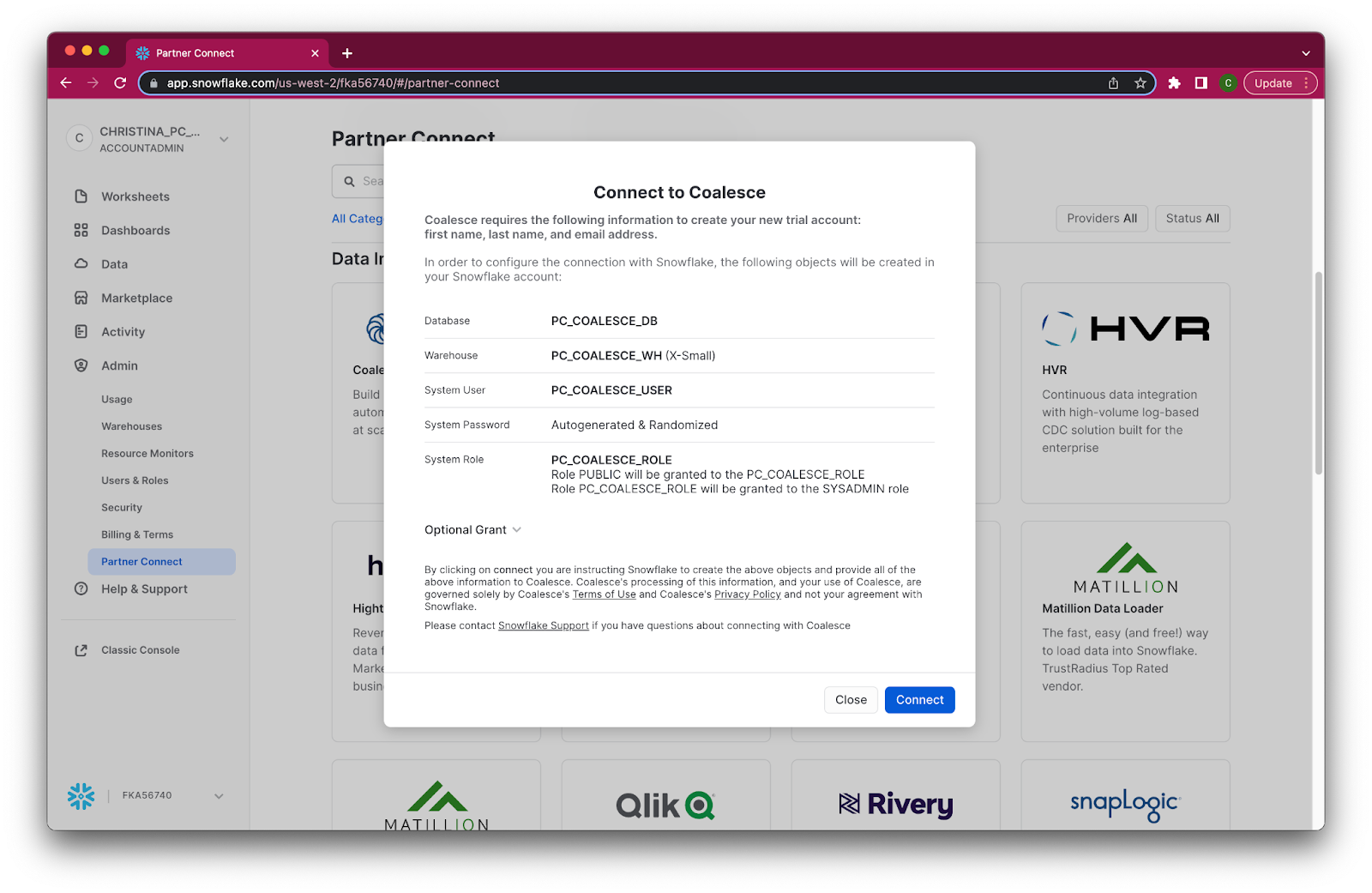

- Review the connection information and then click Connect.

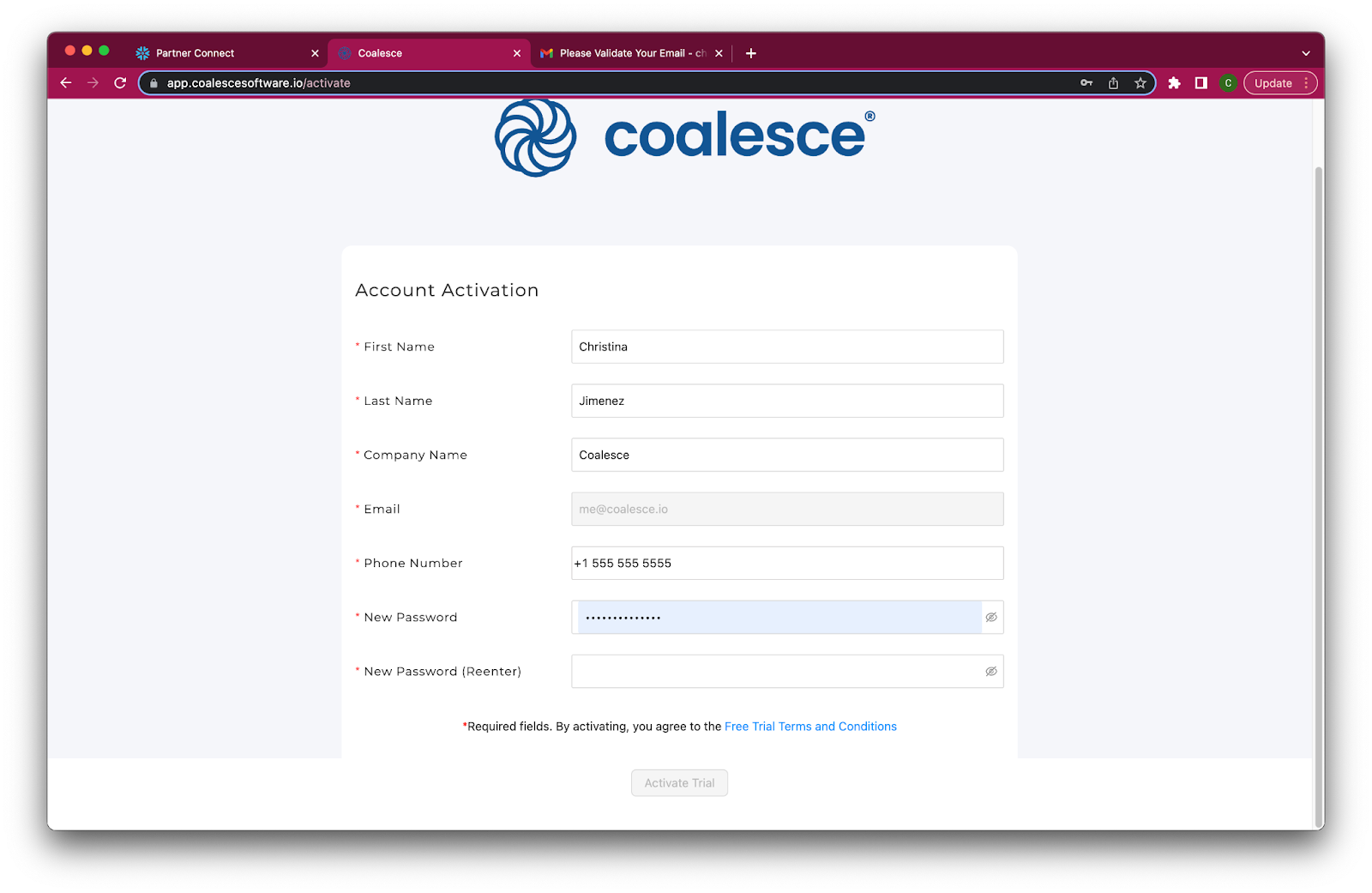

- When prompted, click Activate to activate your account. You can also activate your account later using the activation link emailed to your address.

- Once you've activated your account, fill in your information to complete the activation process.

Congratulations! You've successfully created your Coalesce trial account.

Step 3: Set Up TastyBytes Dataset

- We will be using a M Warehouse size within Snowflake for this lab. You can upgrade this within the admin tab of your Snowflake account.

- In your Snowflake account, click on the Worksheets Tab in the left-hand navigation bar.

- Within Worksheets, click the "+" button in the top-right corner of Snowsight and choose "SQL Worksheet."

- Rename the Worksheet to "Tasty Bytes - Setup."

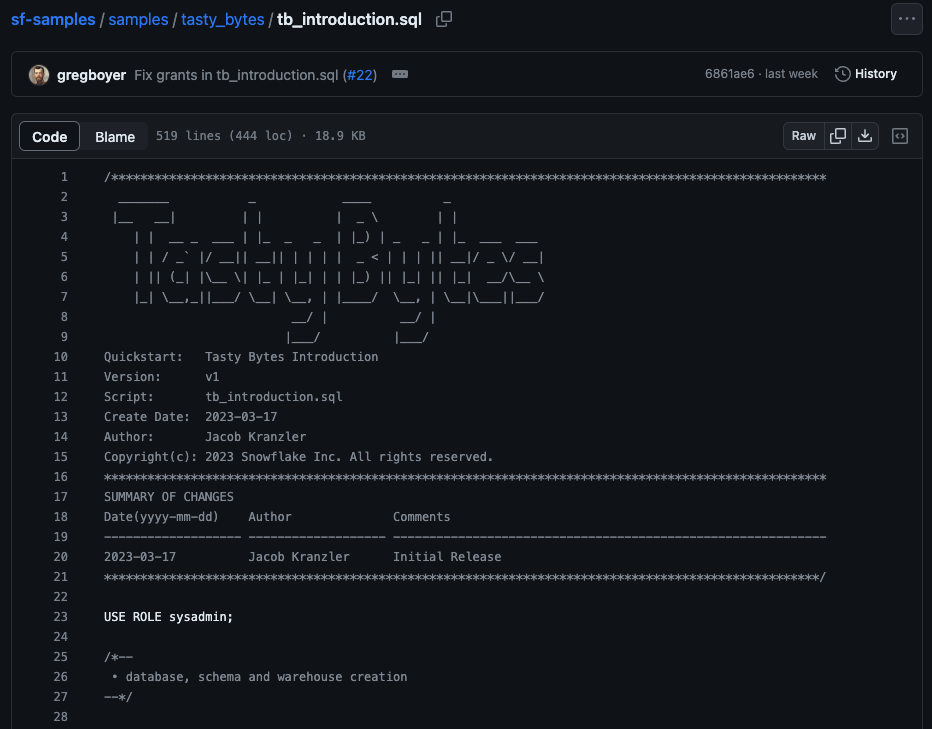

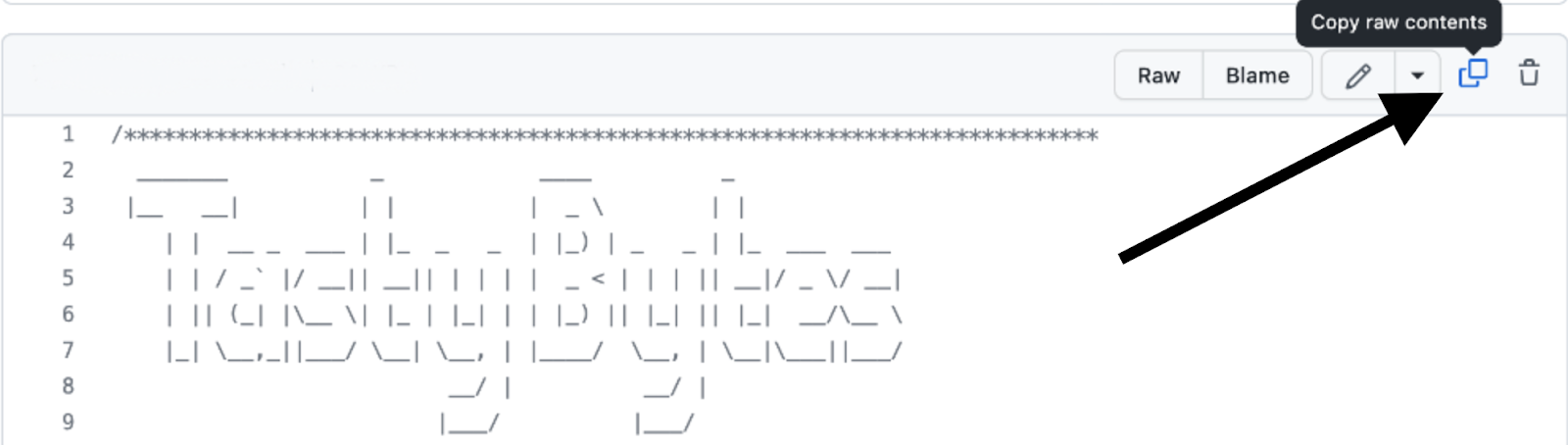

- Navigate to the Tasty Bytes SQL Setup file that is hosted on GitHub.

- Within GitHub, navigate to the right side and click "Copy raw contents". This will copy all of the required SQL into your clipboard.

- Paste the setup SQL from GitHub into your Snowflake Worksheet. Then click inside the worksheet and select All (CMD + A for Mac or CTRL + A for Windows) and Click "► Run".

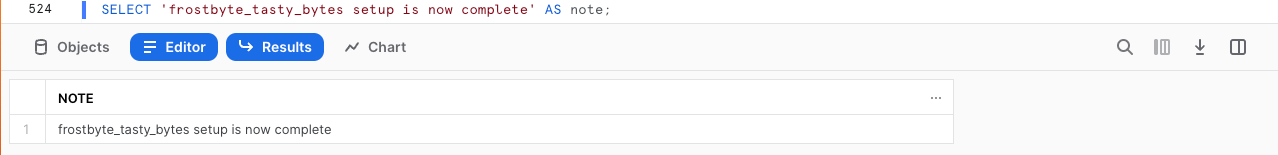

- After clicking "► Run" you will see queries begin to execute. These queries will run one after another with the entire worksheet taking around 5 minutes. Upon completion you will see a message that states stating

frostbyte_tasty_bytes setup is now complete.

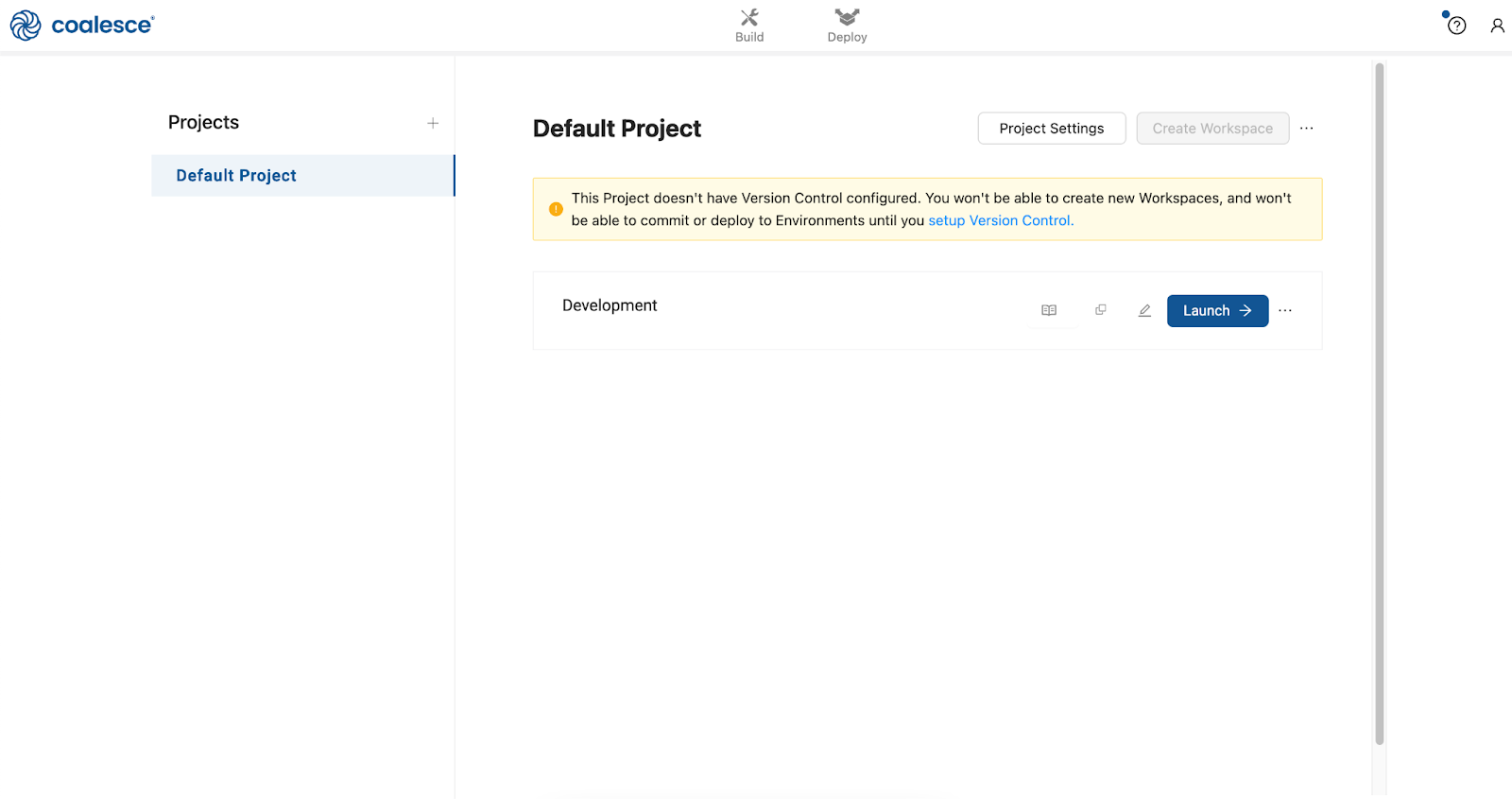

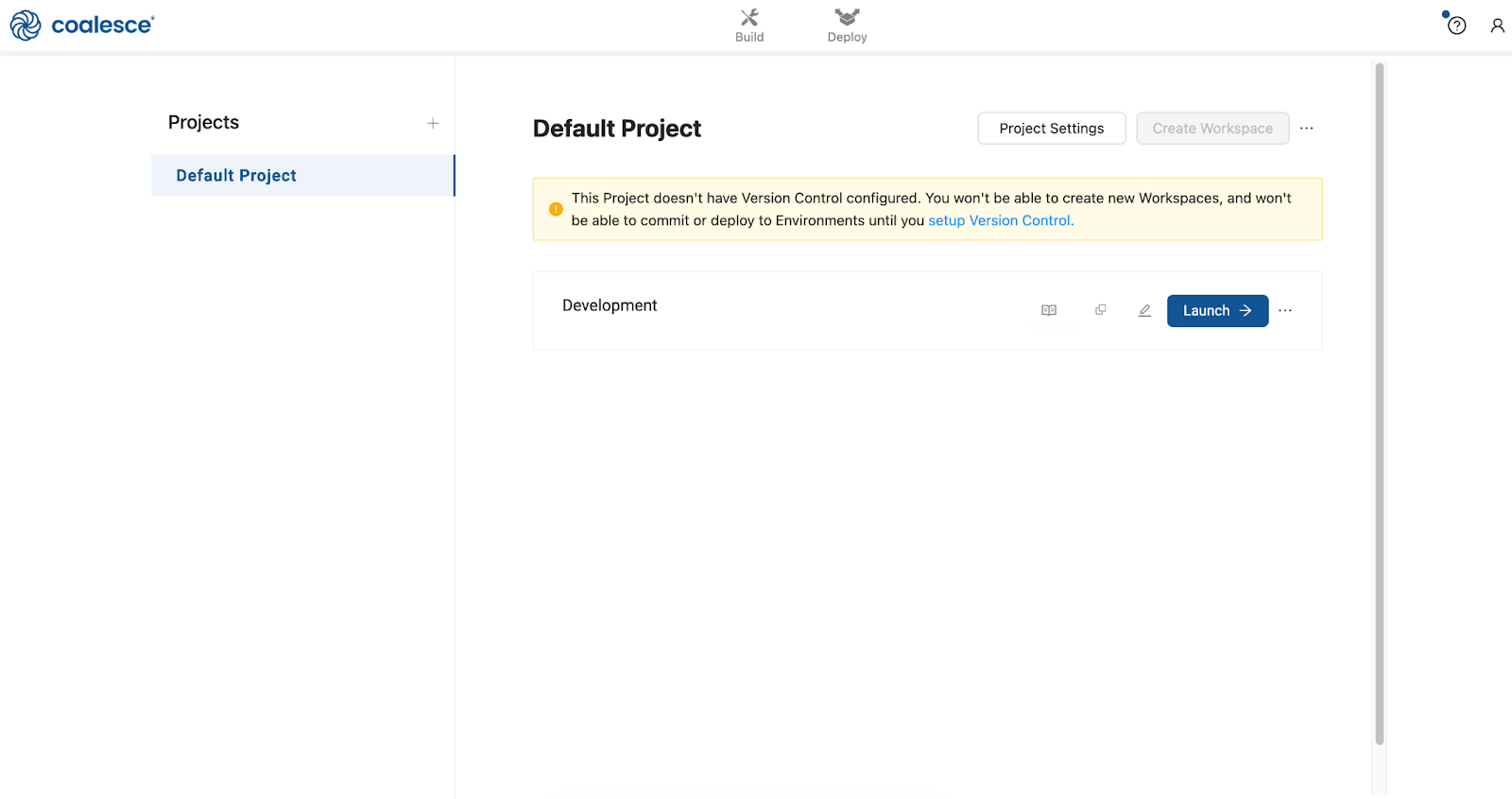

Once you've activated your Coalesce trial account and logged in, you will land in your Projects dashboard. Projects are a useful way of organizing your development work by a specific purpose or team goal, similar to how folders help you organize documents in Google Drive.

Your trial account includes a default Project to help you get started. Click on the Launch button next to your Development Workspace to get started.

Step 4: Add the Cortex Package from the Coalesce Marketplace

You will need to add the ML Forecast node into your Coalesce workspace in order to complete this lab.

- Launch your workspace within your Coalesce account

- Navigate to the build settings in the lower left hand corner of the left sidebar

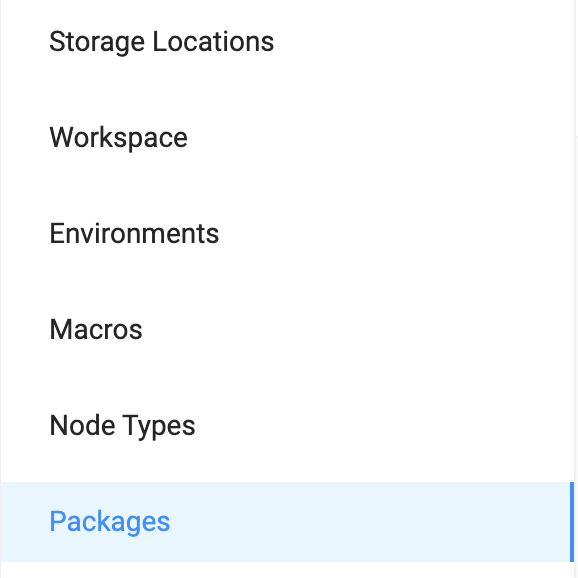

- Select Packages from the Build Settings options

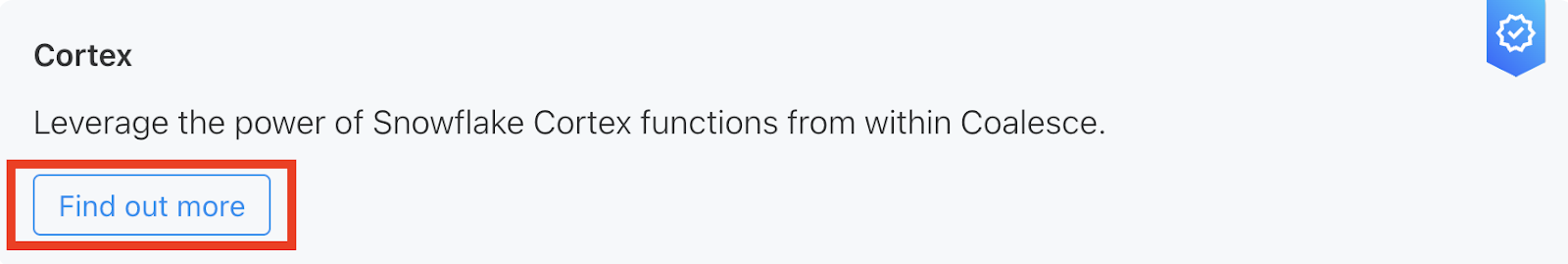

- Select Browse to Launch the Coalesce Marketplace

- Select Find out more within the Cortex package

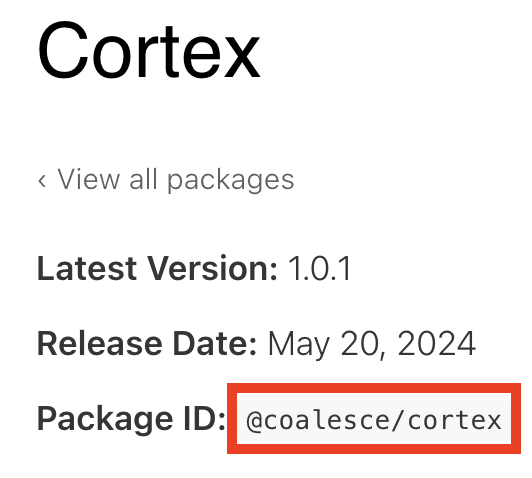

- Copy the package ID from the Cortex page

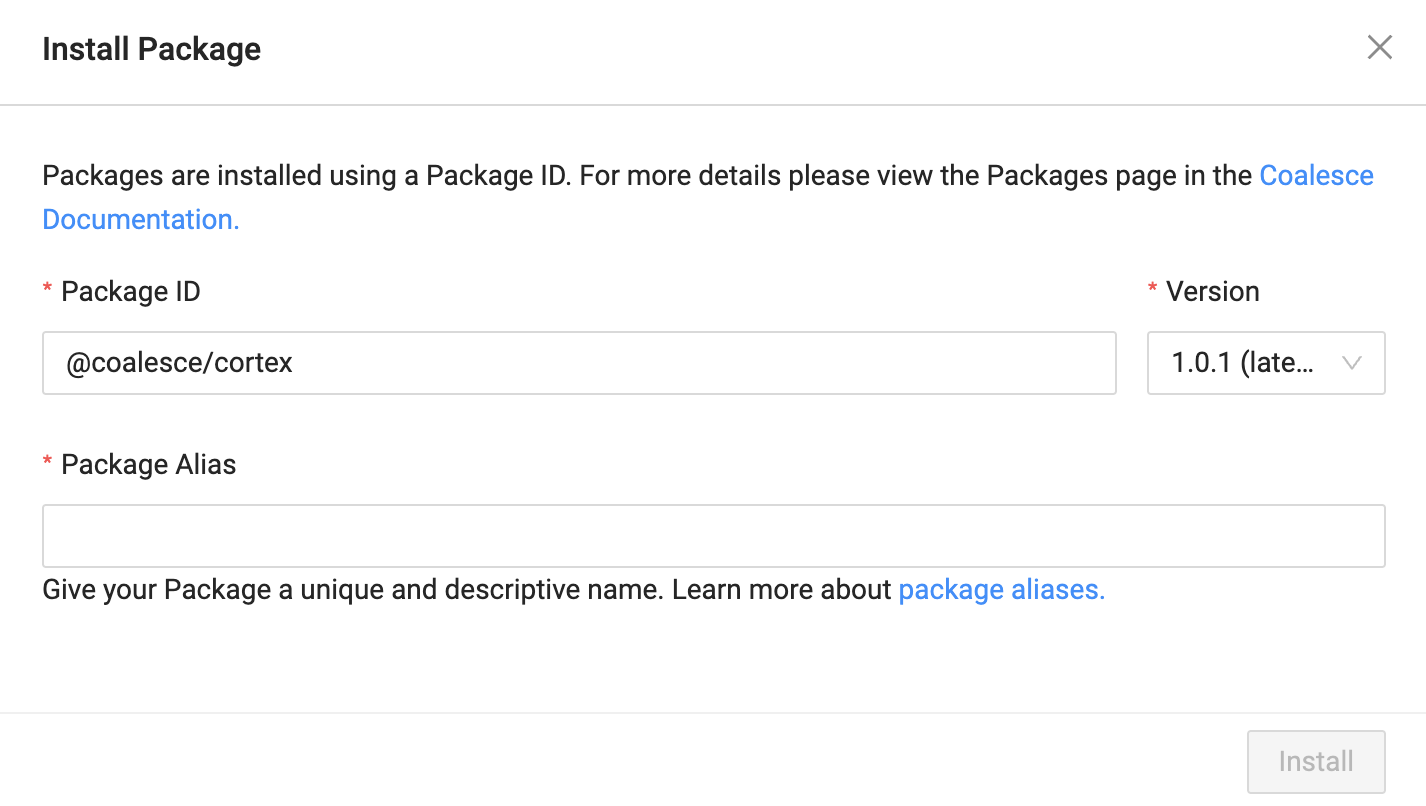

- Back in Coalesce, select the Install button:

- Paste the Package ID into the corresponding input box:

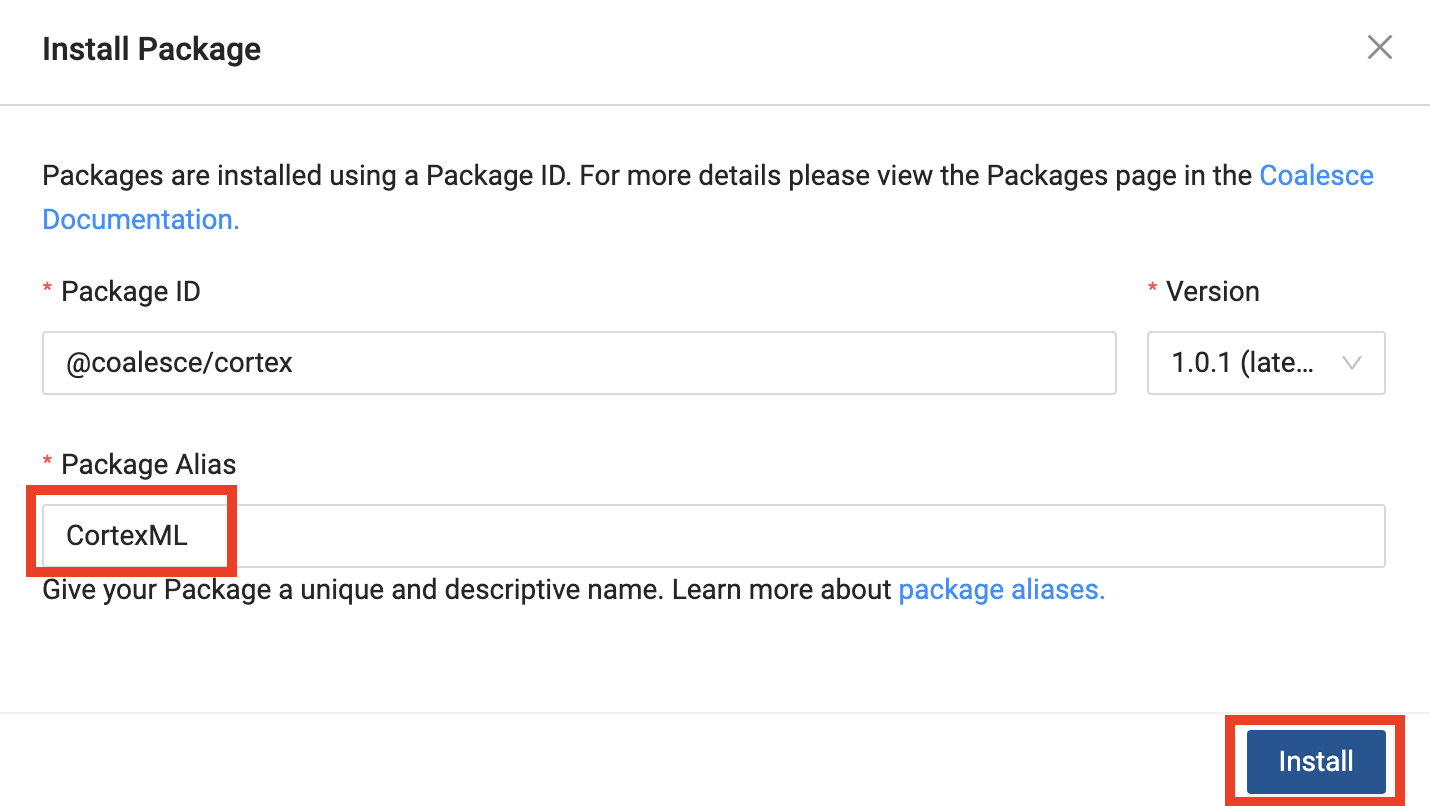

- Give the package an Alias, which is the name of the package that will appear within the Build Interface of Coalesce. And finish by clicking Install.

Let's get familiar with Coalesce by walking through the basic components of the user interface.

Once you've activated your Coalesce trial account and logged in, you will land in your Projects dashboard. Projects are a useful way of organizing your development work by a specific purpose or team goal, similar to how folders help you organize documents in Google Drive.

Your trial account includes a default Project to help you get started.

- Launch your Development Workspace by clicking the Launch button and navigate to the Build Interface of the Coalesce UI. This is where you can create, modify and publish nodes that will transform your data.

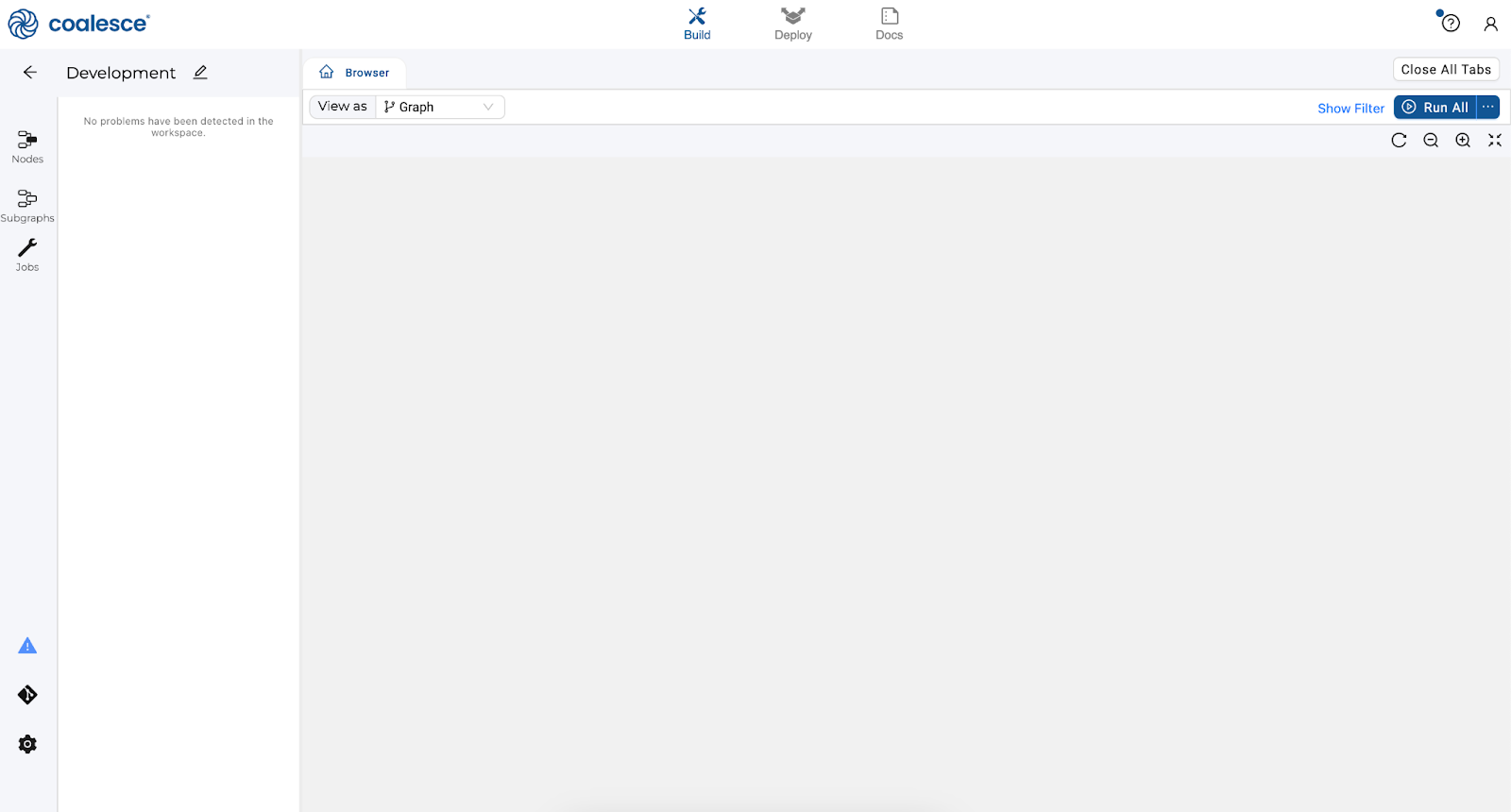

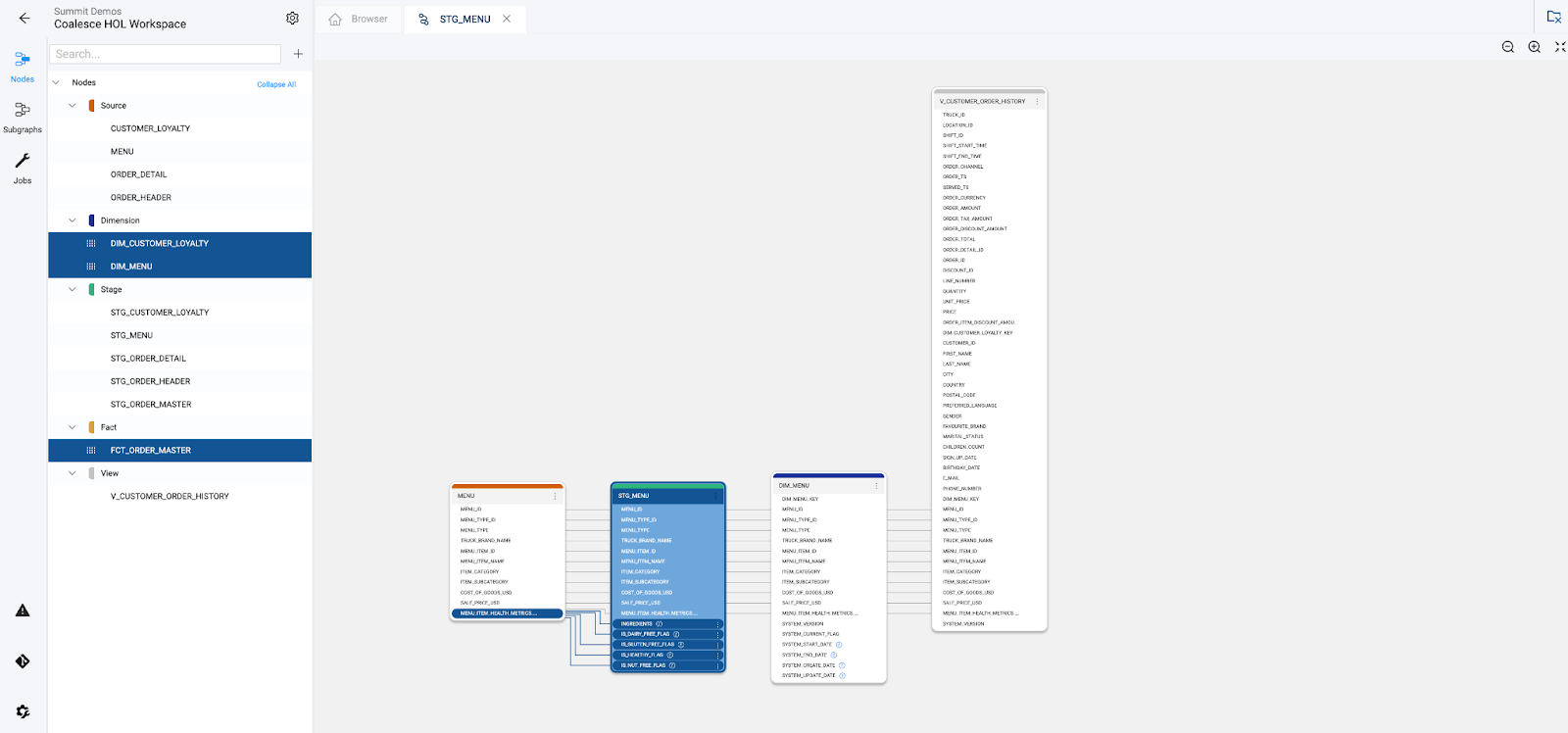

Nodes are visual representations of objects in Snowflake that can be arranged to create a Directed Acyclic Graph (DAG or Graph). A DAG is a conceptual representation of the nodes within your data pipeline and their relationships to and dependencies on each other.

- In the Browser tab of the Build interface, you can visualize your node graph using the Graph, Node Grid, and Column Grid views. In the upper right hand corner, there is a person-shaped icon where you can manage your account and user settings.

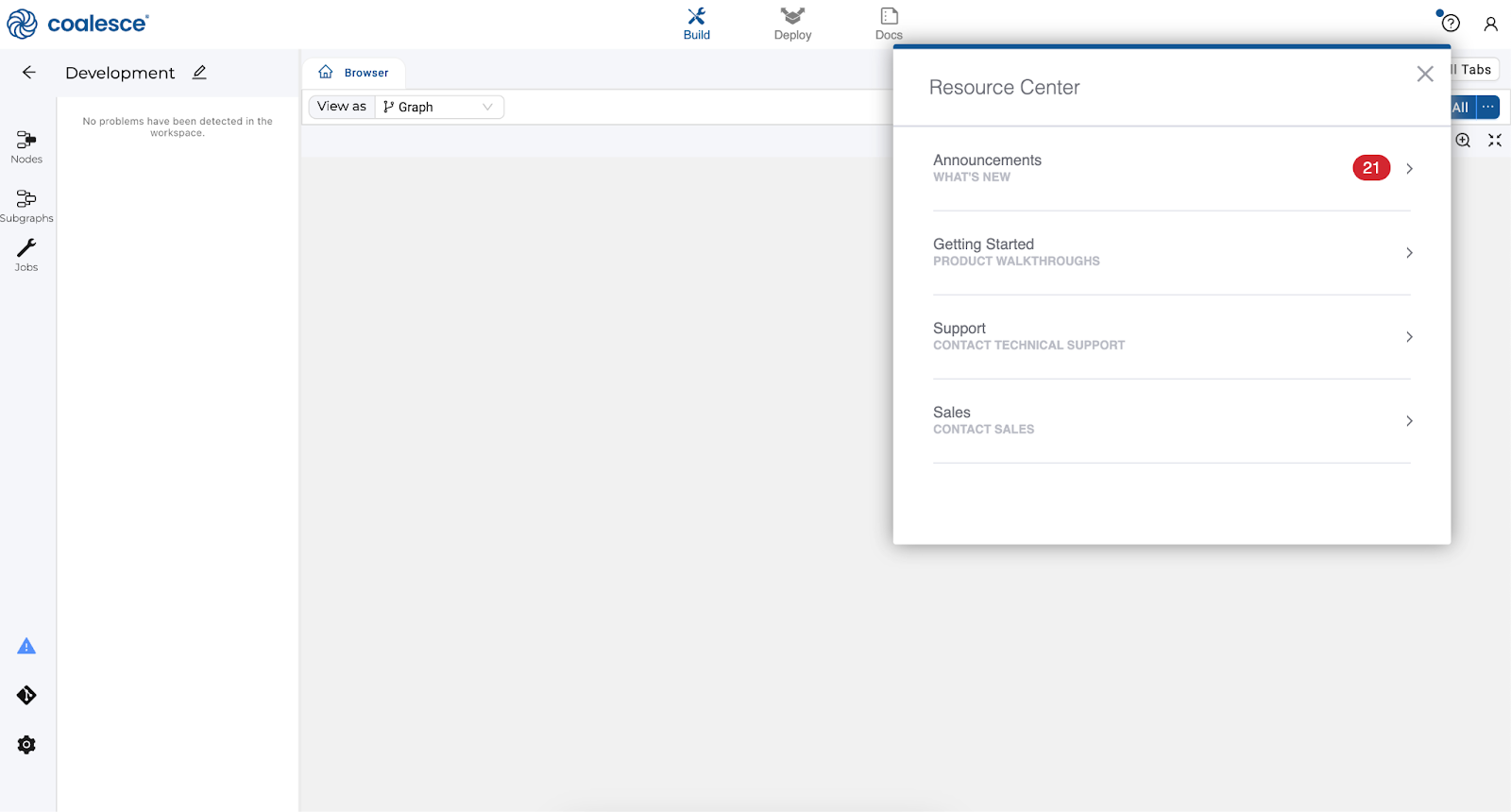

- By clicking the question mark icon, you can access the Resource Center to find product guides, view announcements, request support, and share feedback.

- The Browser tab of the Build interface is where you'll build your pipelines using nodes. You can visualize your graph of these nodes using the Graph, Node Grid, and Column Grid views. While this area is currently empty, we will build out nodes and our Graph in subsequent steps of this lab.

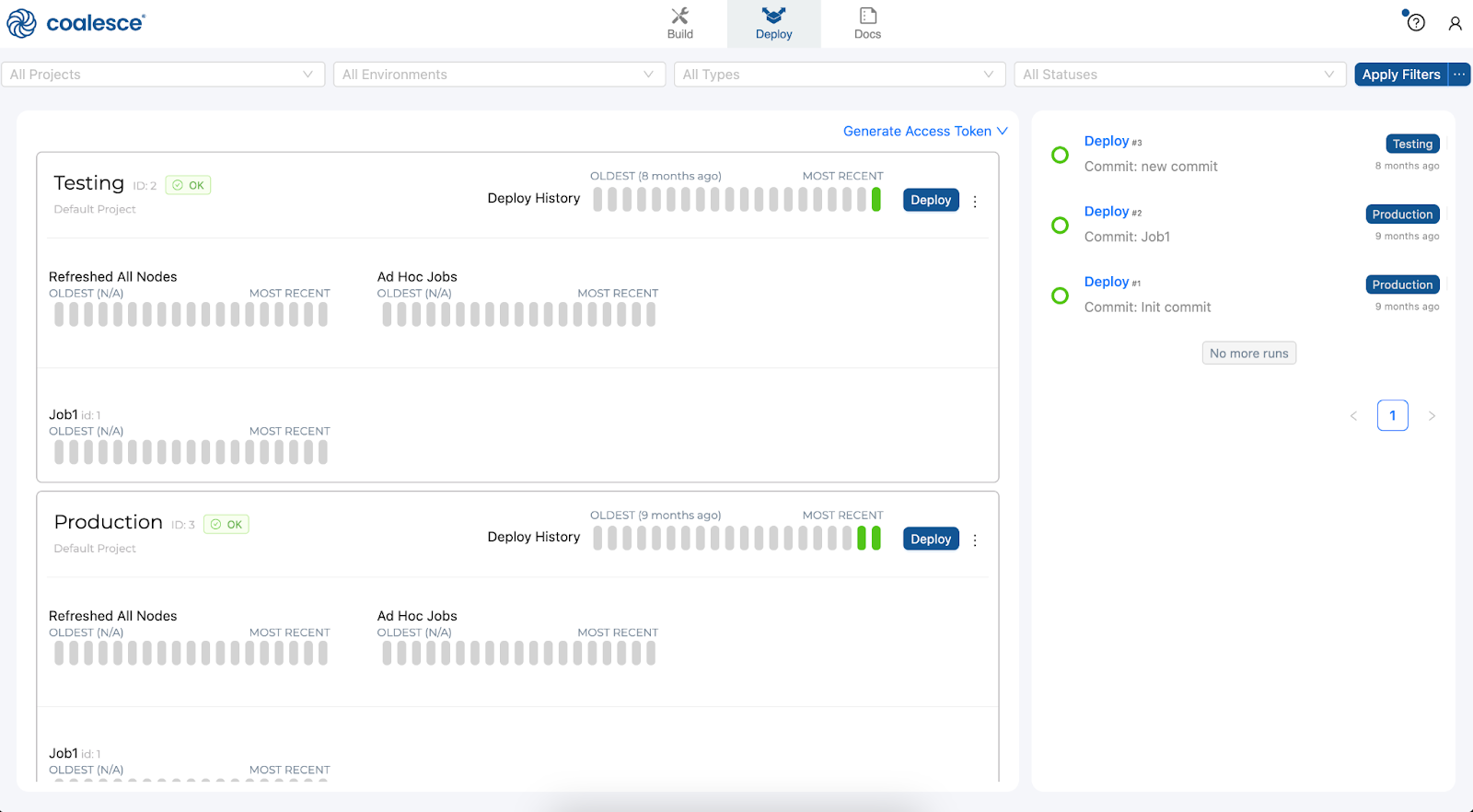

- Next to the Build interface is the Deploy interface. This is where you will push your pipeline to other environments such as Testing or Production.

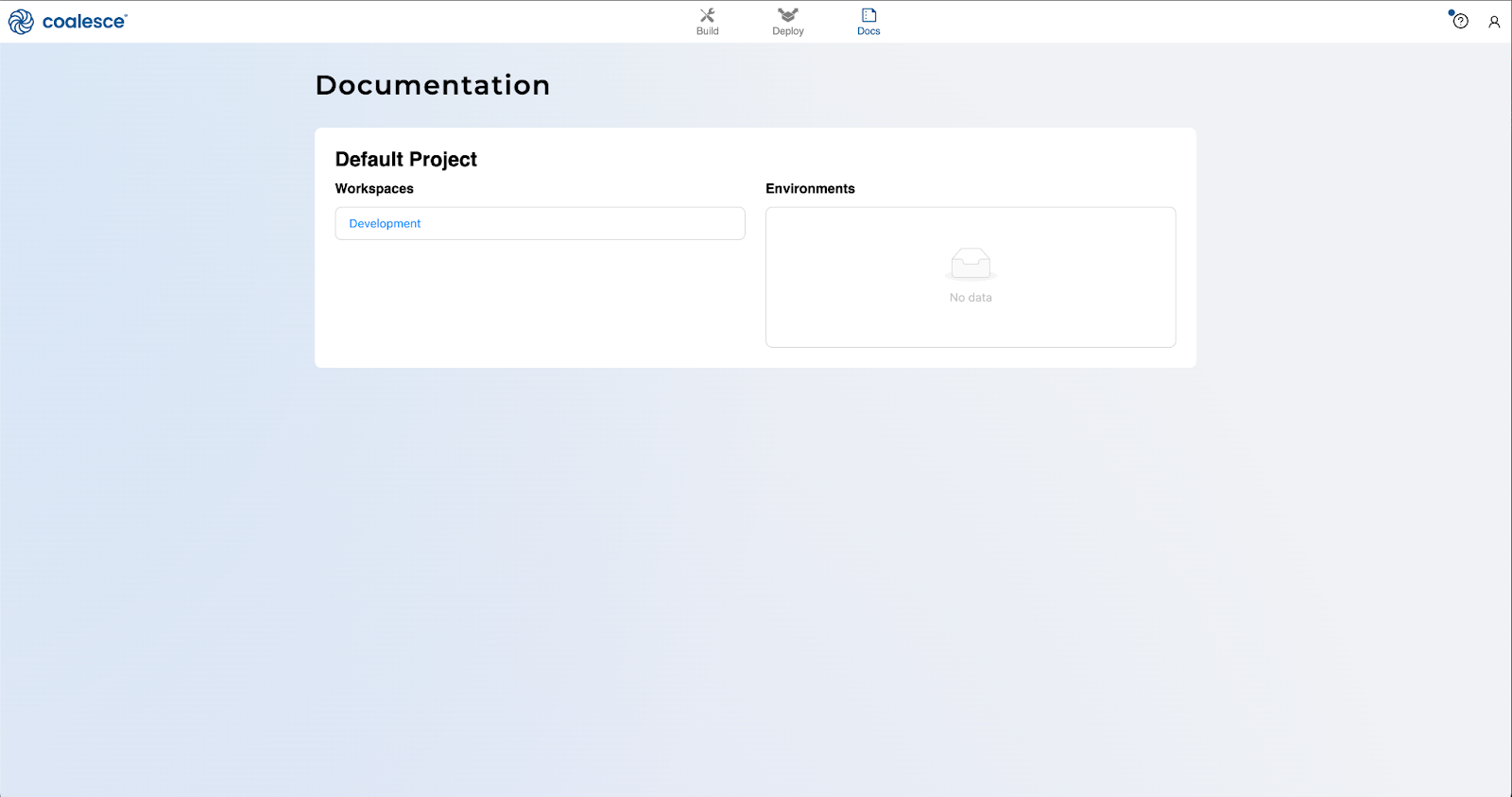

- Next to the Deploy interface is the Docs interface. Documentation is an essential step in building a sustainable data architecture, but is sometimes put aside to focus on development work to meet deadlines. To help with this, Coalesce automatically generates and updates documentation as you work.

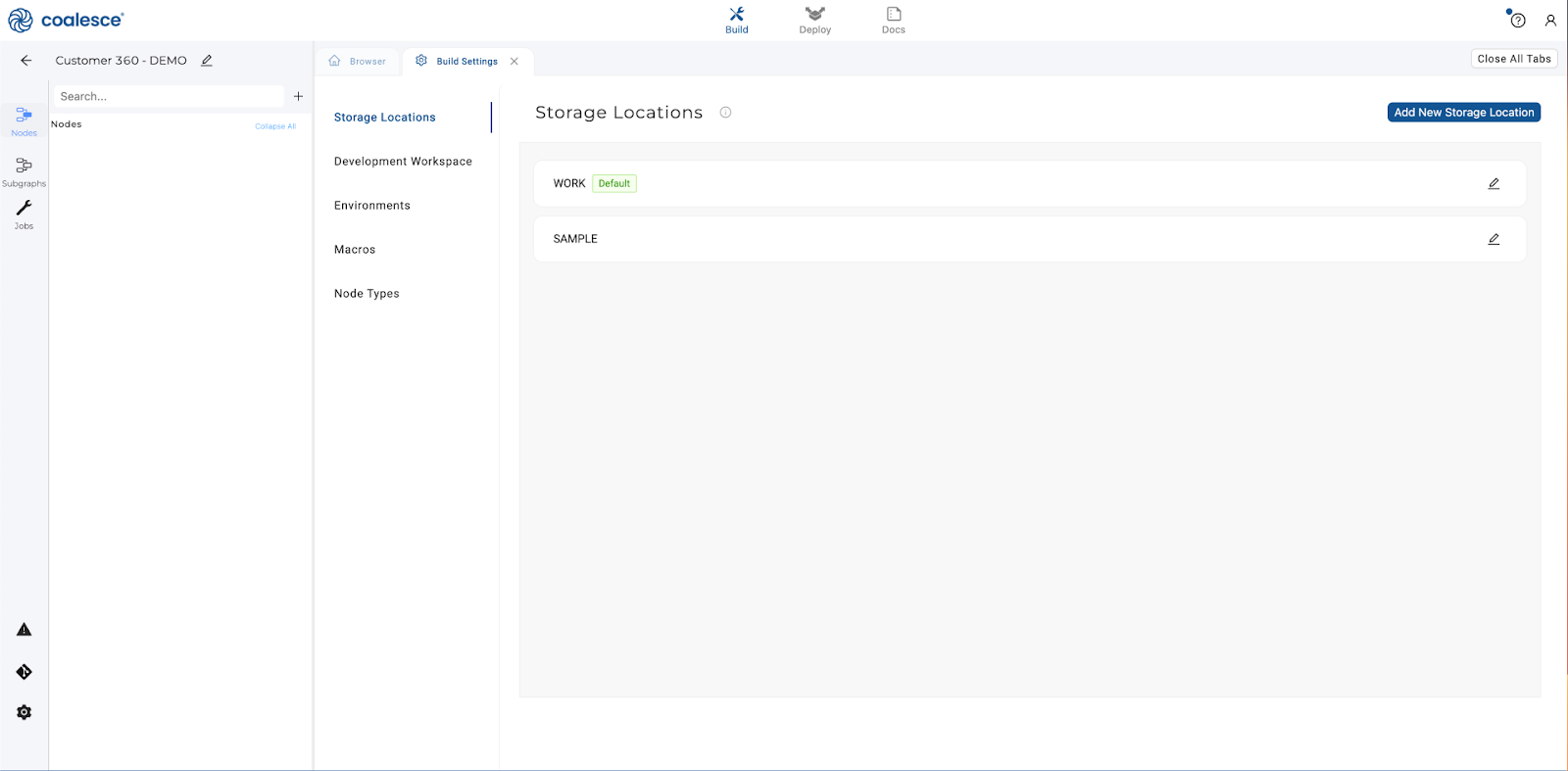

Before you can begin transforming your data, you will need to configure storage locations. Storage locations represent a logical destination in Snowflake for your database objects such as views and tables.

- To add storage locations, navigate to the left side of your screen and click on the Build settings cog.

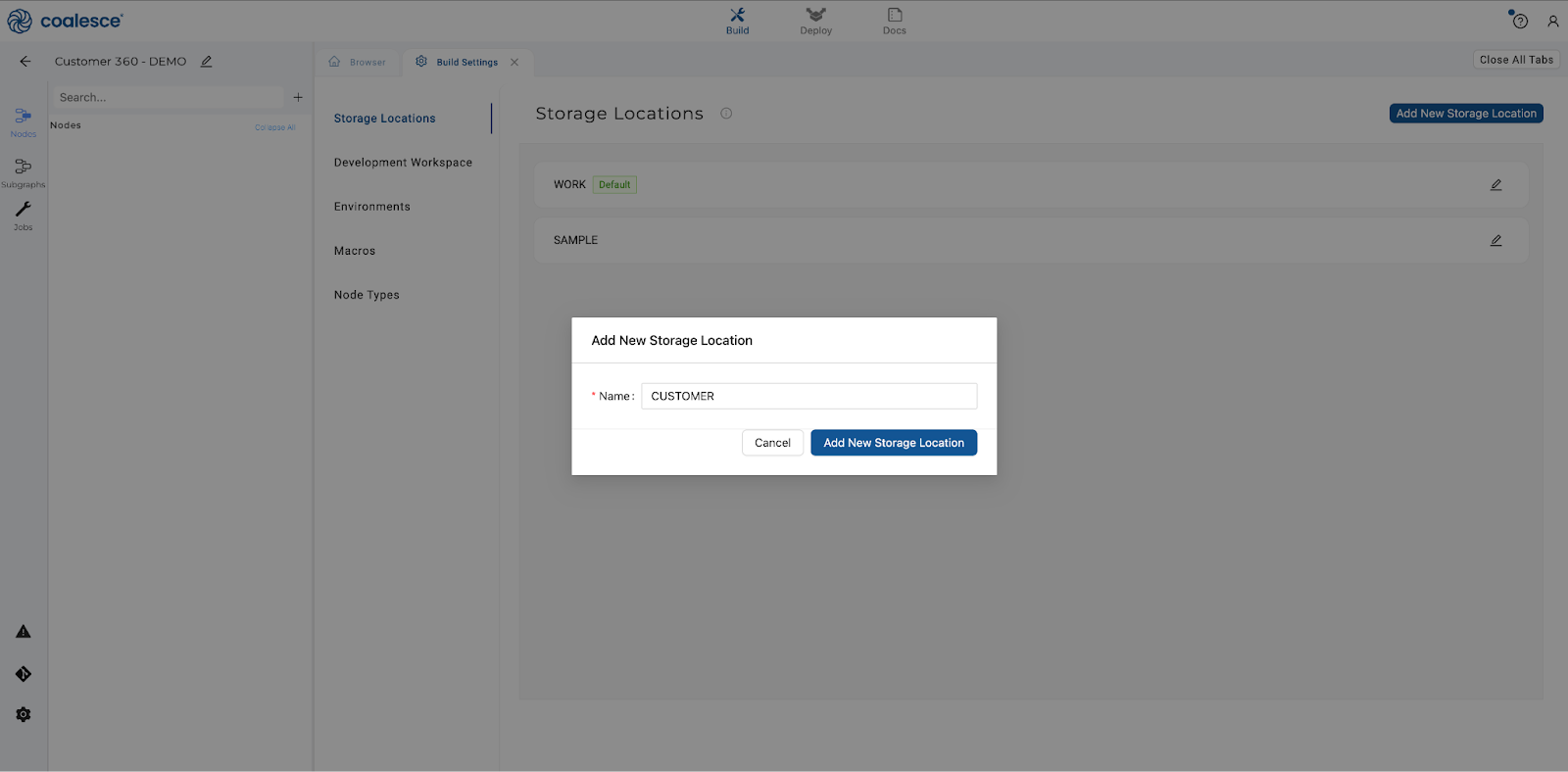

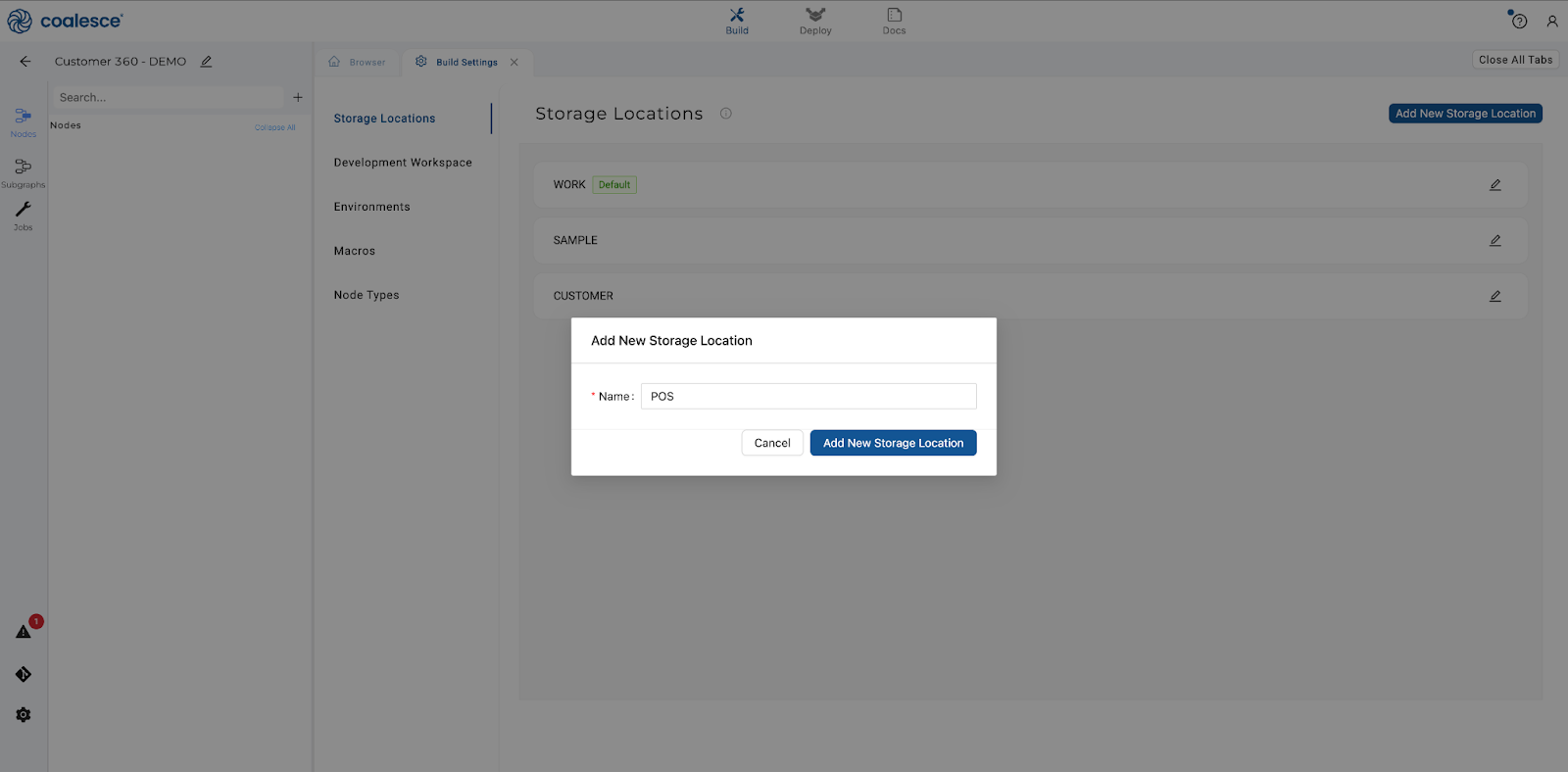

- Click the "Add New Storage Locations" button and name it

CUSTOMERfor your raw customer data.

- Create another storage location called

POSfor your raw point-of-sale data. Coalesce has already created a database for our work to be mapped to (yourWORKlocation).

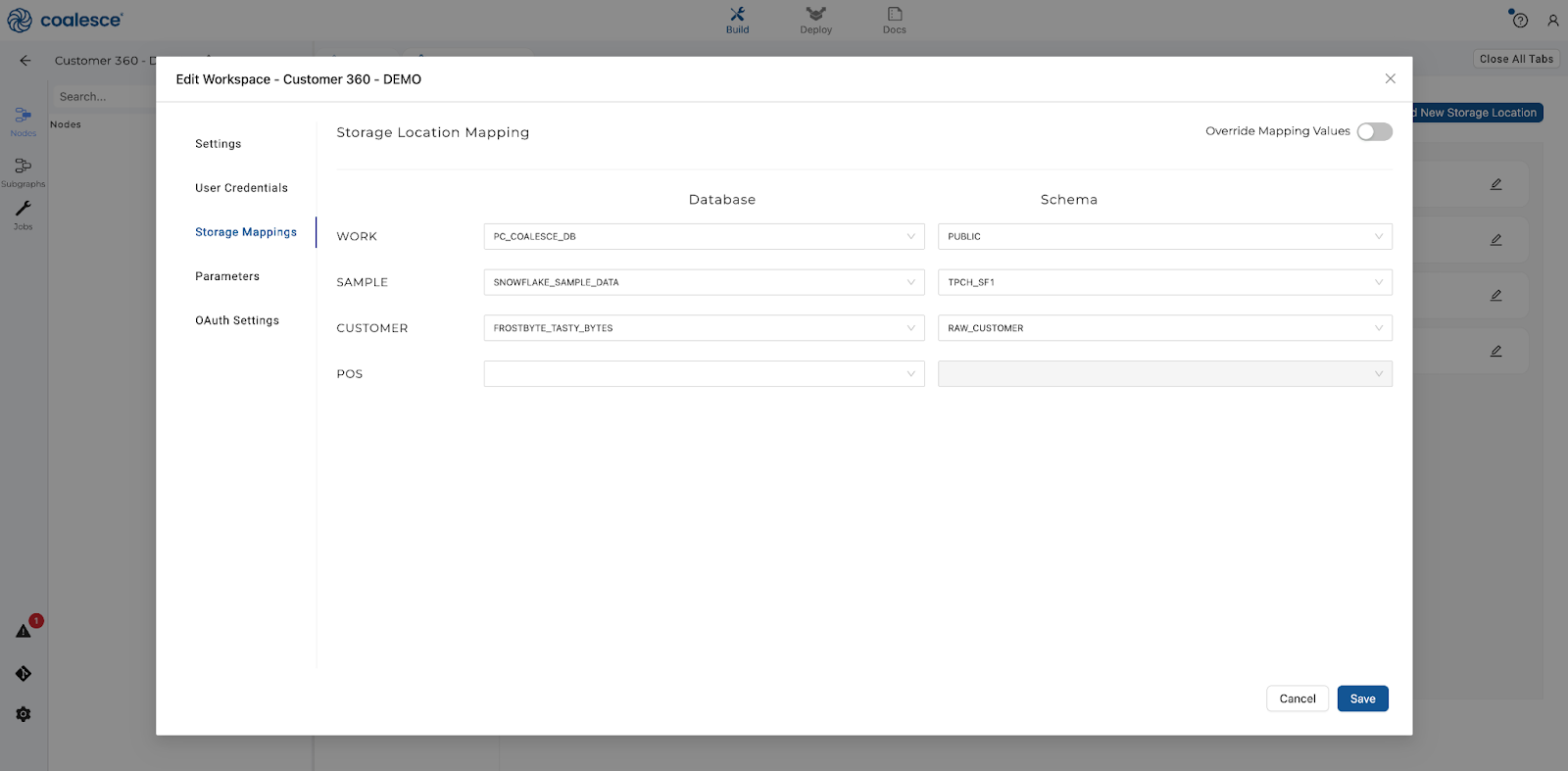

- Now map your storage locations to their logical destinations in Snowflake. In the upper left corner next to your Workspace name, click on the pencil icon to open your Workspace settings. Click on Storage Mappings and map your

CUSTOMERlocation to theFROSTBYTE_TASTY_BYTESdatabase and theRAW_CUSTOMERschema.

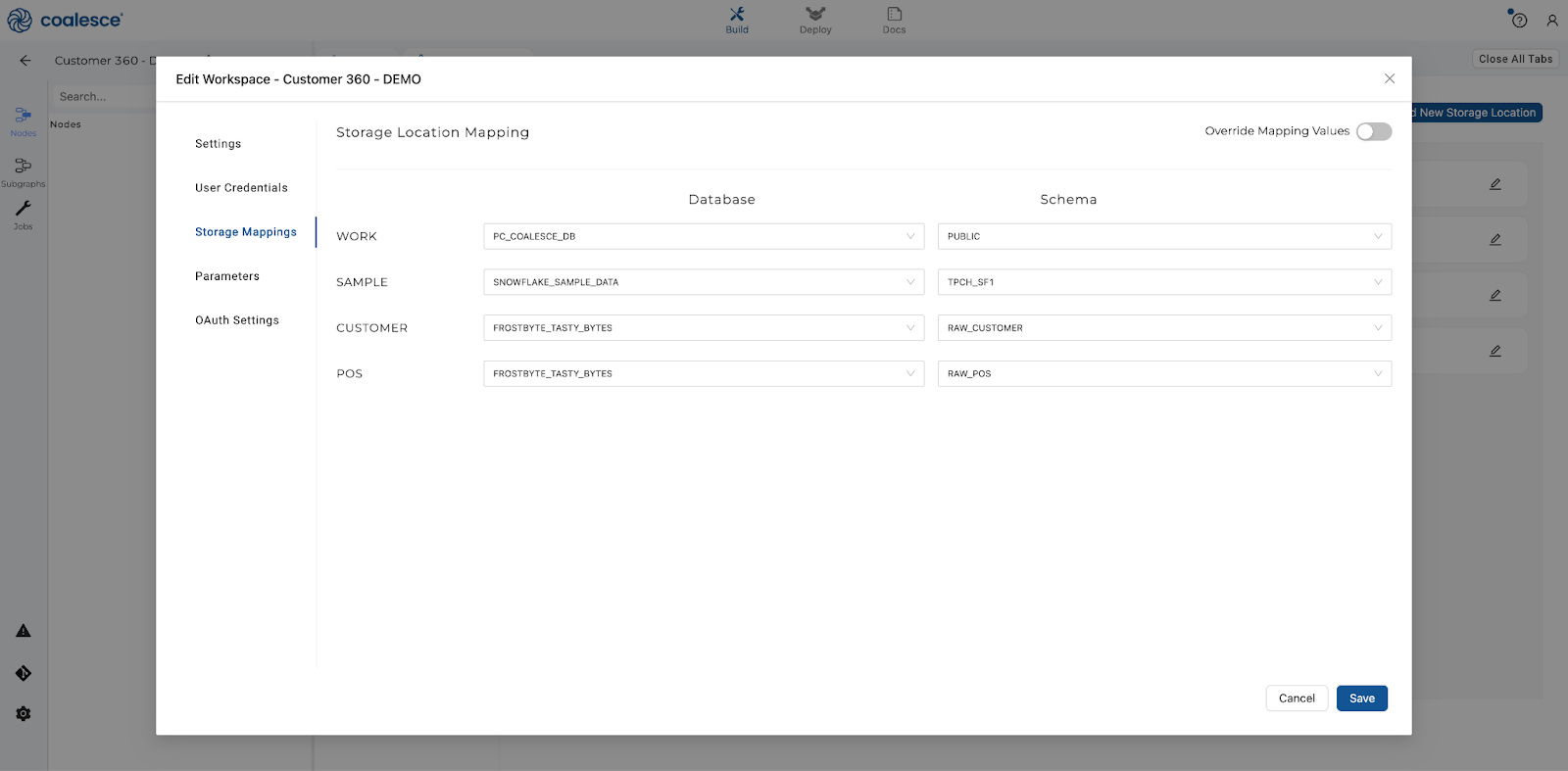

- Map your

POSlocation to theFROSTBYTE_TASTY_BYTESdatabase and theRAW_POSschema. Keep yourWORKlocation mappings the same. Select Save to ensure Coalesce stores your mappings.

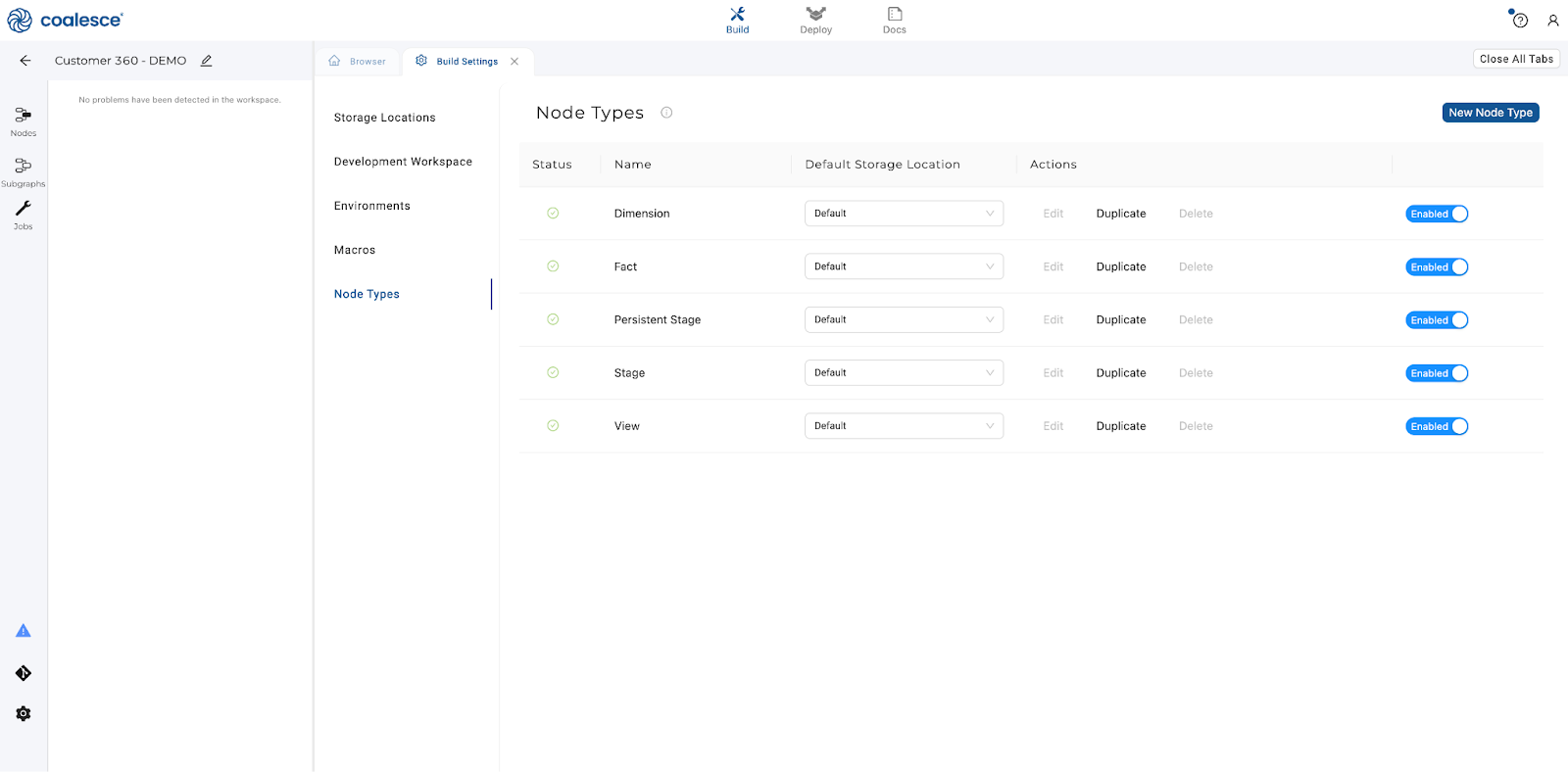

- Go back to the build settings and click on Node Types and then click the toggle button next to View to enable View node types. Now you're ready to start building your pipeline!

Let's start to build the foundation of your data pipeline by creating a Graph (DAG) and adding data in the form of Source nodes.

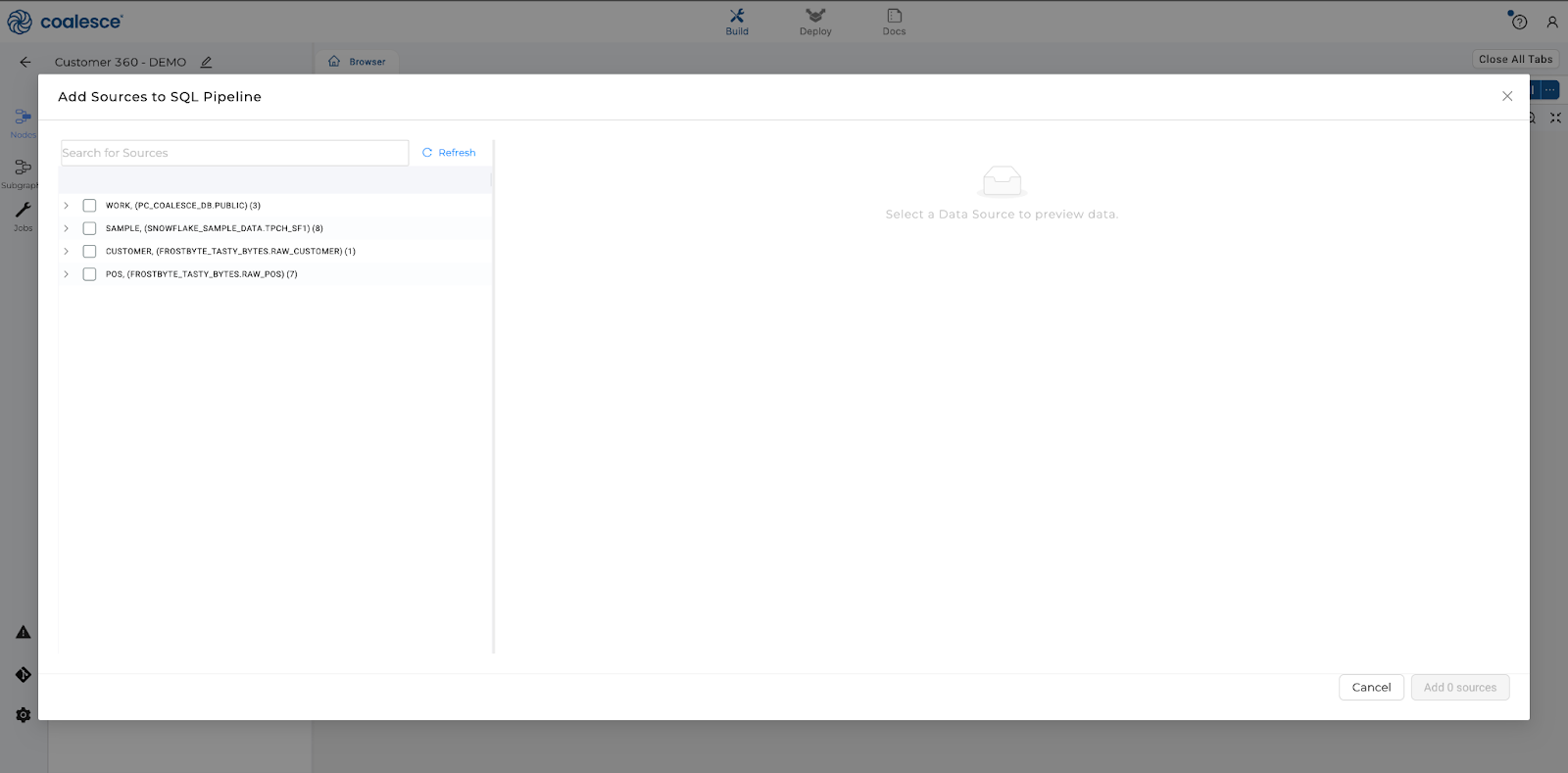

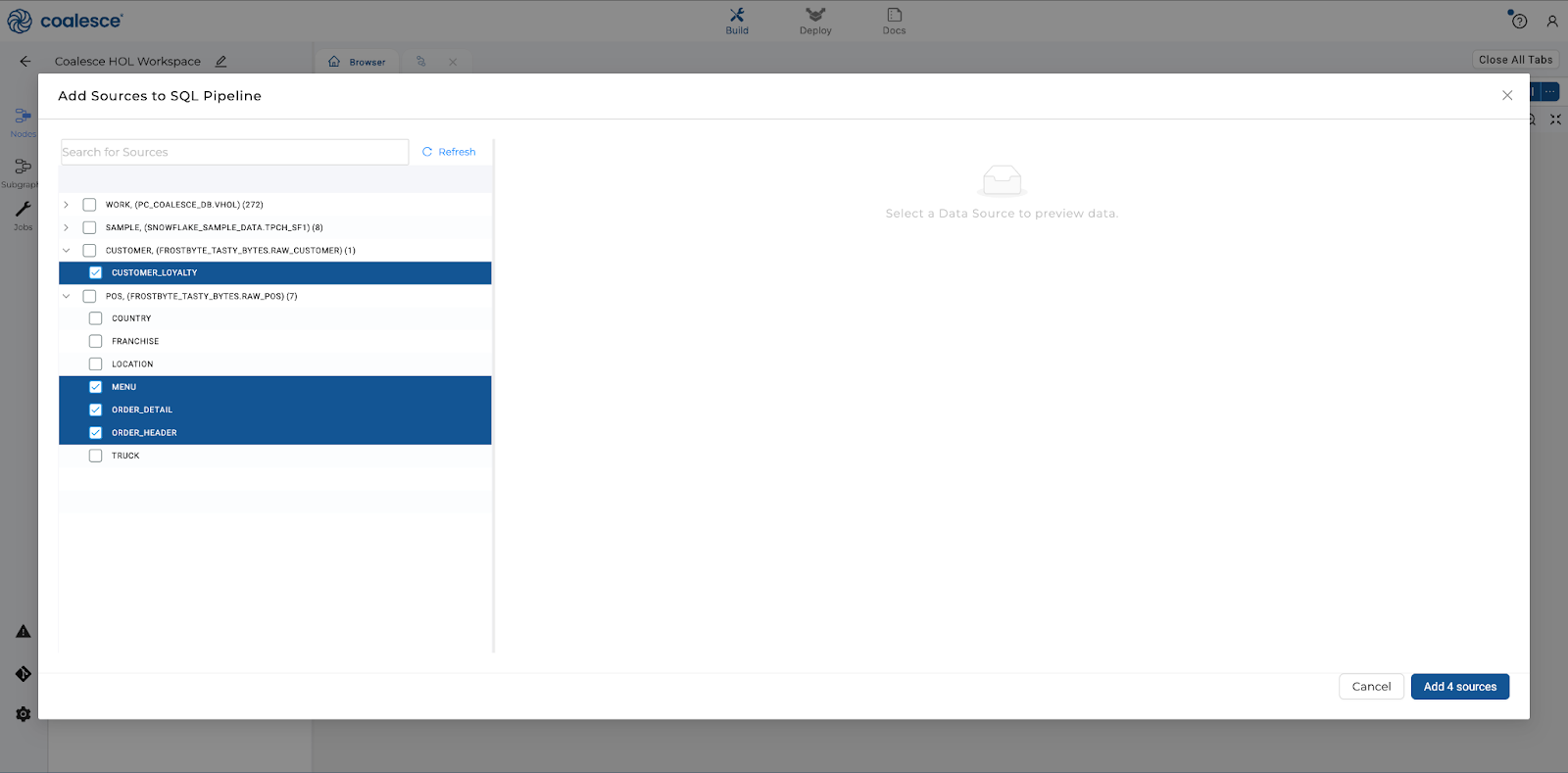

- Start in the Build interface and click on Nodes in the left sidebar (if not already open). Click the + icon and select Add Sources.

- Select all the

CUSTOMERsource nodes(CUSTOMER_LOYALTY)and theMENU,ORDER_DETAIL,ORDER_HEADERfrom thePOSsource storage location. Alternatively, if available, you can useORDER_HEADER_14MandORDER_DETAIL_38M.Then click the Add Sources button.

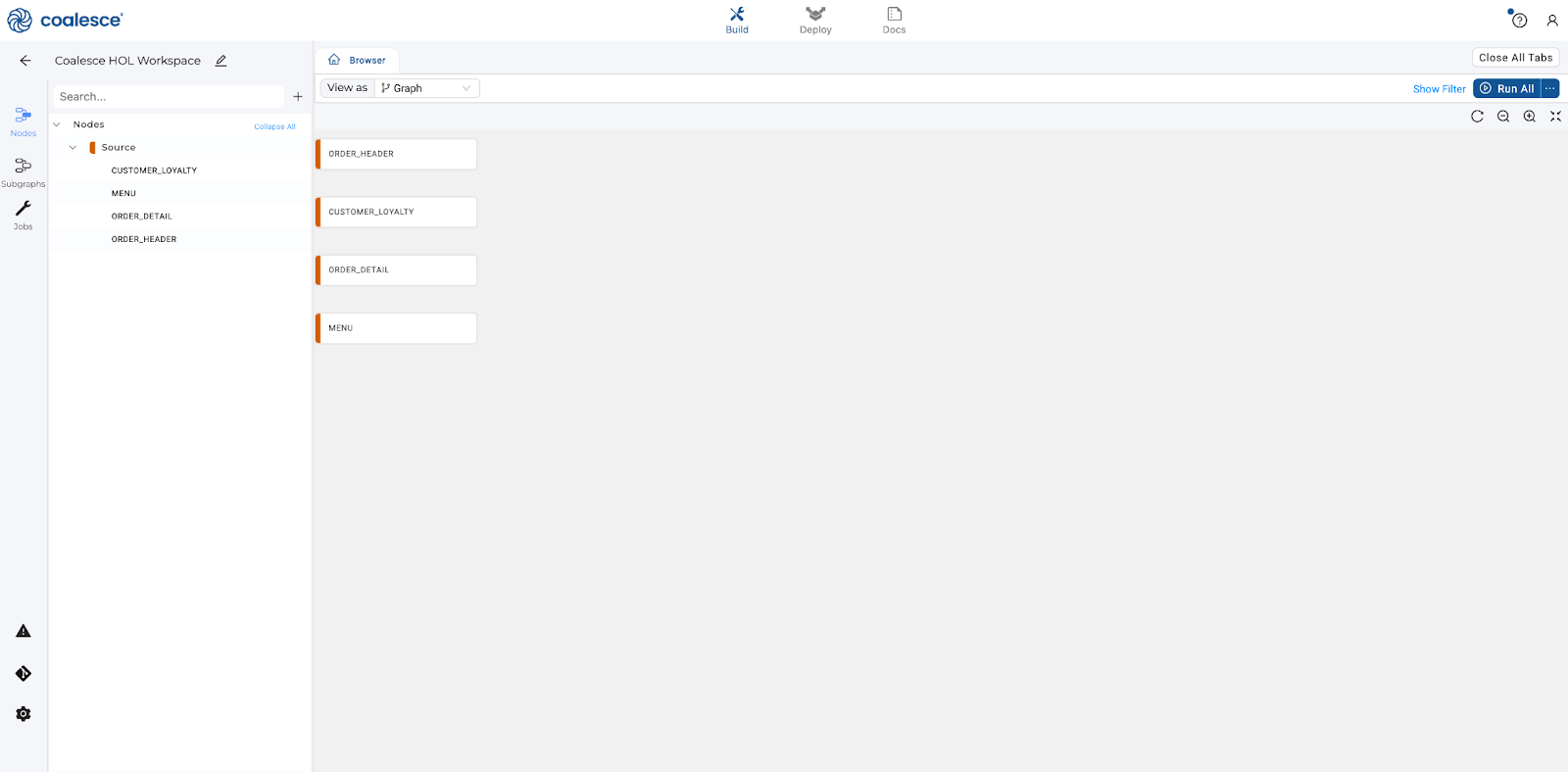

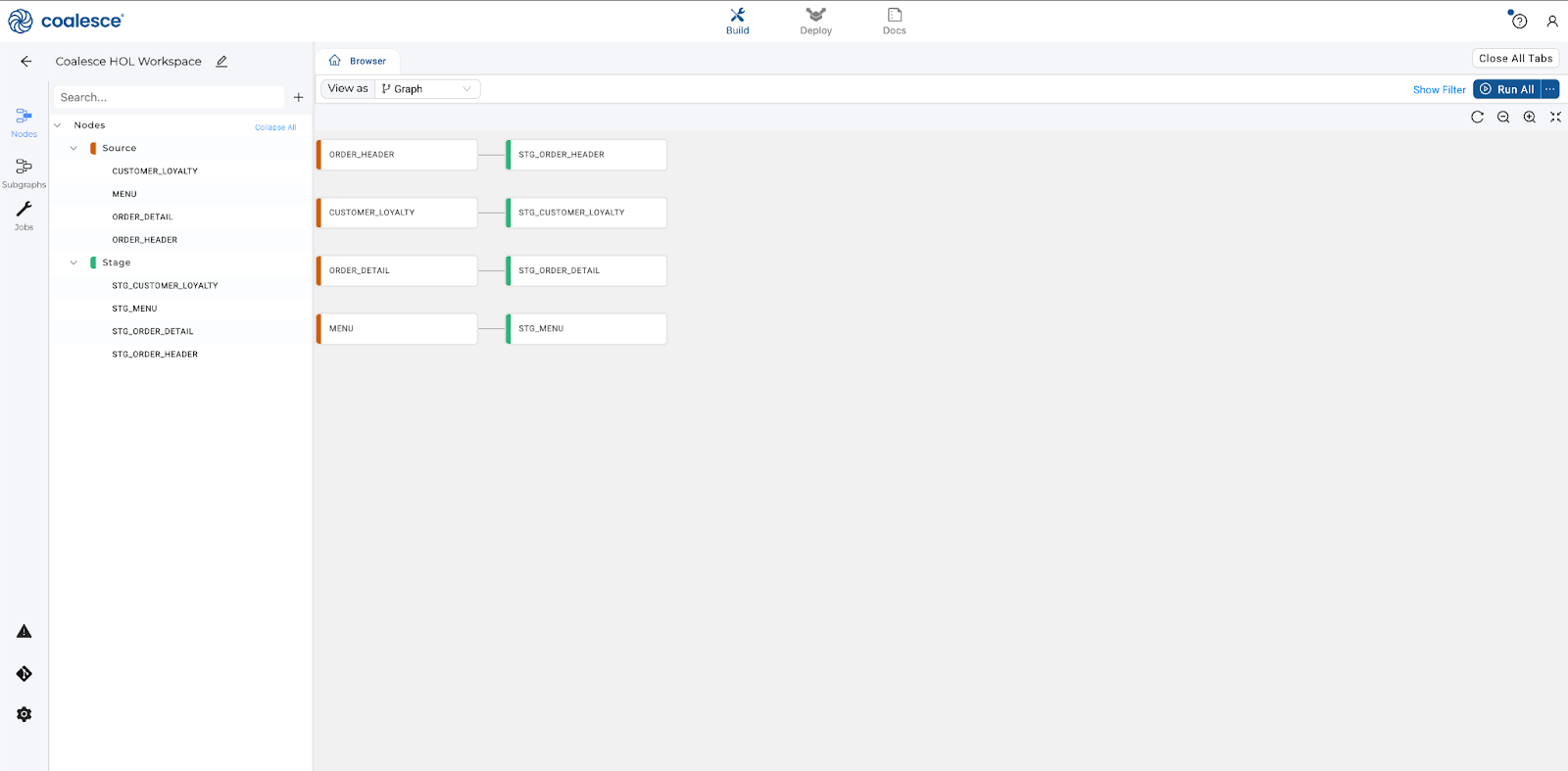

- You'll now see your graph populated with your Source nodes. Note that they are designated in red. Each node type in Coalesce has its own color associated with it, which helps with visual organization when viewing a Graph.

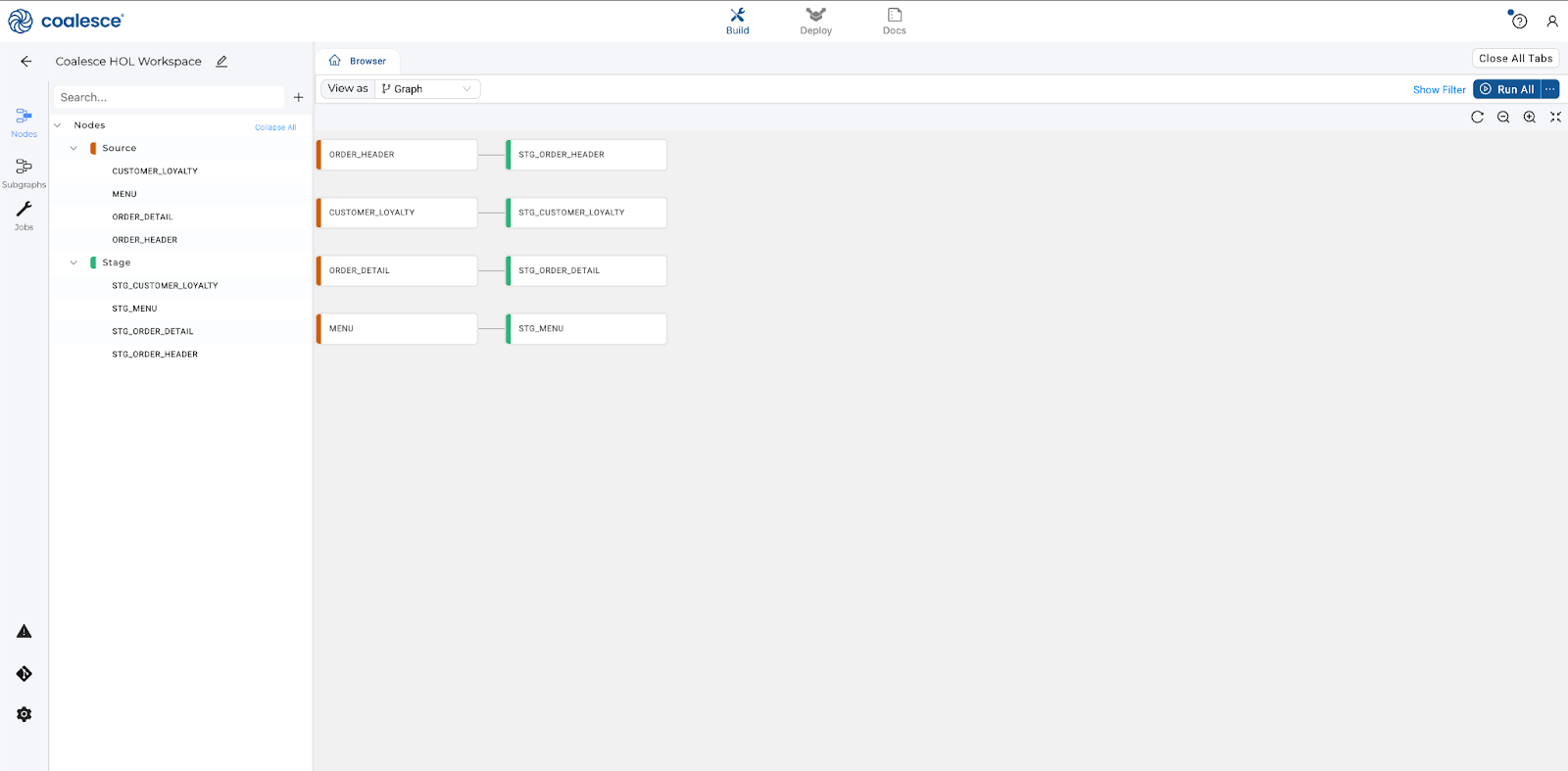

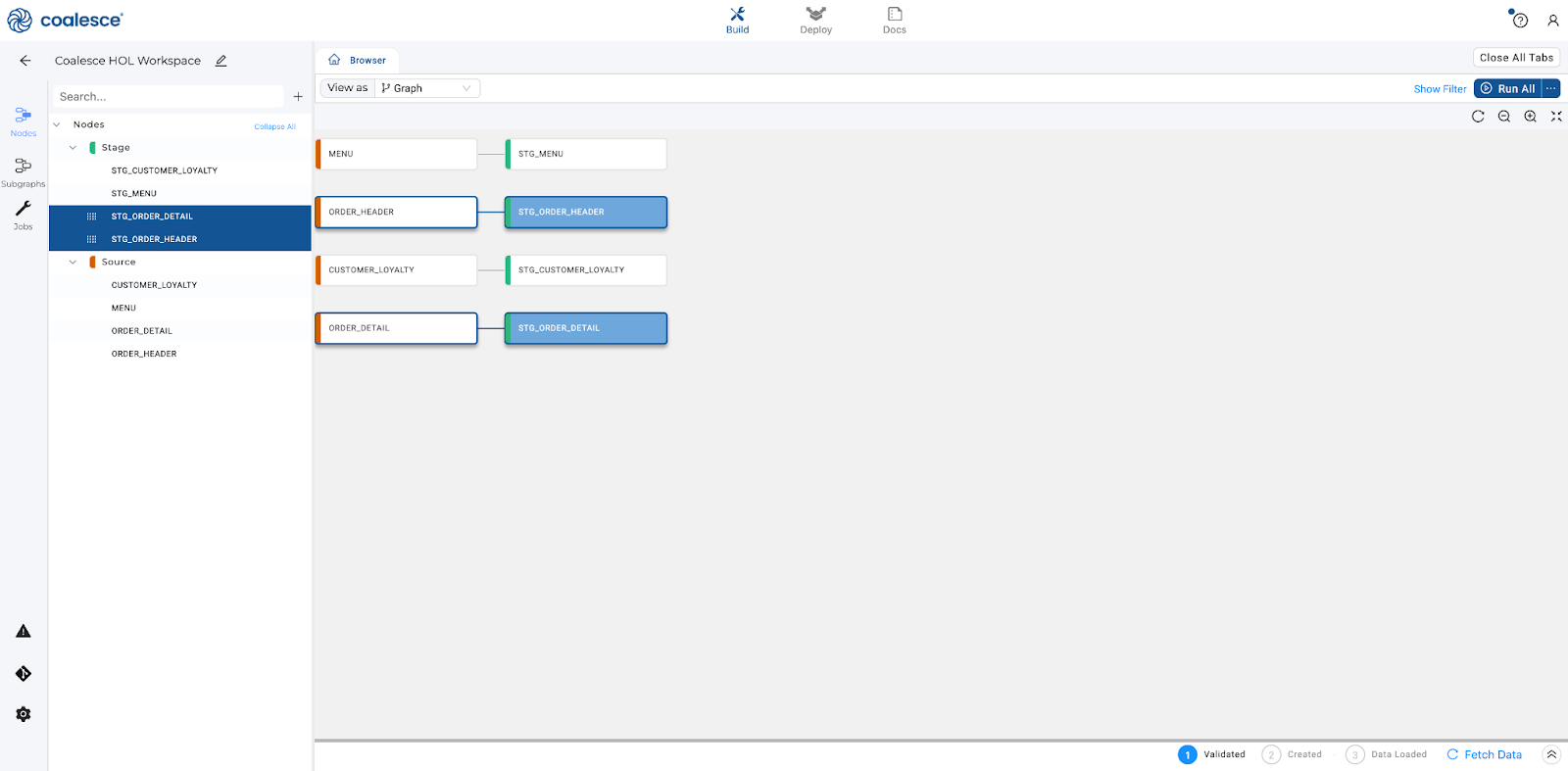

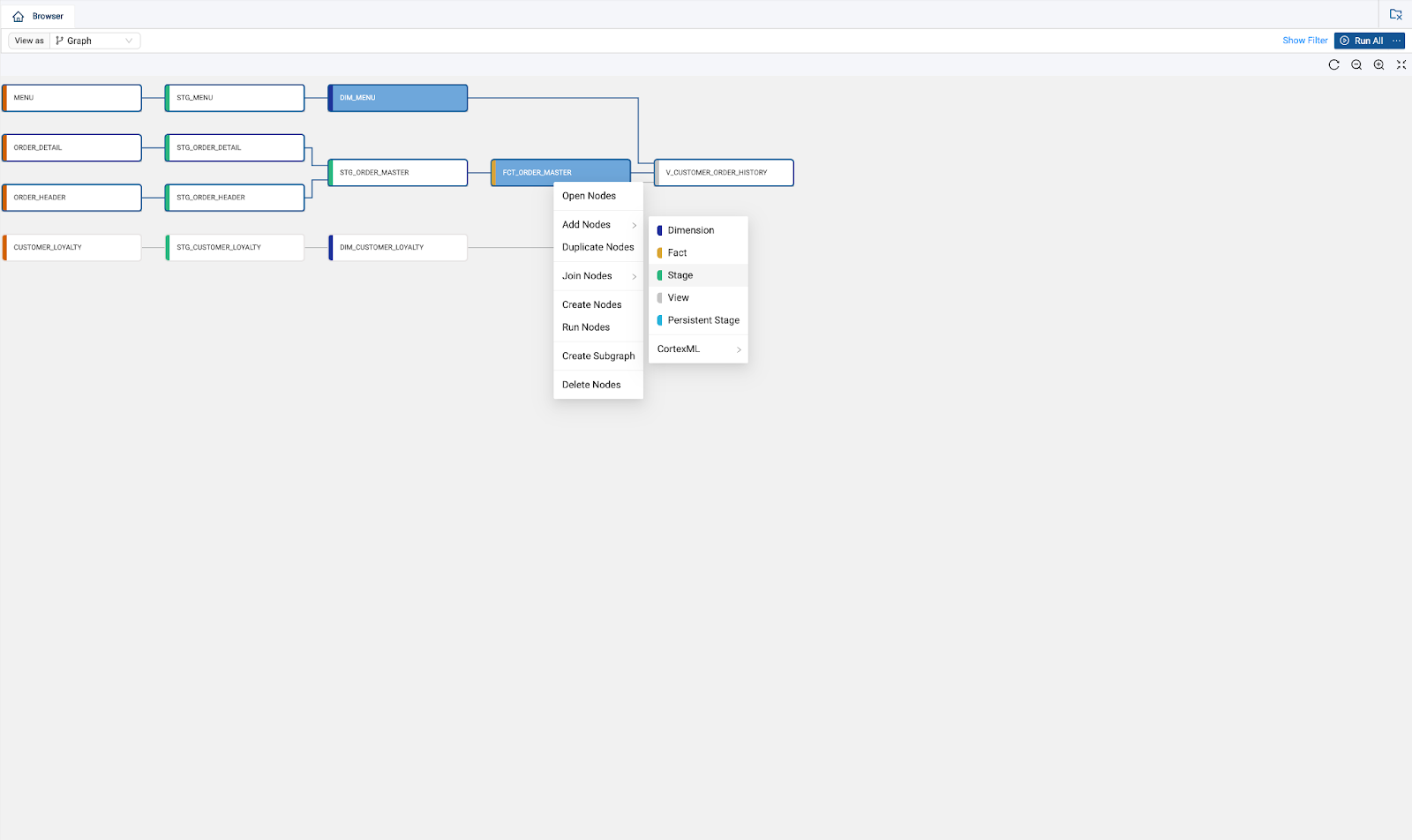

Now that you've added your Source nodes, let's prepare the data by adding business logic with Stage nodes. Stage nodes represent staging tables within Snowflake where transformations can be previewed and performed. Let's start by adding a standardized "stage layer" for all sources.

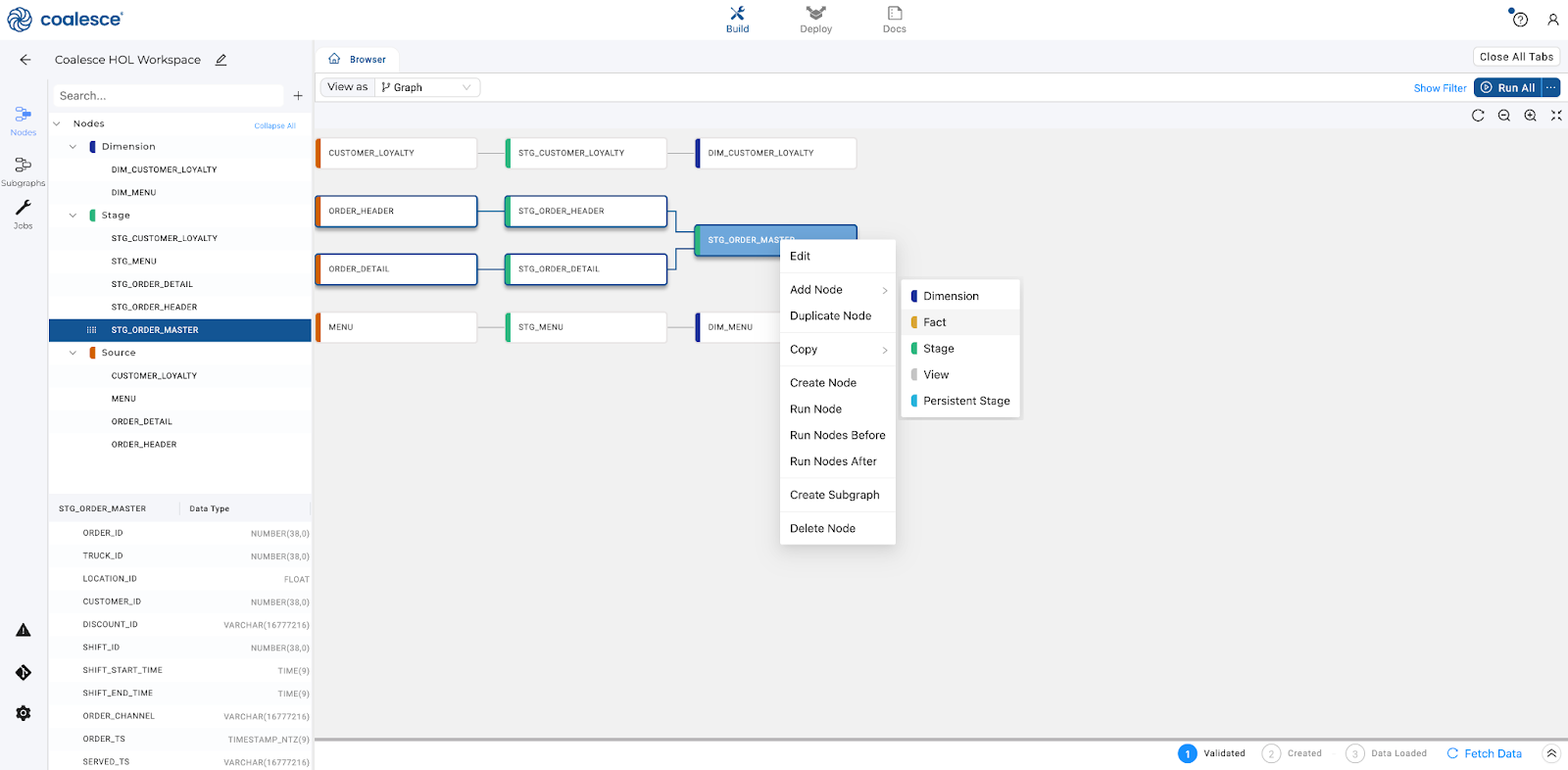

- Press and hold the Shift key to multi-select all of your Source nodes from the node pane on the left side of your screen. Then right click and select Add Node > Stage from the drop down menu. You will now see that all of your Source nodes have corresponding Stage tables associated with them. If the 14M/38M data sources are being used, drop that suffix in the Node name i.e.

STG_ORDER_HEADER

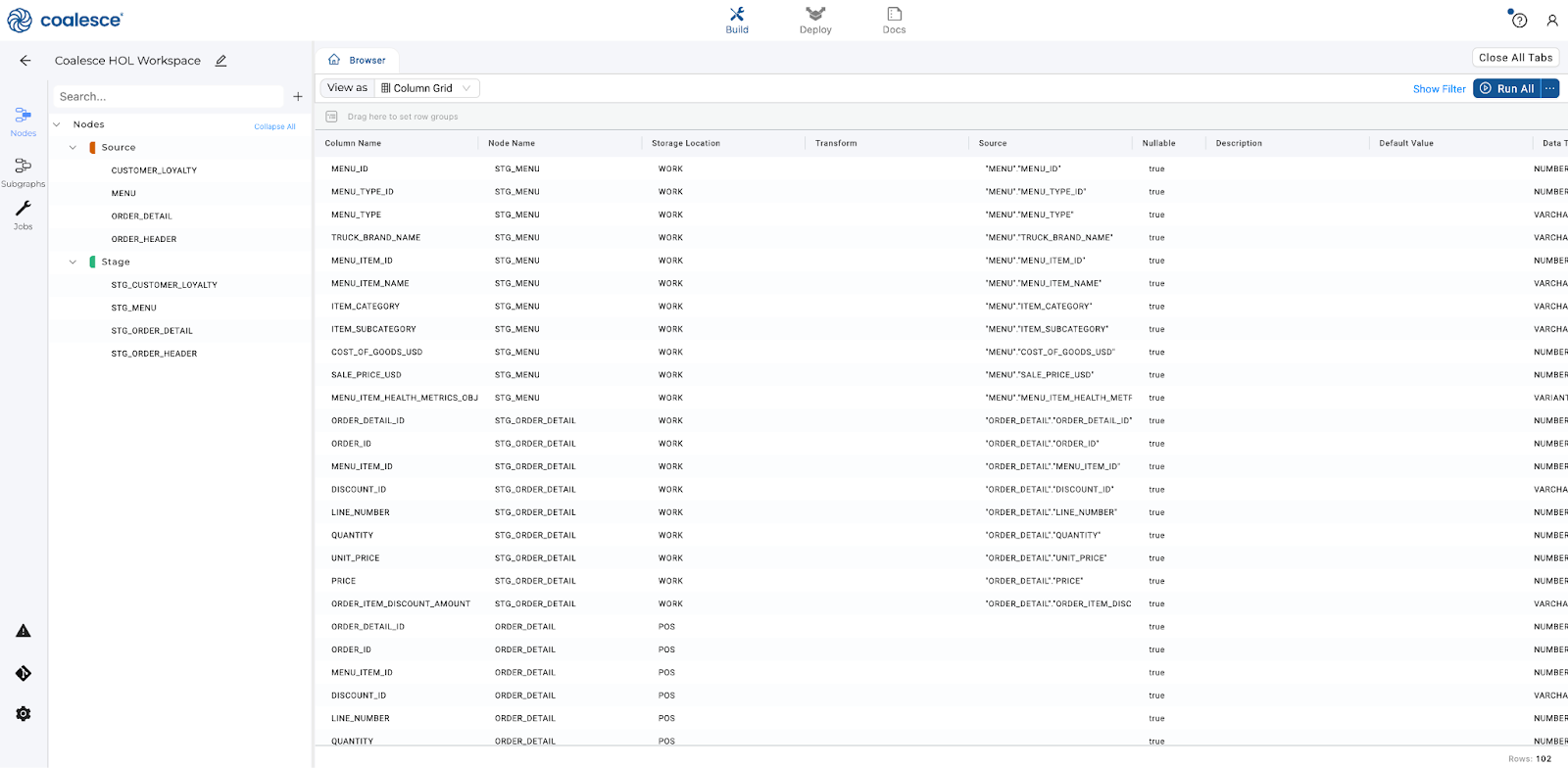

- Now change the Graph to a Column Grid by using the top left dropdown menu. By using the Column Grid view, you can search and group by different column headers.

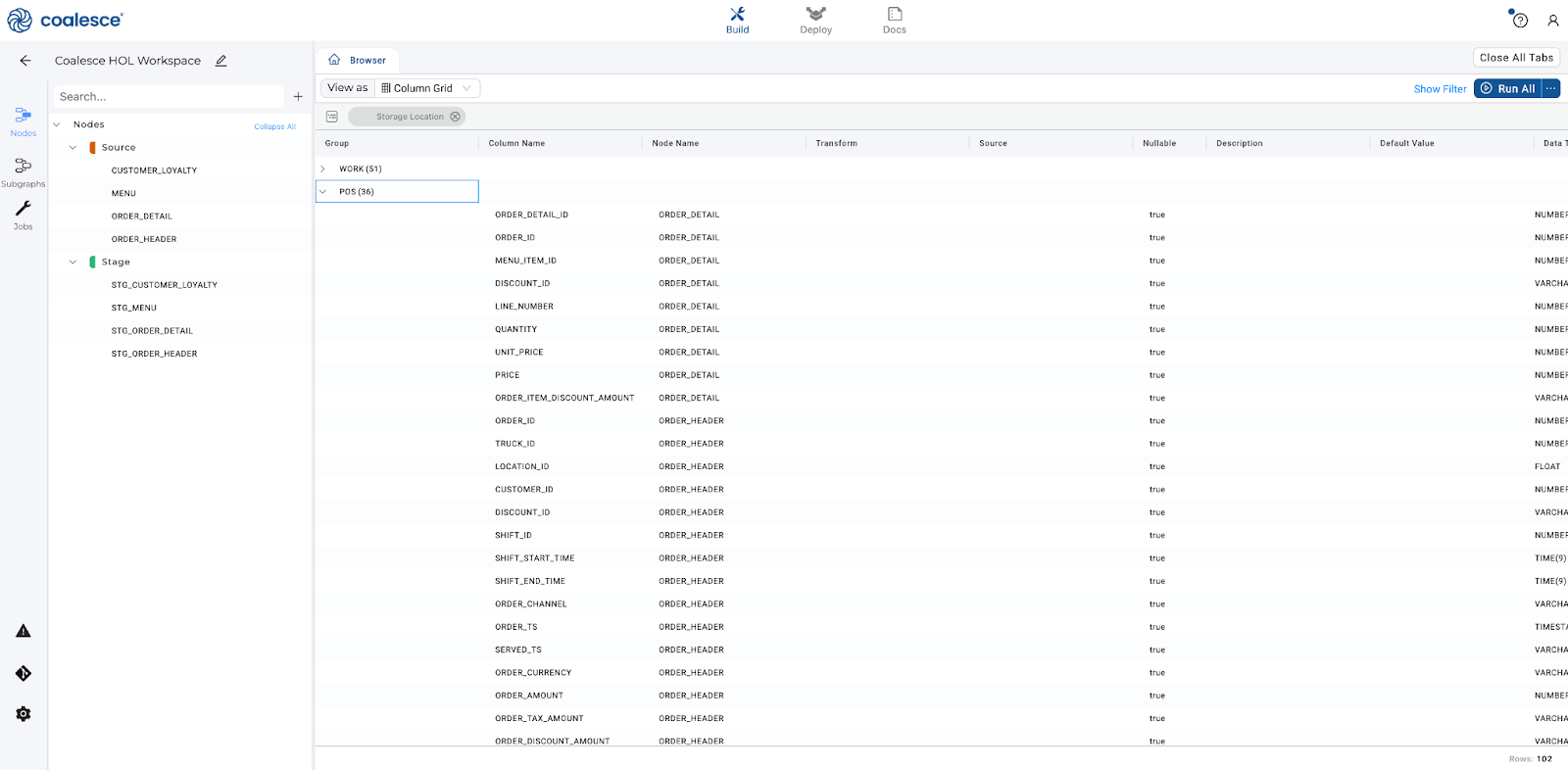

- Click on the Storage Location header and drag it to the sorting bar below the View As dropdown menu. You have now grouped your rows by Storage Location. Then expand all the columns from your

POSlocation.

- Change back to the Graph view from Column Grid to with the dropdown menu.

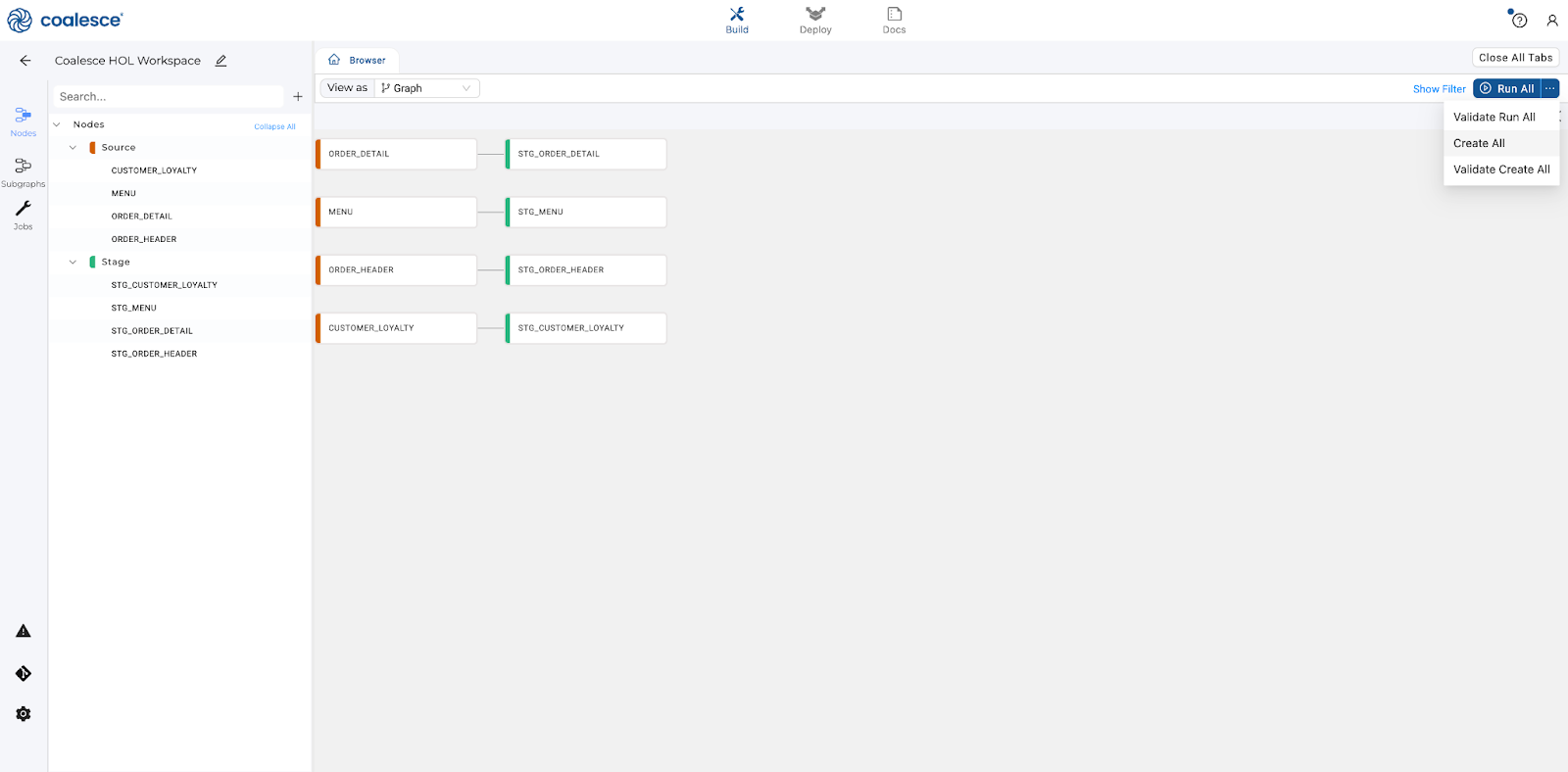

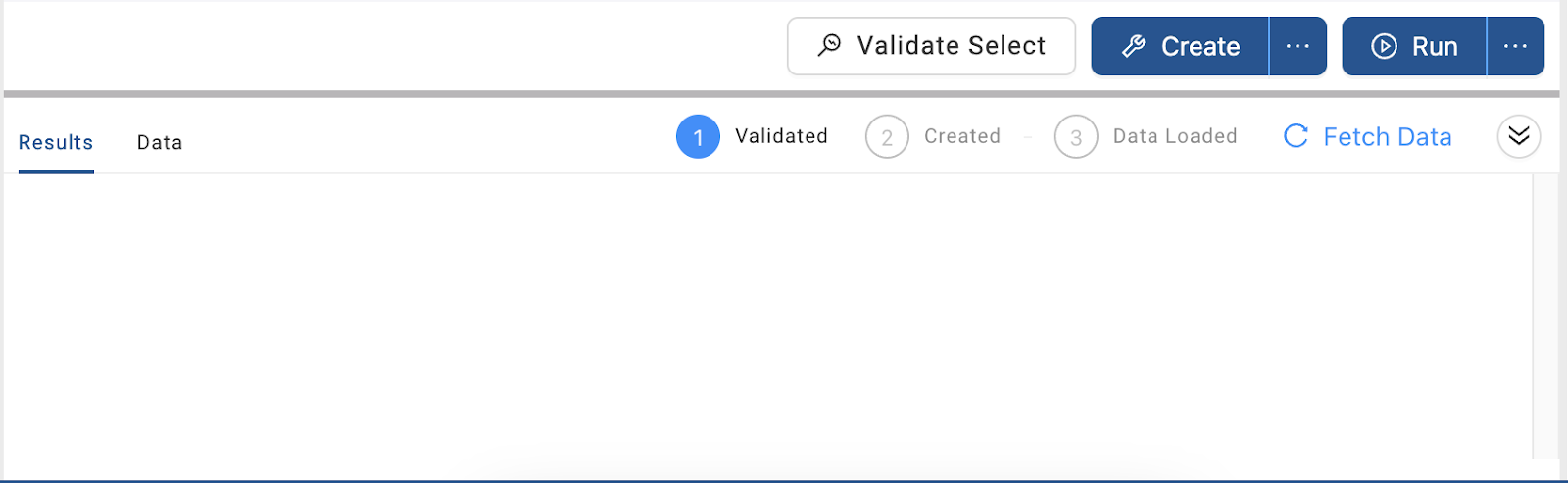

- Now let's create the foundation for your data pipeline. In the upper right hand corner, press the Create All button to create your tables in Snowflake. Then click the Run All button to populate your nodes with data.

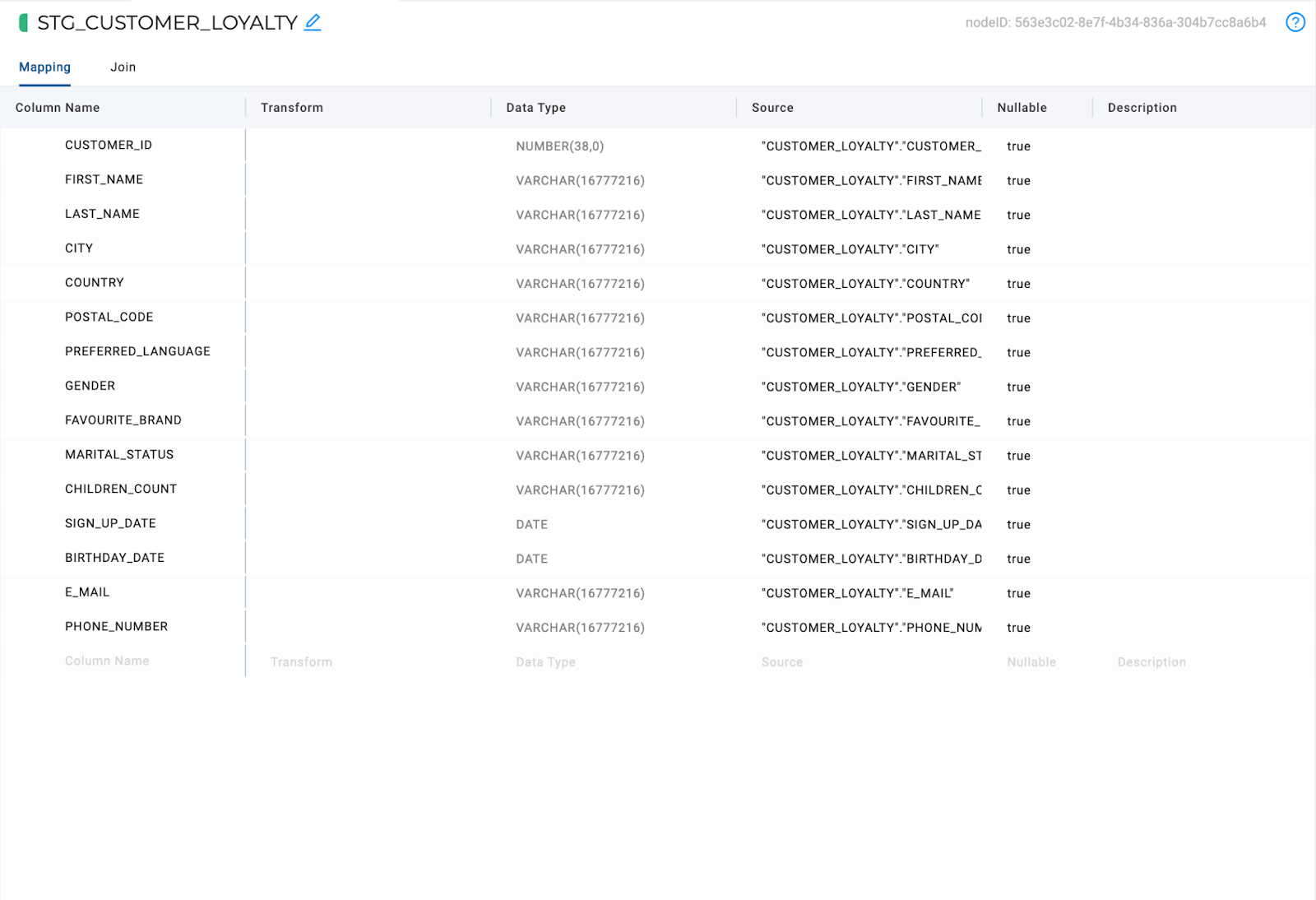

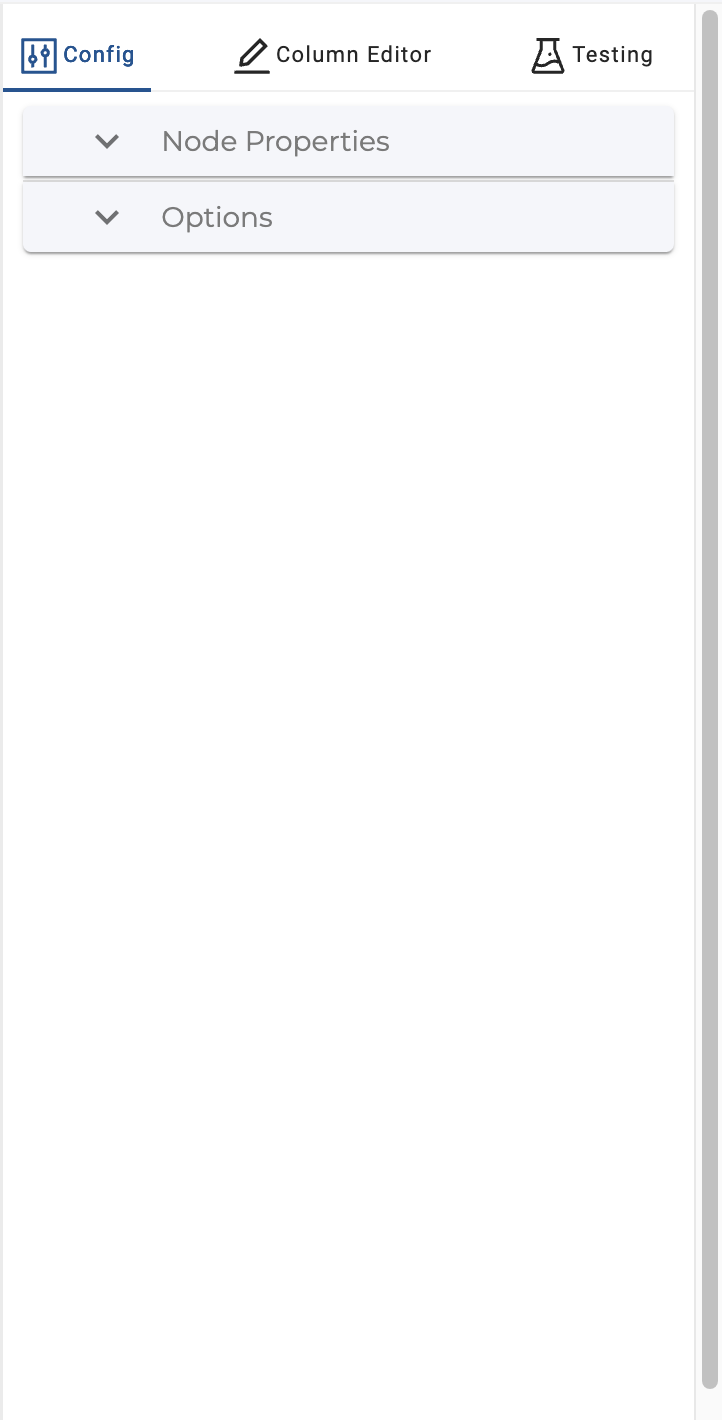

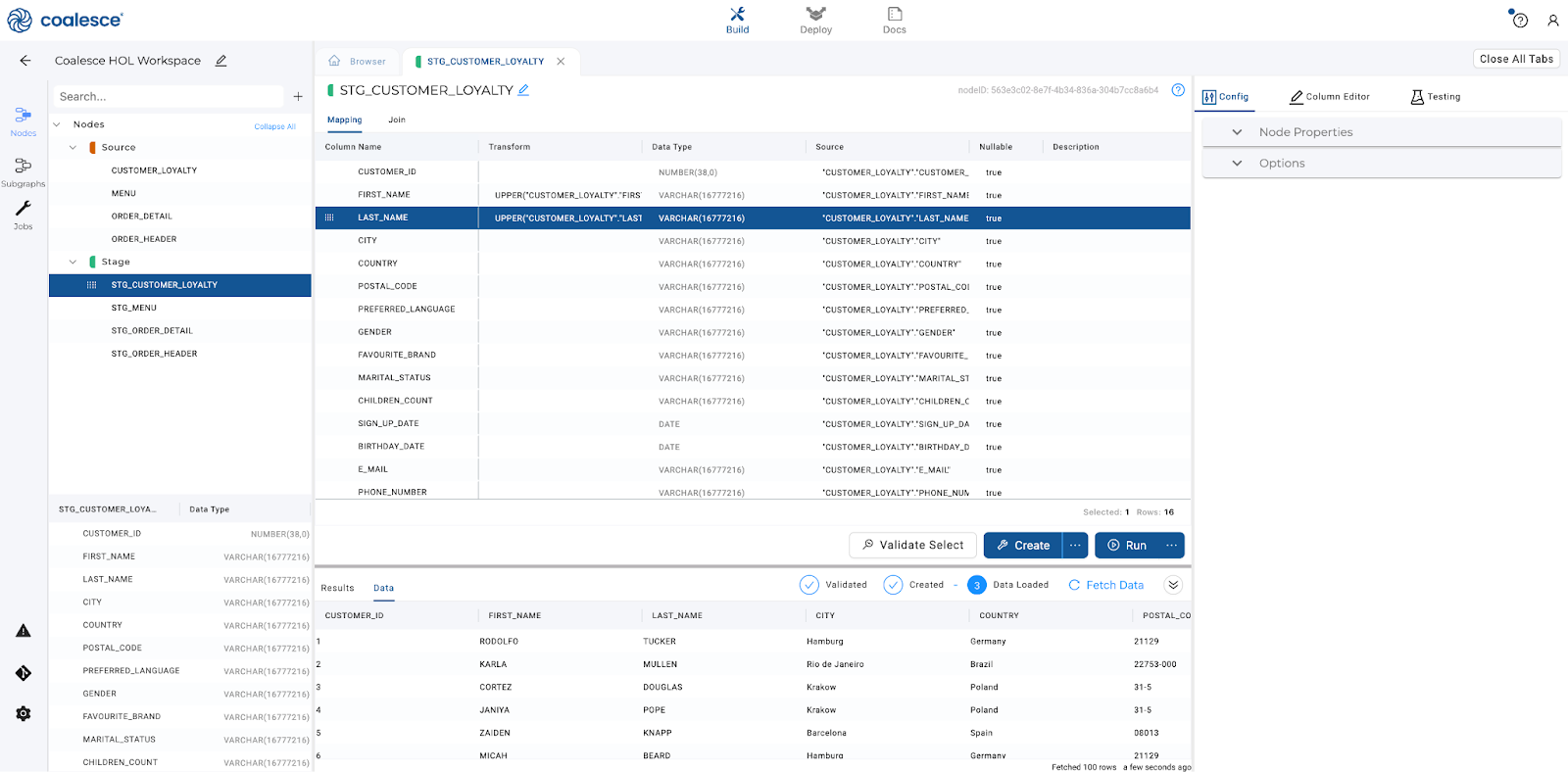

Your Node Editor is used to edit the node object, the columns within it, and its relationship to other nodes. There are a few different components to the Node Editor.

- Double click on your

STG_CUSTOMER_LOYALTYnode to open up your Node Editor. The large middle section is your Mapping grid, where you can see the structure of your node along with column metadata like transformations, data types, and sources.

- On the right hand side of the Node Editor is the Config section, where you can view and set configurations based on the type of node you're using.

- At the bottom of the Node Editor, press the arrow button to view the Data Preview pane.

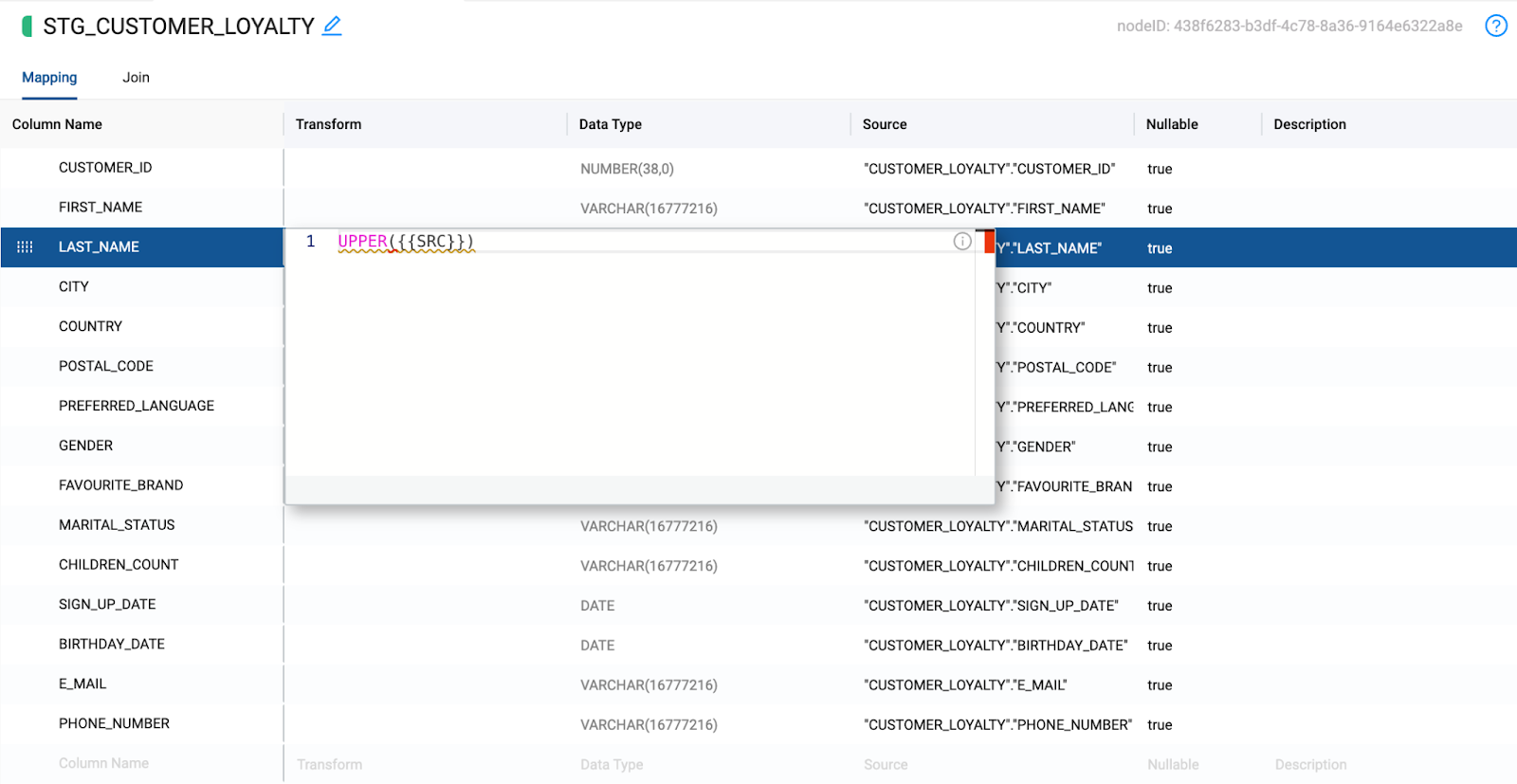

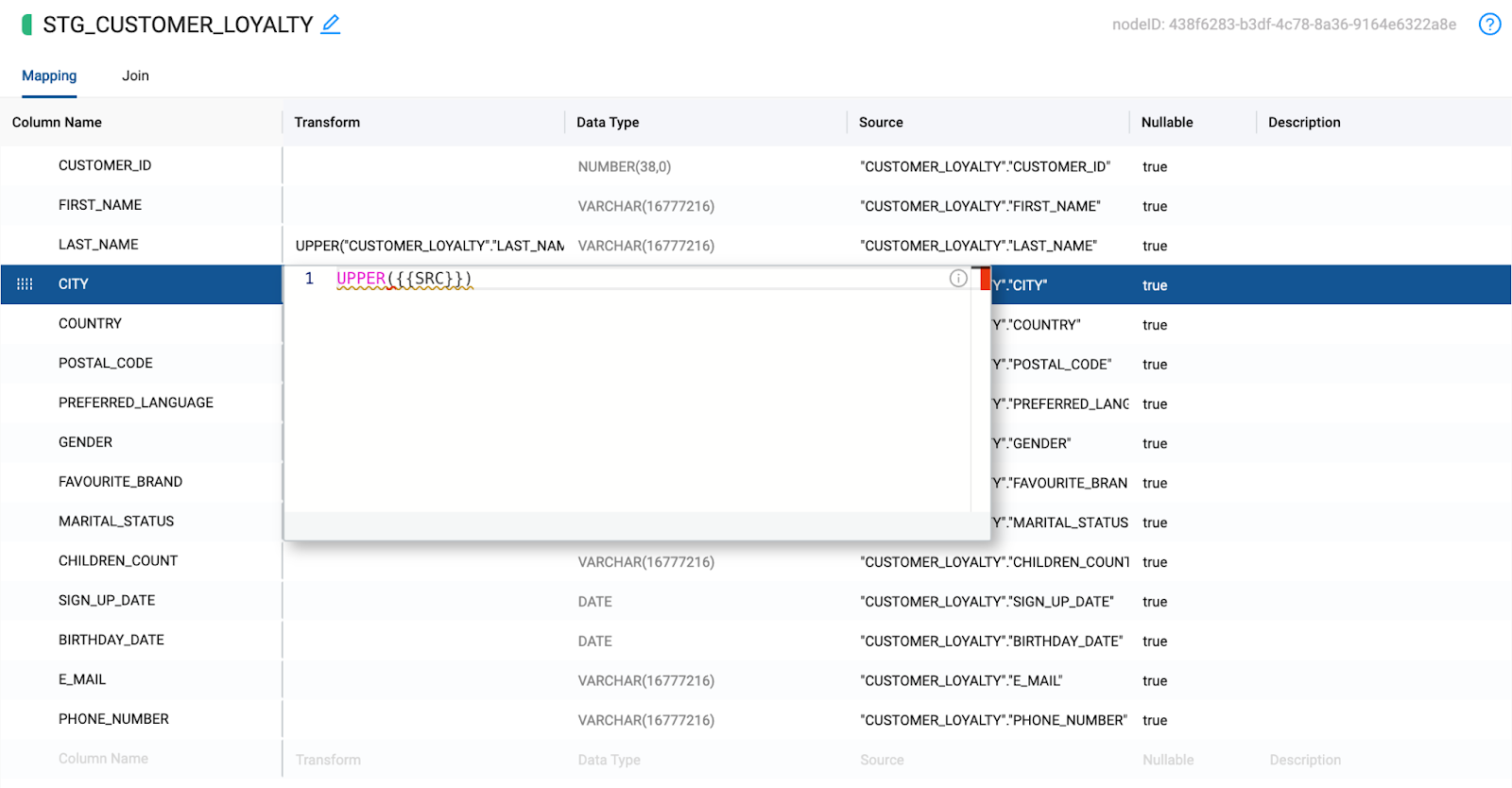

Now let's apply a single column transformation in your STG_CUSTOMER_LOYALTY node by applying a consistent naming convention to our customer names. This will ensure that any customer names are standardized.

- Select the

FIRST_NAMEcolumn and click the blank Transform field in the Mapping grid. Enter the following transformation and then press Enter:

UPPER({{SRC}})

- Repeat this transformation for your

LAST_NAMEcolumn:

UPPER({{SRC}})

- Click the Create and Run buttons to create and repopulate your node.

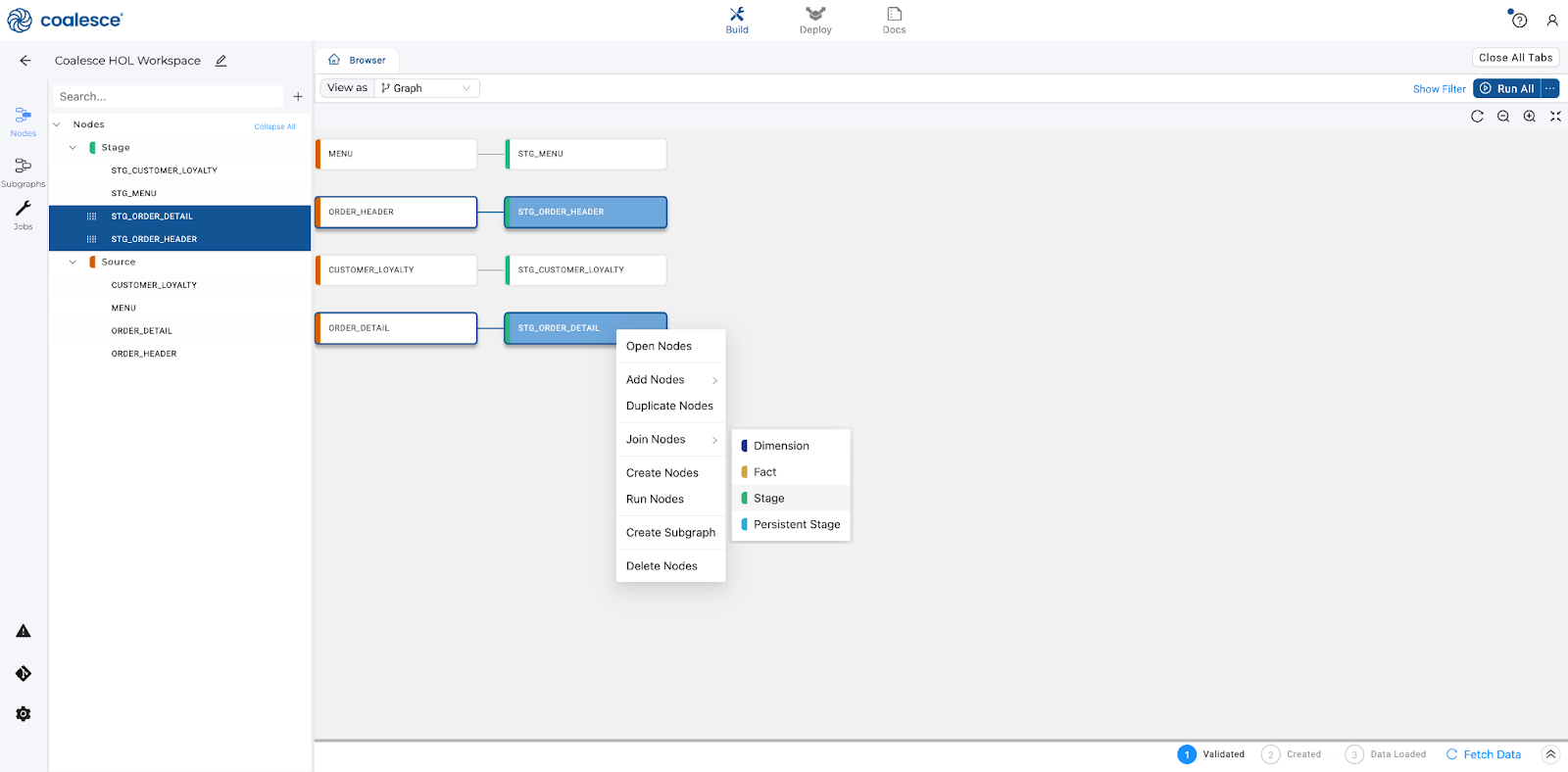

In order to prepare the data within your data pipeline, it needs to be processed more with an additional stage layer.

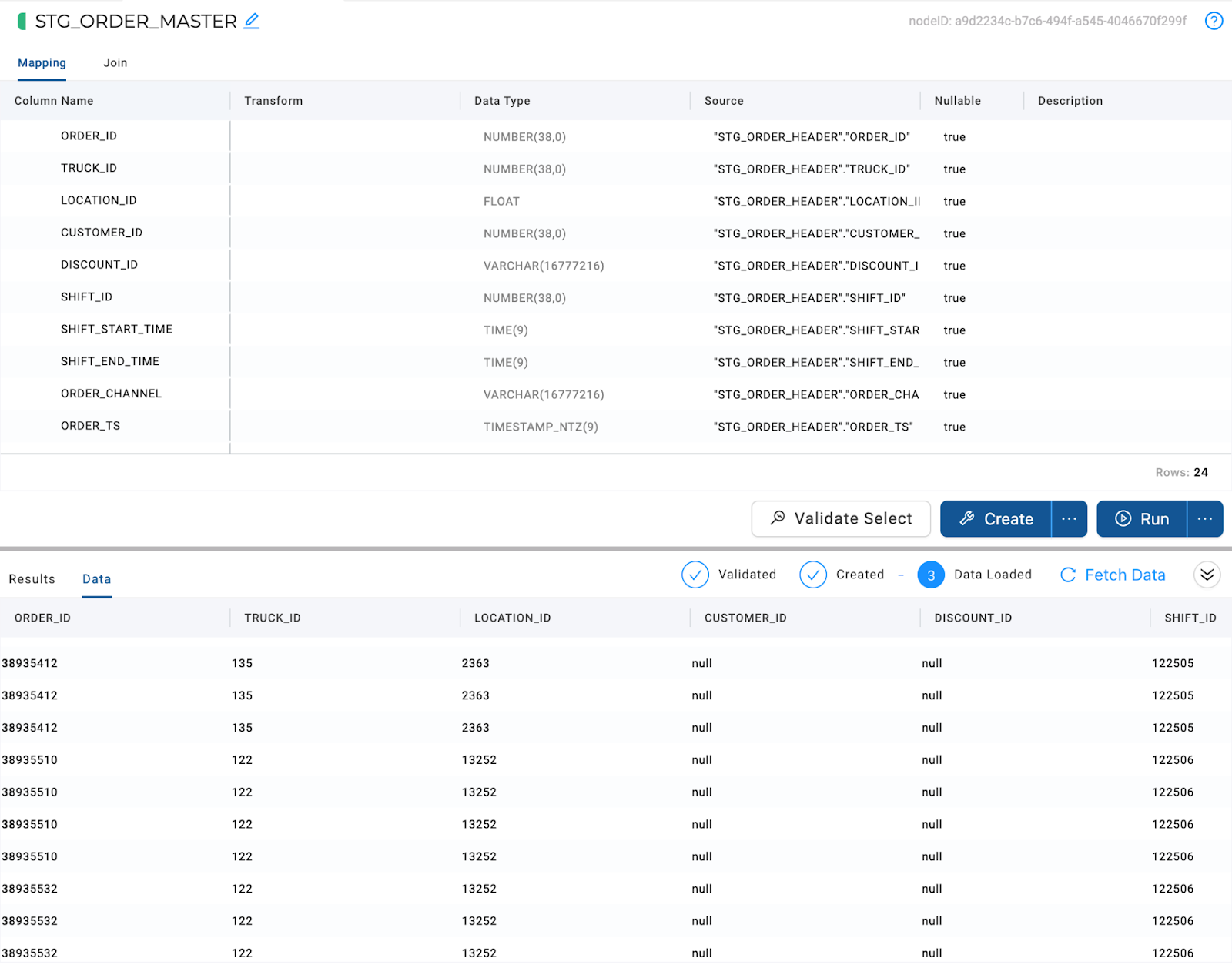

- Return to the Browser tab and select your

STG_ORDER_HEADERdata with yourSTG_ORDER_DETAILdata by holding down the Shift key and clicking on both nodes.

- Right click on either node and select Join Nodes, and then select Stage. This will bring you to the node editor of your new Stage node.

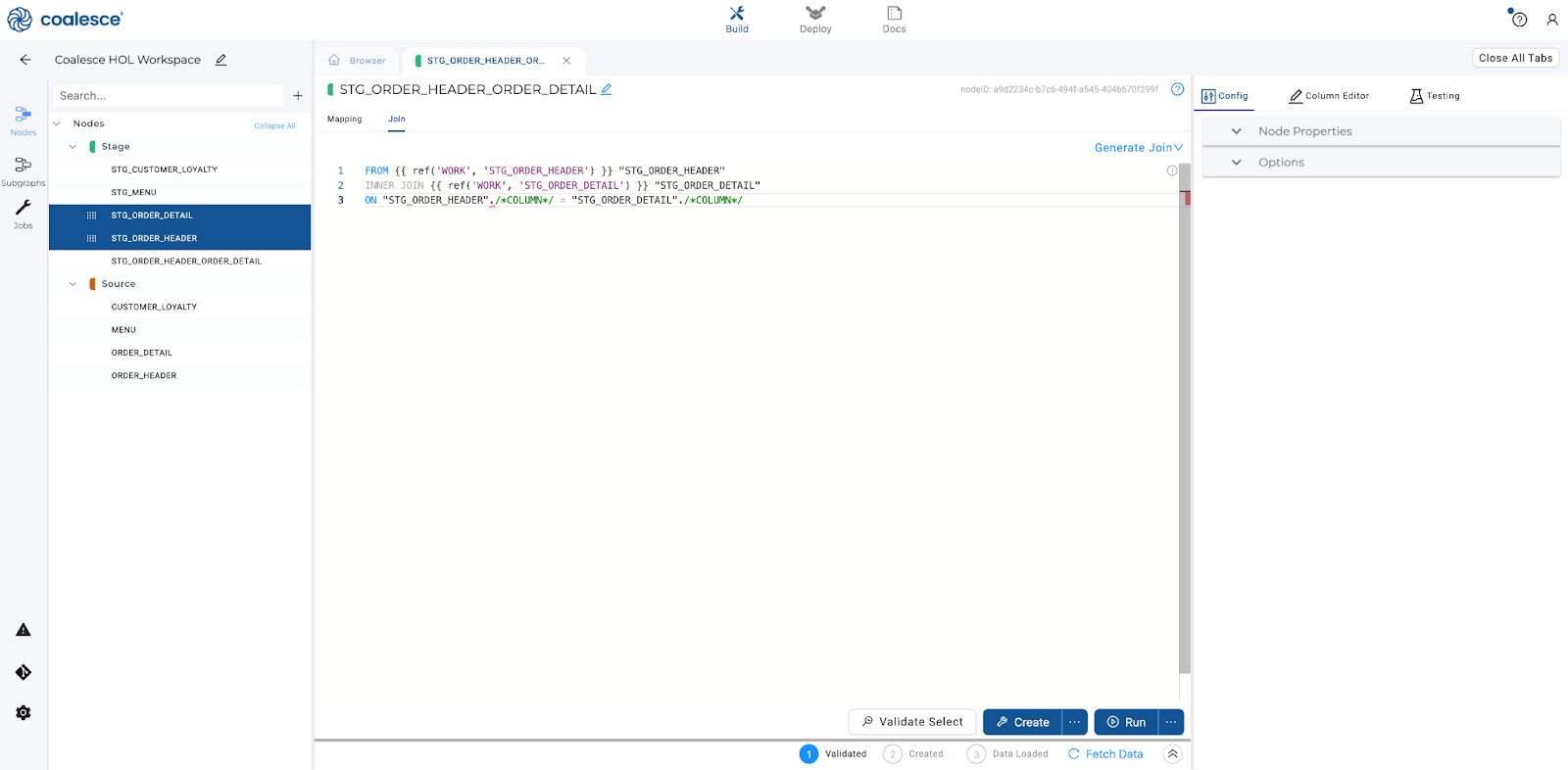

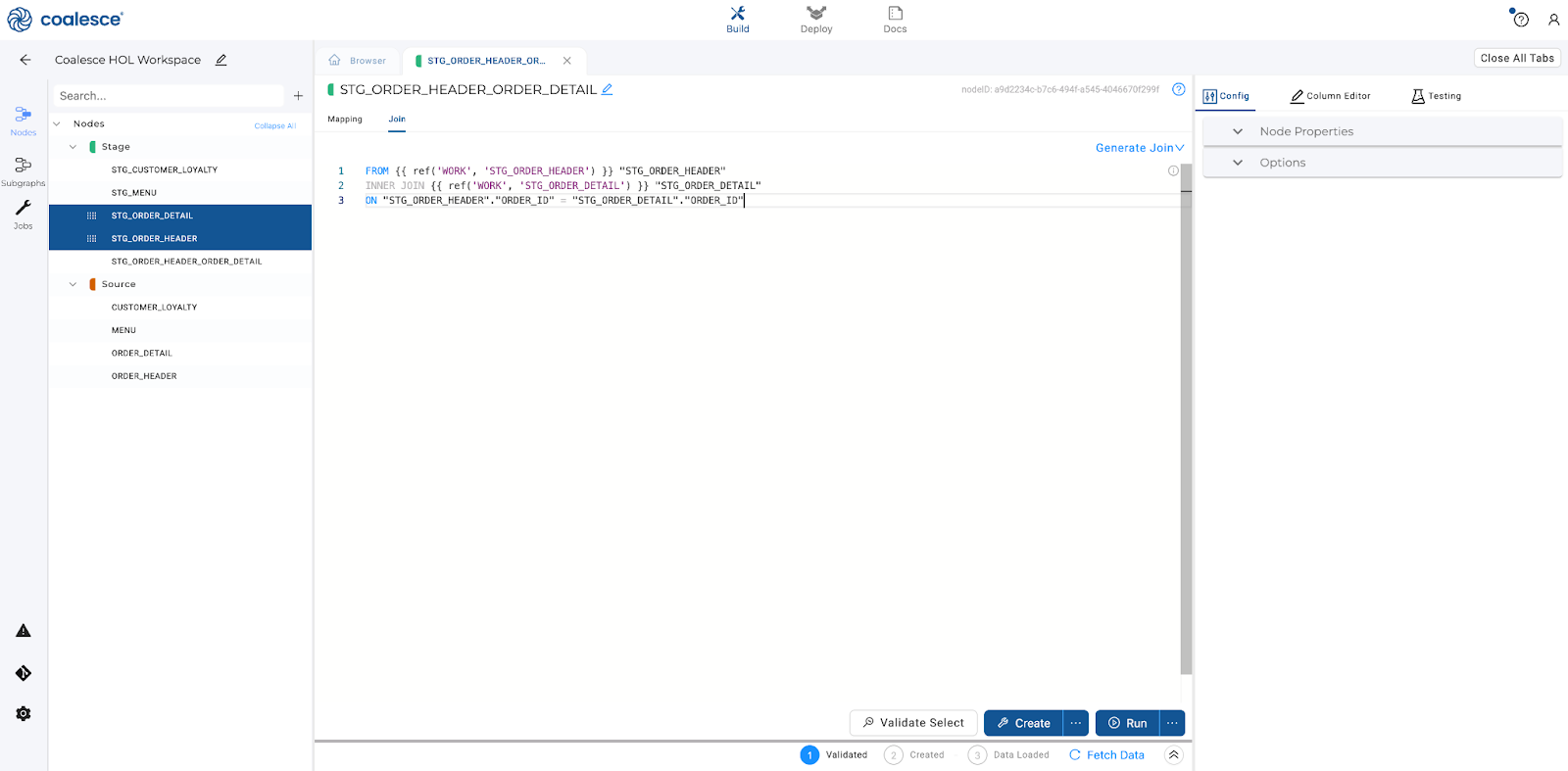

- Select the Join tab in the Node Editor. This is where you can define the logic to join data from multiple nodes, with the help of a generated Join statement.

- Add column relationships in the last line of the existing Join statement using the bolded code below:

FROM {{ ref('WORK', 'STG_ORDER_HEADER') }} "STG_ORDER_HEADER"

INNER JOIN {{ ref('WORK', 'STG_ORDER_DETAIL') }} "STG_ORDER_DETAIL"

ON "STG_ORDER_HEADER"."ORDER_ID" = "STG_ORDER_DETAIL"."ORDER_ID"

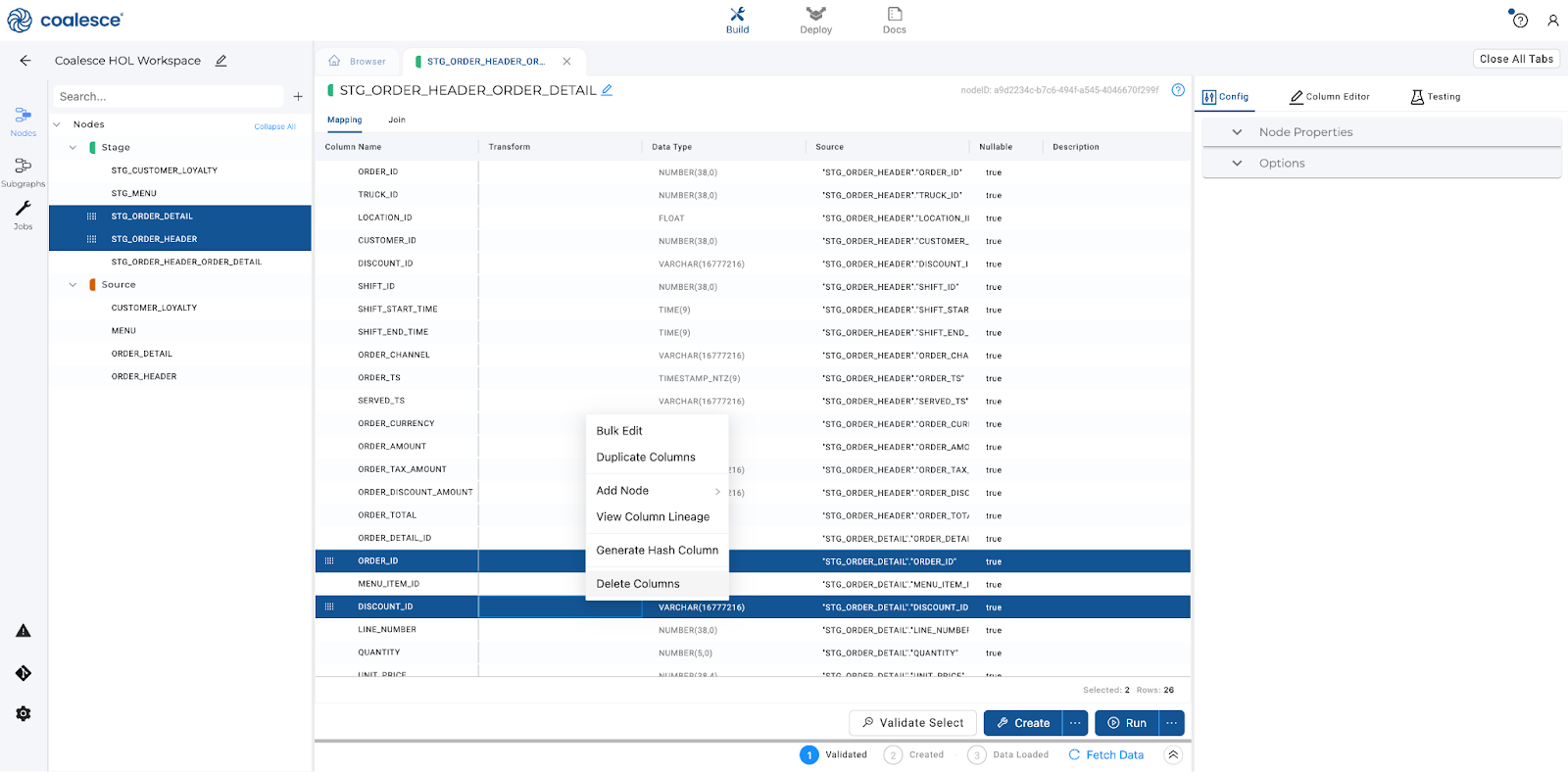

- Since both nodes contain a

ORDER_IDandDISCOUNT_IDcolumns, we will need to rename or remove one of the columns to avoid getting a duplicate column name error. Let's delete theORDER_IDandDISCOUNT_IDfrom theSTG_ORDER_DETAIL.

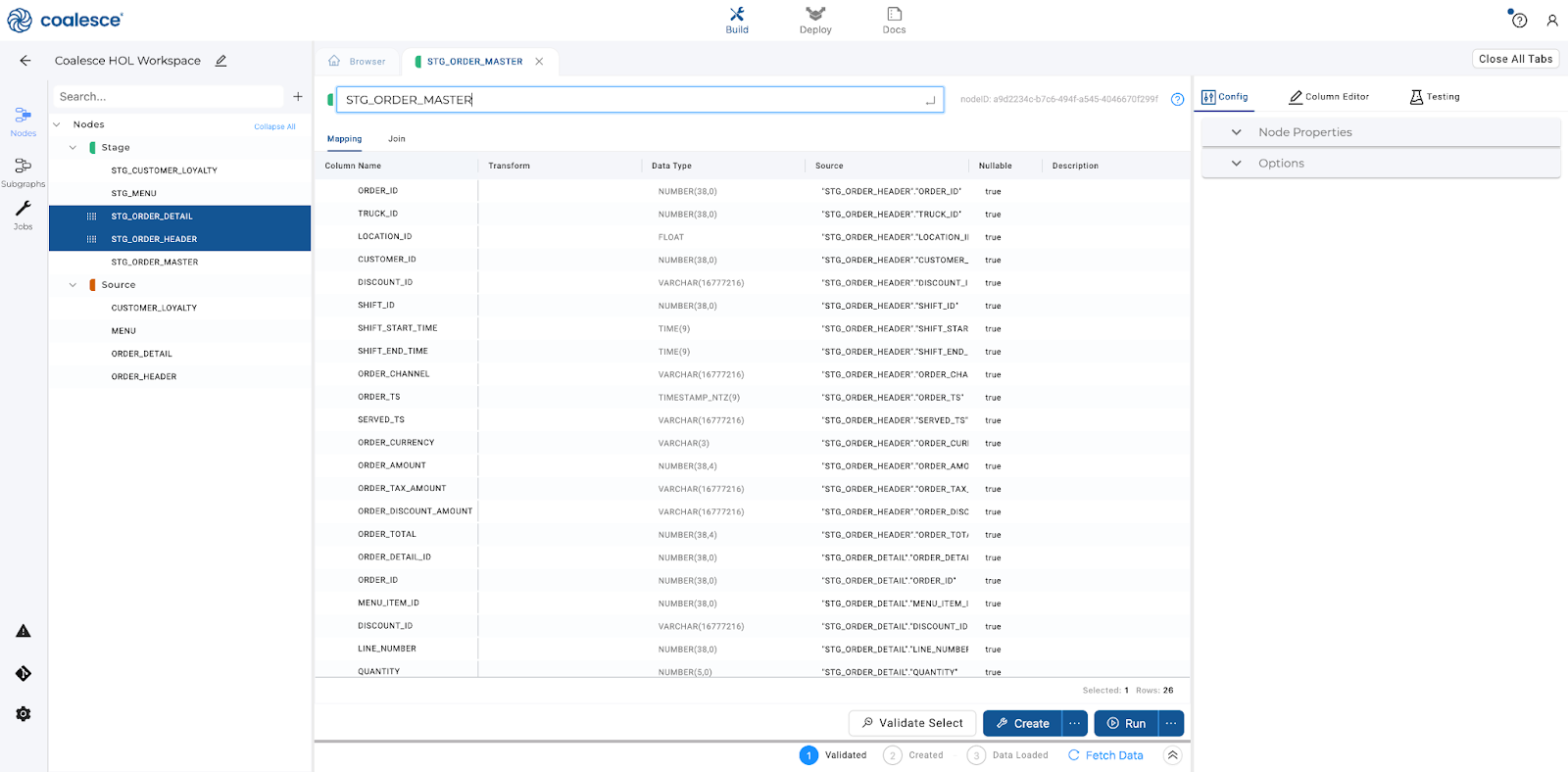

- You can rename the node by clicking on the pencil icon next to the node name and give the node a name of your choice. Rename the node to

STG_ORDER_MASTER.

- To create your Stage Node, click the Create button in the lower half of the screen. Then click the Run button to populate your

STG_ORDER_MASTERnode and preview the contents of your node by clicking Fetch Data.

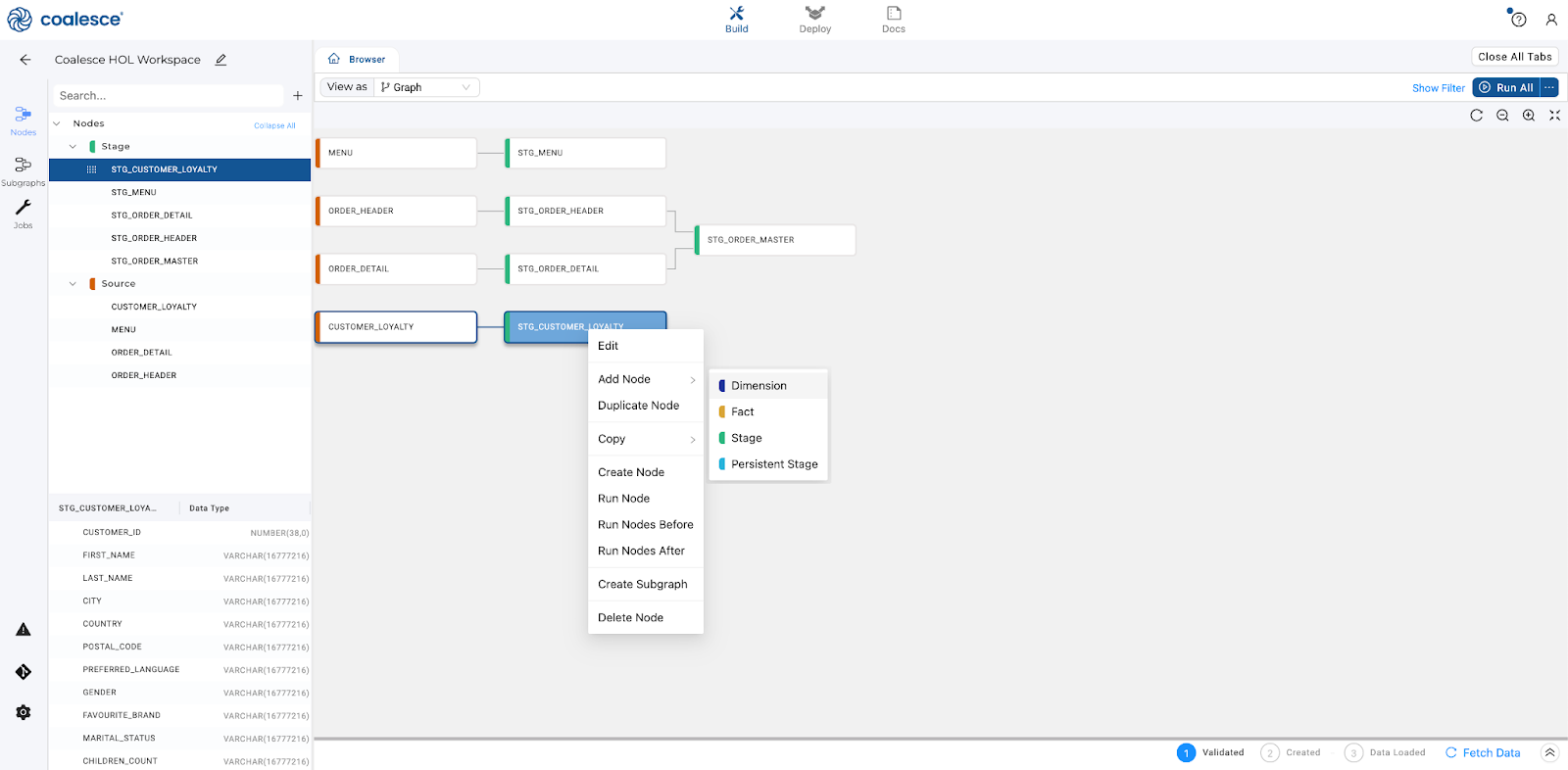

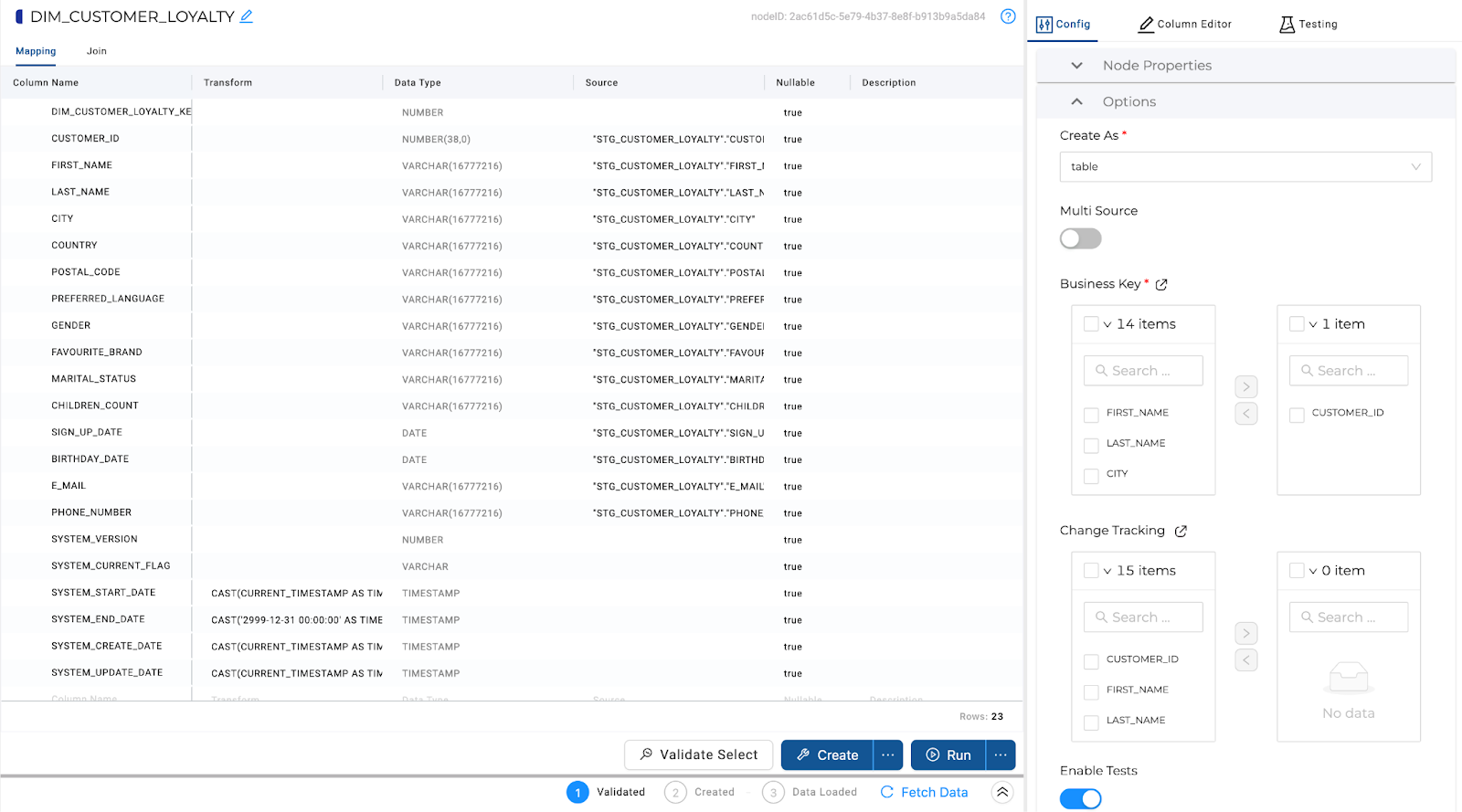

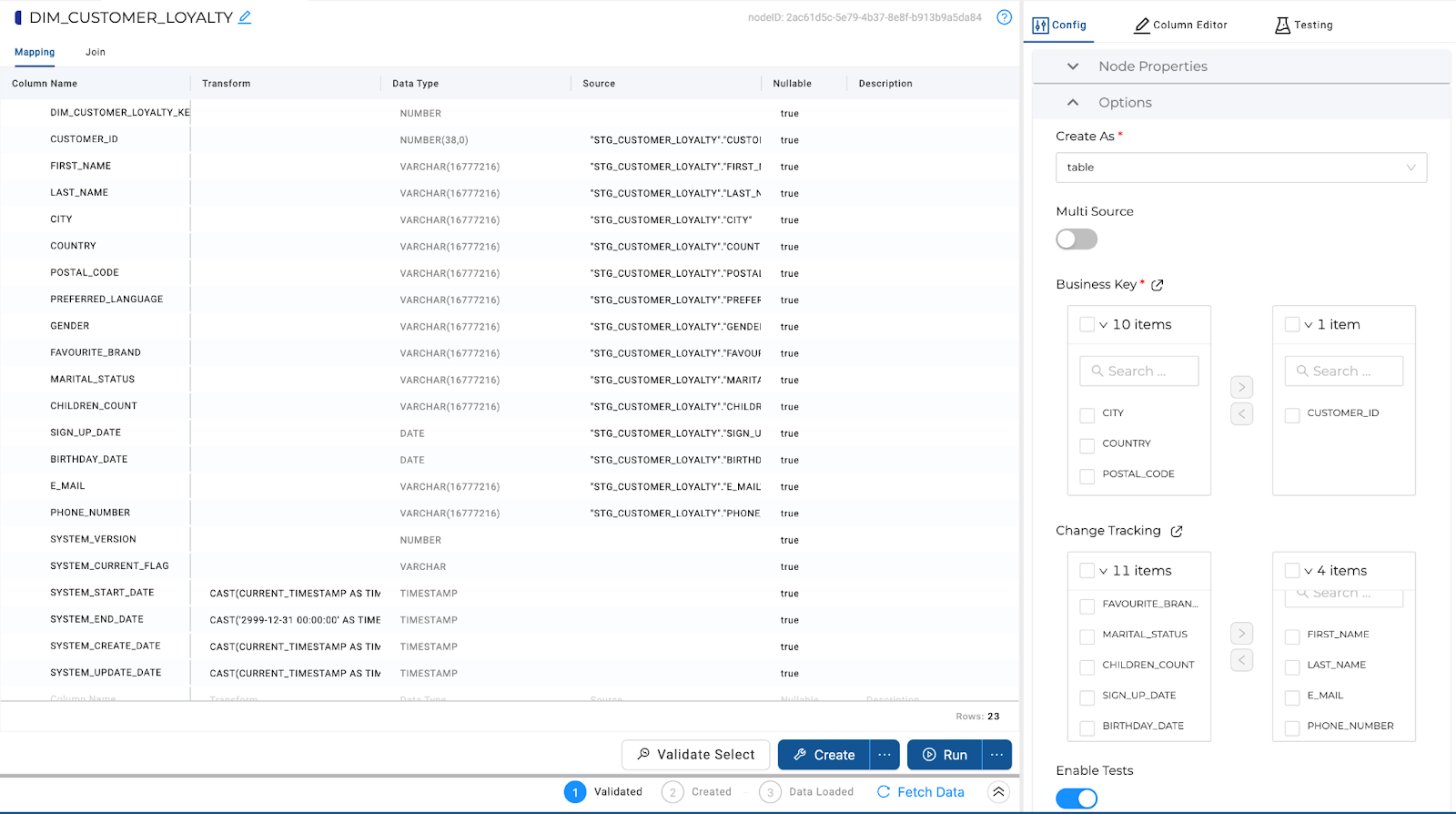

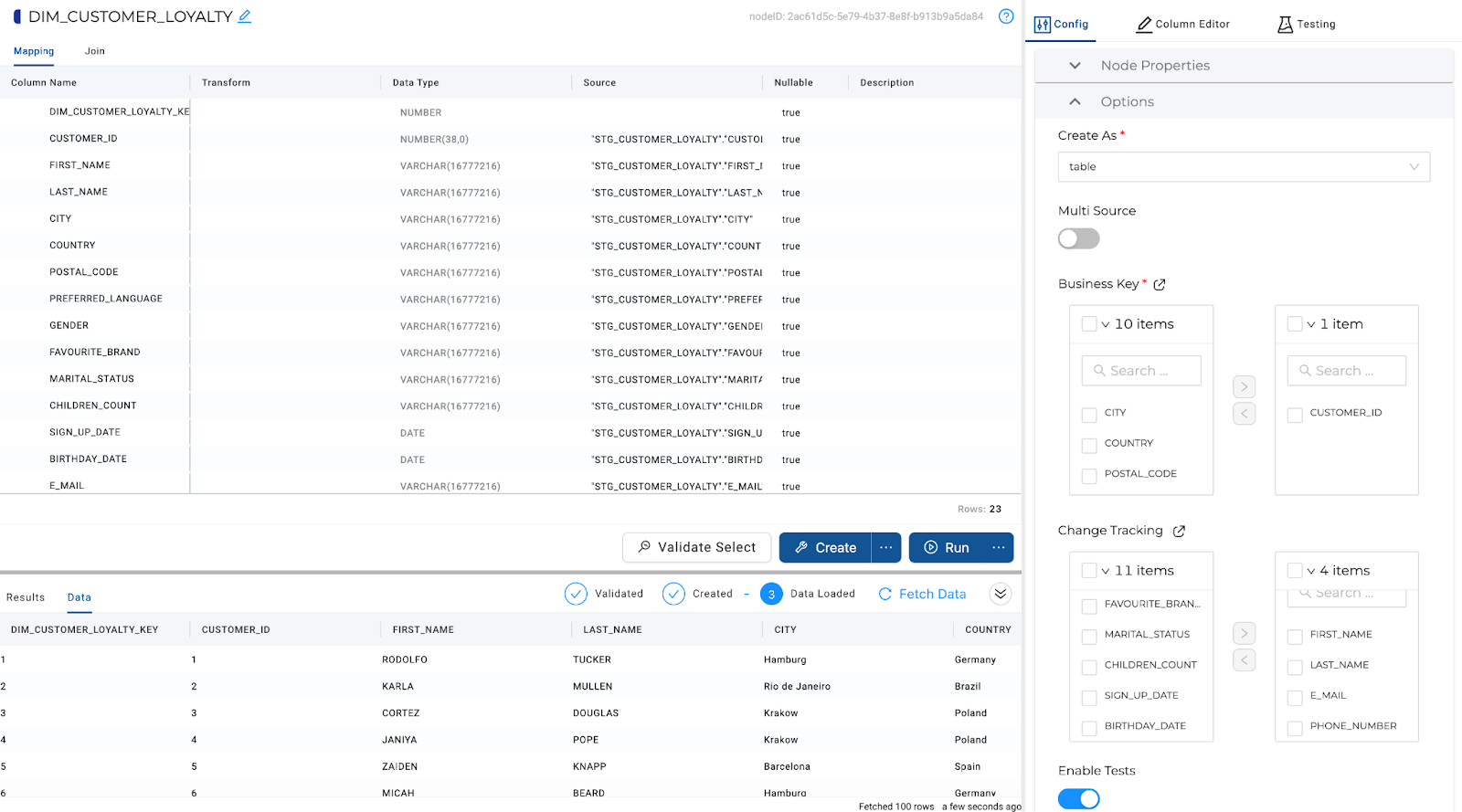

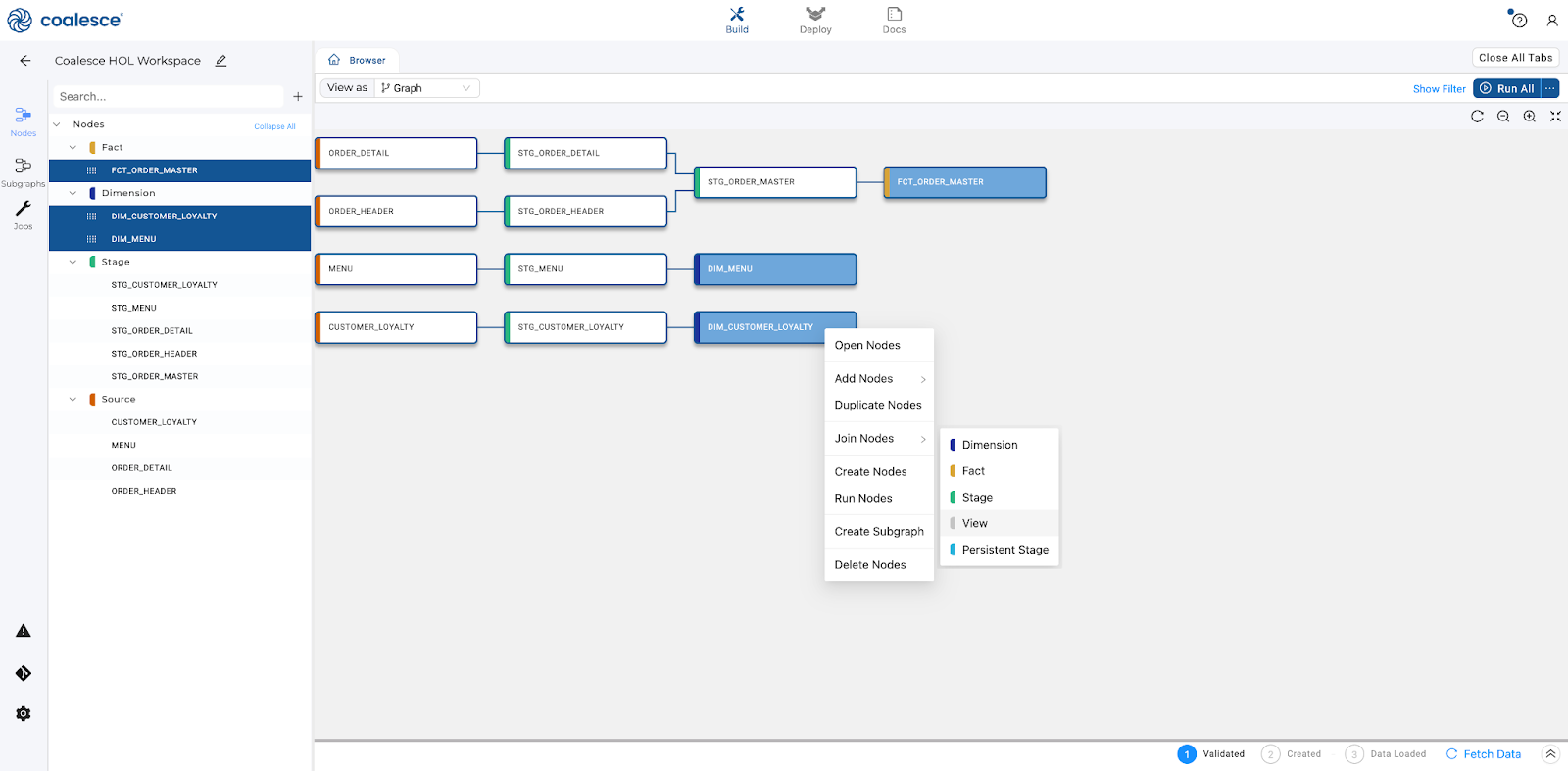

Now let's experiment with creating Dimension nodes. These nodes are generally descriptive in nature and can be used to track particular aspects of data over time (such as time or location). Coalesce currently supports Type 1 and Type 2 slowly changing dimensions, which we will explore in this section.

- Select

STG_CUSTOMER_LOYALTYand right click, create Dimension node. Because the demographics of your customer base changes over time, creating a Dimension node on top of this data will allow you to capture customer changes over time.

- Within the Option configuration section, under Business Key, check the box next to

CUSTOMER_IDand press the > button to select it as your business key.

- To create a Type 2 dimension, select the

FIRST_NAME, LAST_NAME, EMAIL, PHONE_NUMBERcolumns under Change Tracking. This will allow you to track changes to these columns over time.

- In the lower right of your screen, click the Create button to create your Dimension node and then the Run button to populate it with data.

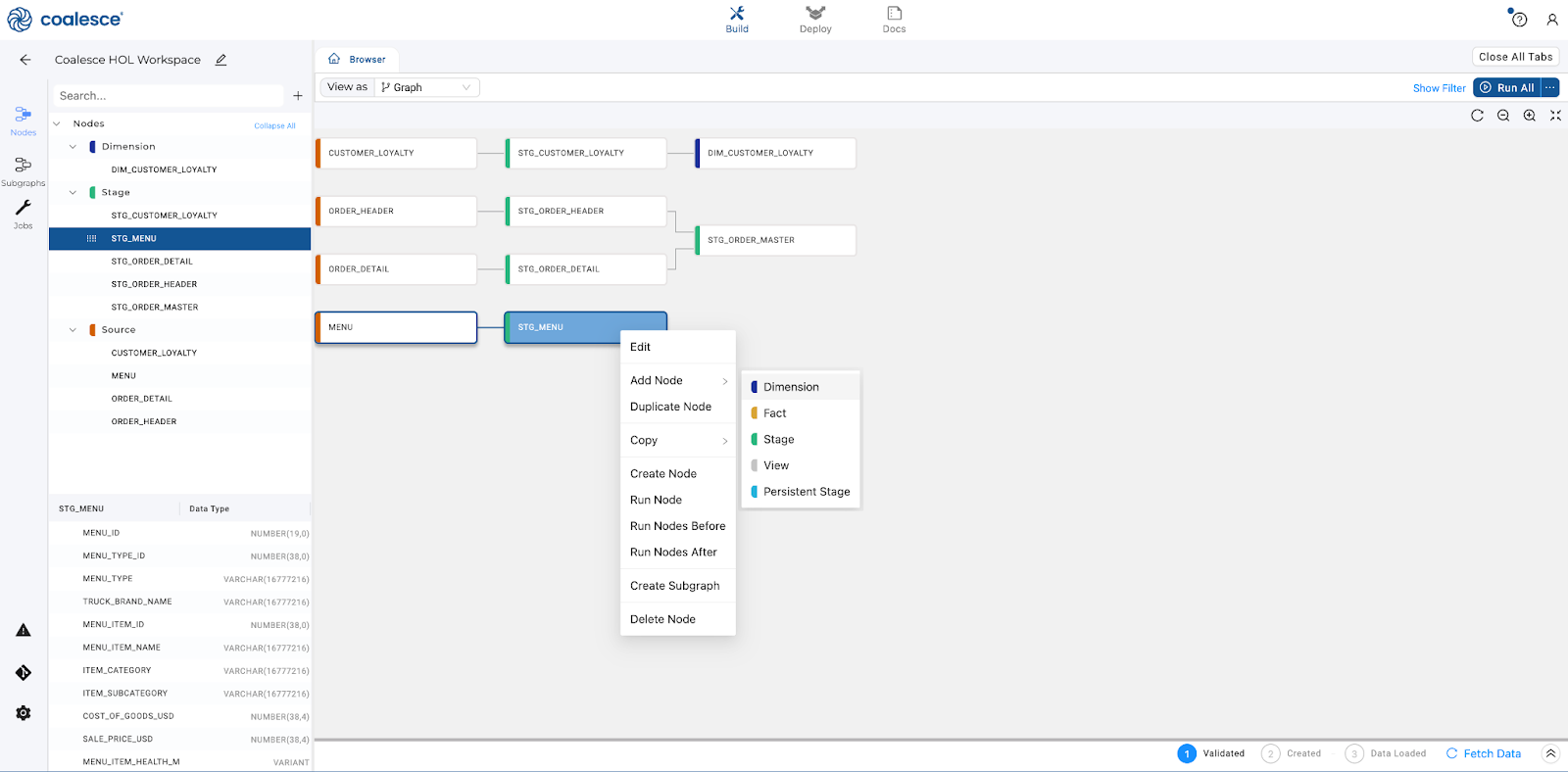

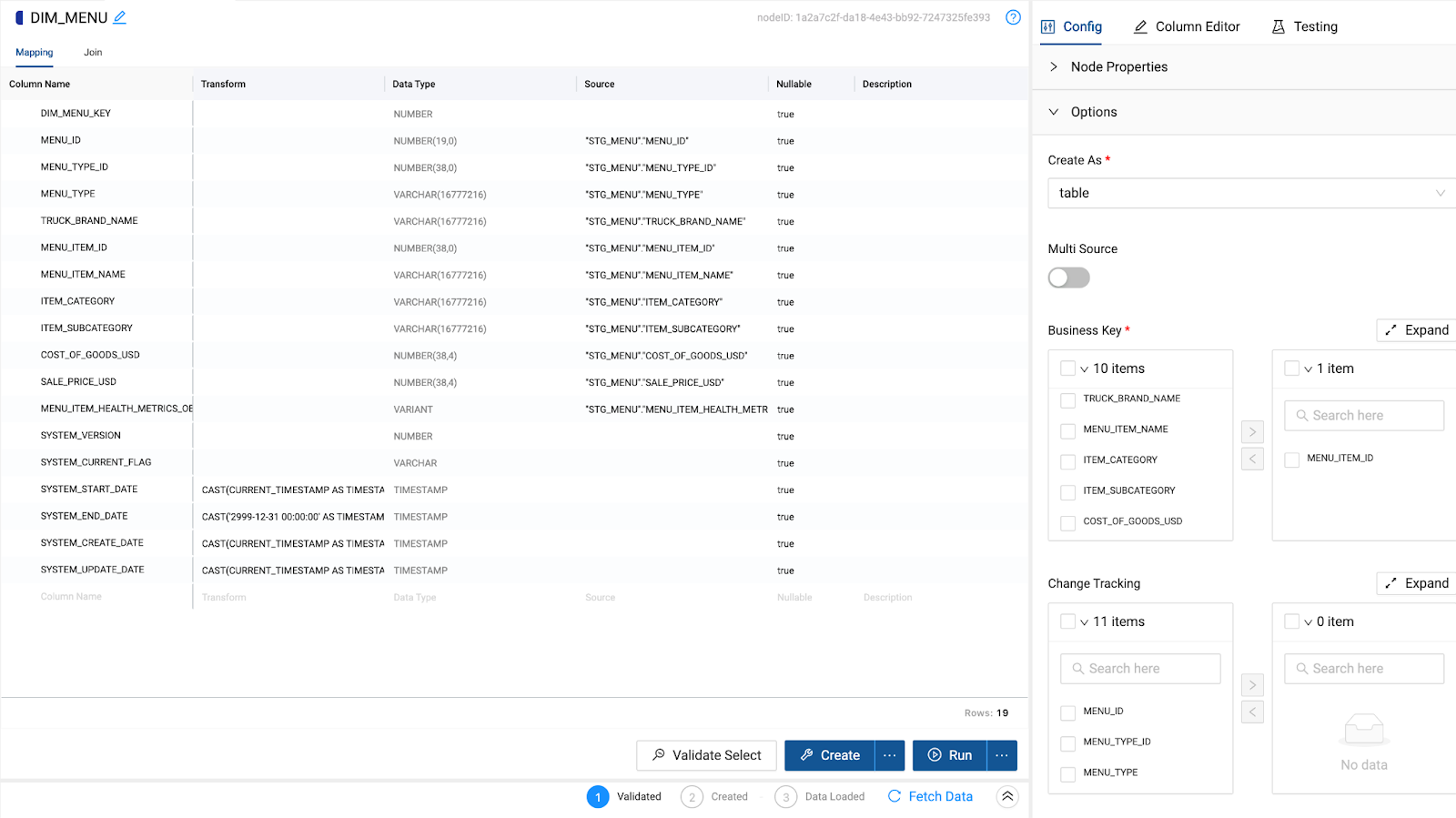

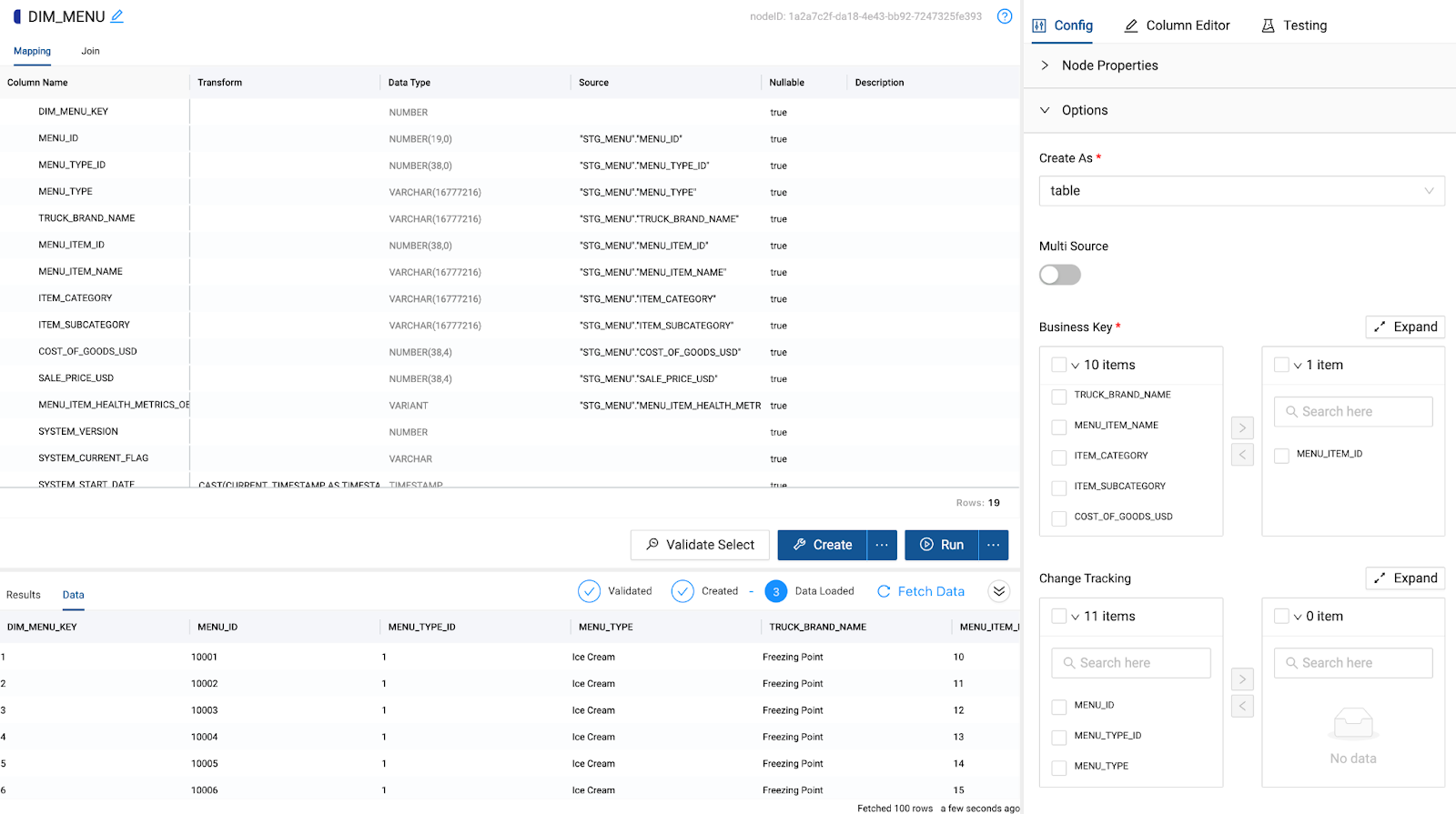

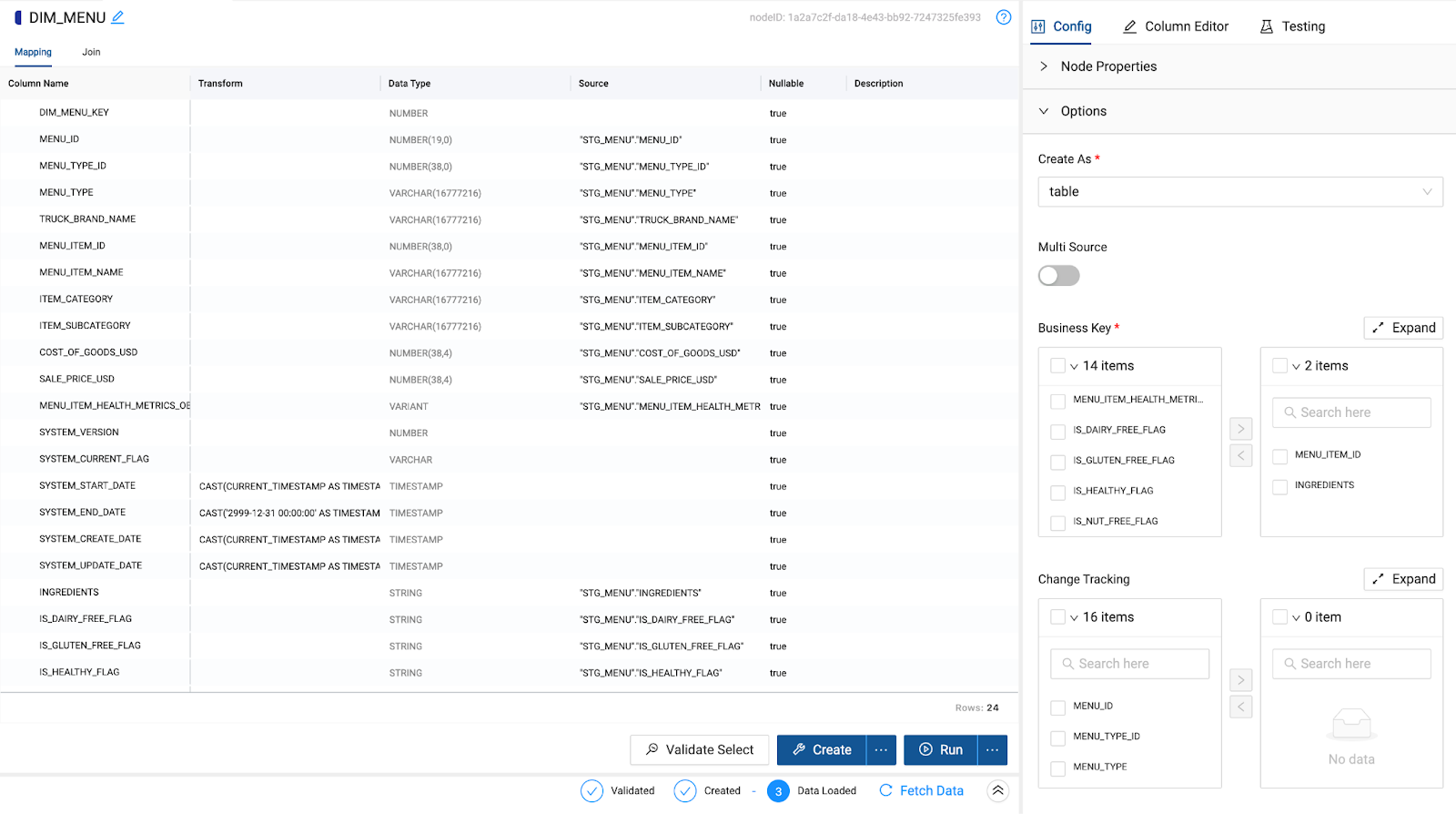

- Now let's create a Type 1 dimension which will help you focus on particular customer details. Navigate back to the Browser tab and select your

STG_MENUnode. Right click and select Add Node > Dimension from the dropdown menu.

- In your

DIM_MENUnode, navigate to the right and expand the Options drop down in the Config section. Under Business Key, check the box next toMENU_ITEM_IDand press the > button to select it as your business key.

- In the lower right of your screen, click the Create button to create your Dimension node and then the Run button to populate it with data.

Fact nodes represent Coalesce's implementation of a Kimball Fact Table, which consists of the measurements, metrics, or facts of a business process and is typically located at the center of a star or Snowflake schema surrounded by dimension tables.

Let's create a Fact table to allow us to query the metrics surrounding our customer orders.

- In your Browser tab, select the

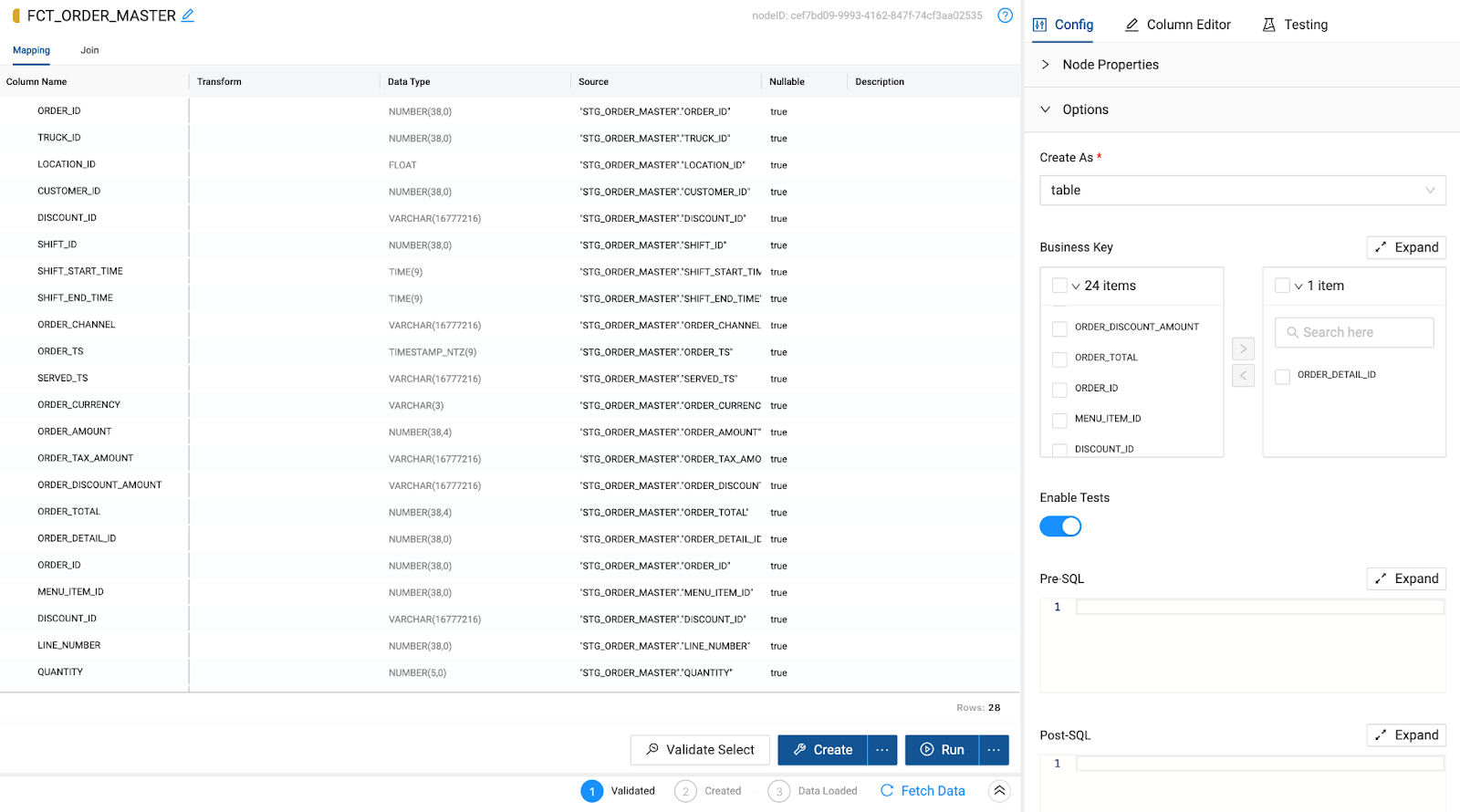

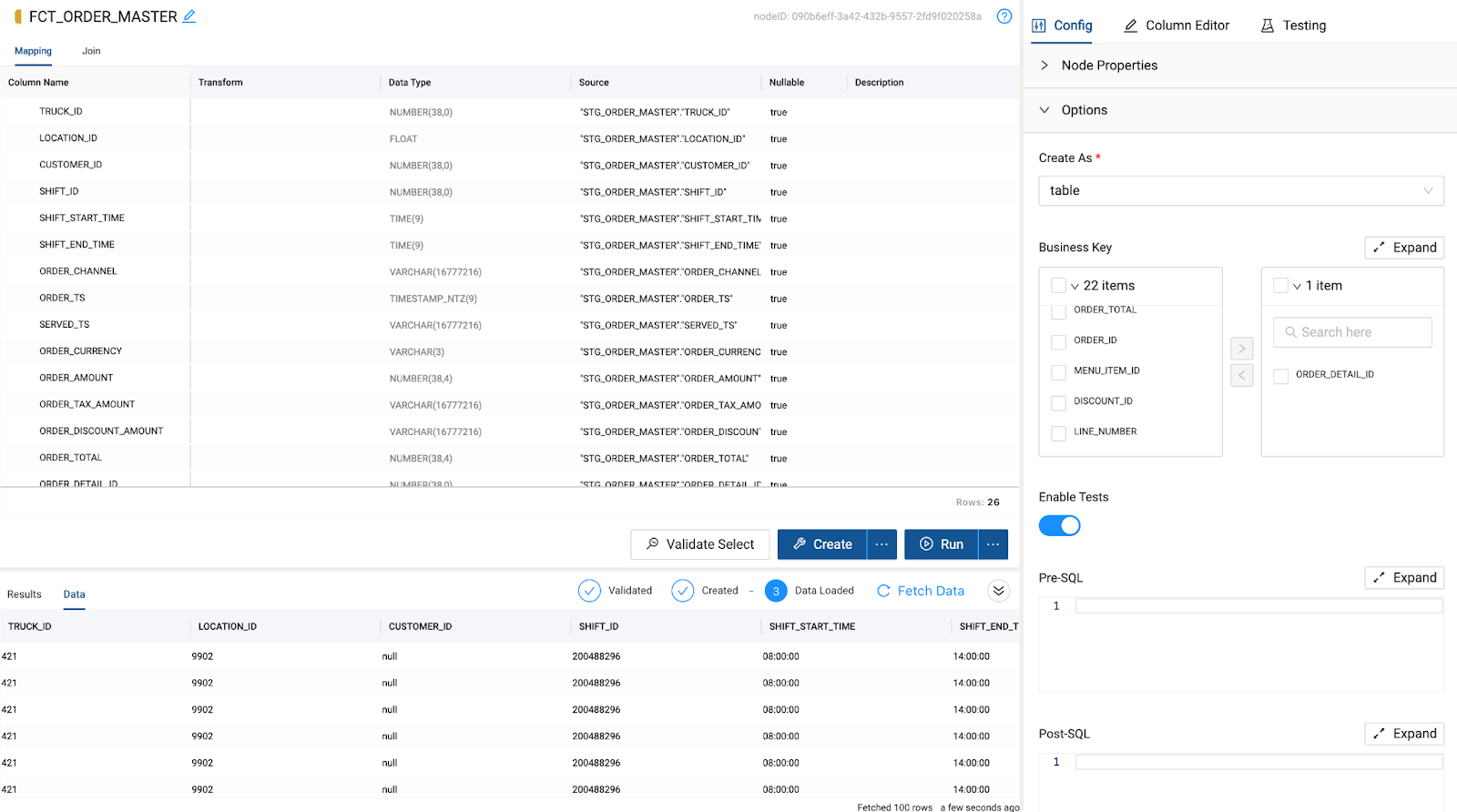

STG_ORDER_MASTERnode. Then right click and select Add Node > Fact.

- Navigate to the right and expand the Options drop down in the Config section. Under Business Key, check the boxes next to

ORDER_DETAIL_ID.Then press the > button to select them as your business key.

- Click the Create and Run buttons to run and populate your

FCT_ORDER_MASTERnode.

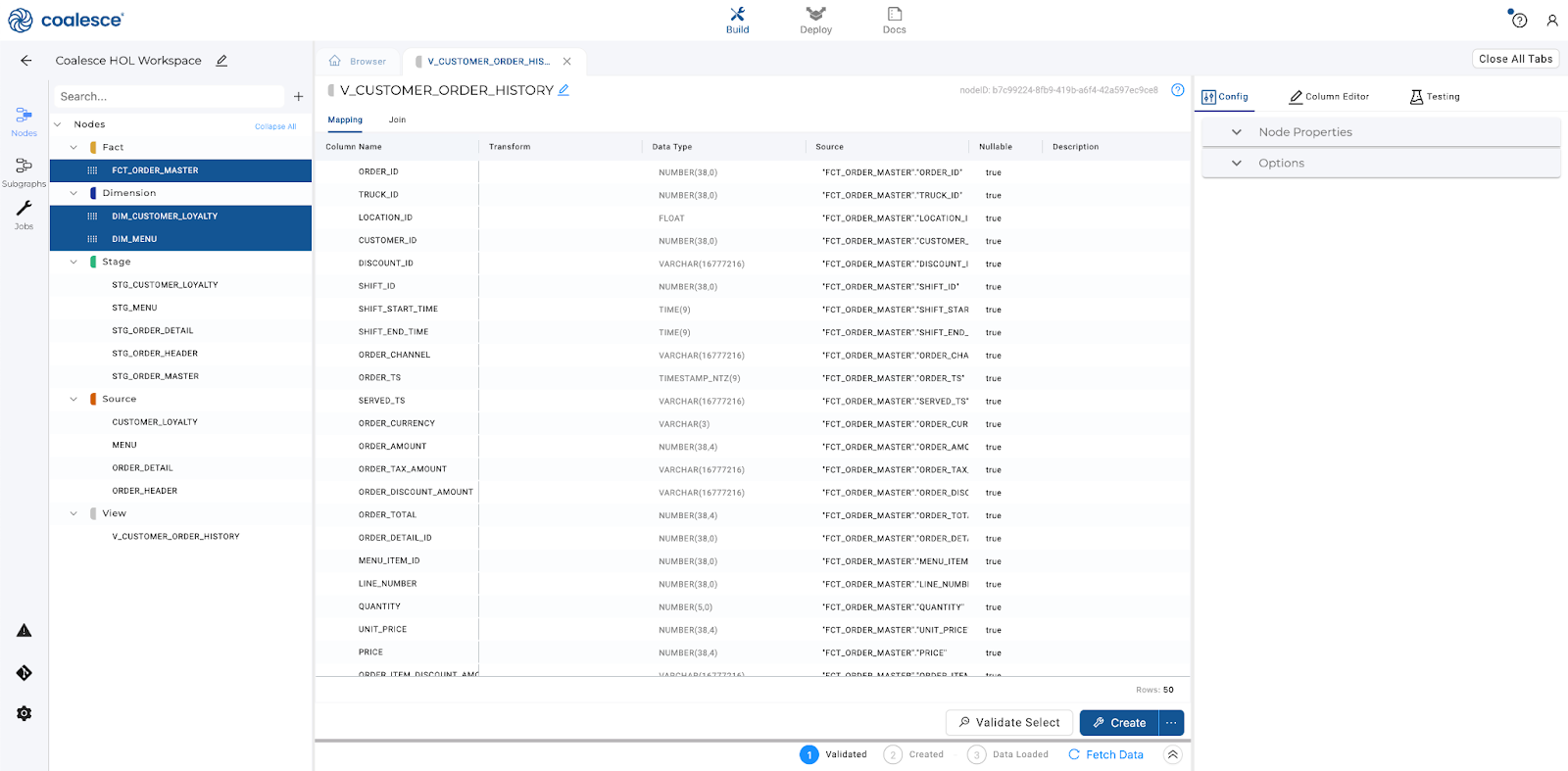

Now let's create a view for our analysts to to query allowing them to answer questions they have about our customers.

- Select

FCT_ORDER_MASTER, DIM_CUSTOMER_LOYALTY, andDIM_MENU,right click > join nodes > view.

- Rename your View node to

V_CUSTOMER_ORDER_HISTORY. To keep the data relevant to customer order history, we're going to delete many of these columns. First, delete all of theSYSTEM_columns. Next, delete theCUSTOMER_IDfrom theDIM_CUSTOMER_LOYALTYnode and theMENU_ITEM_IDfrom theDIM_MENUnode.

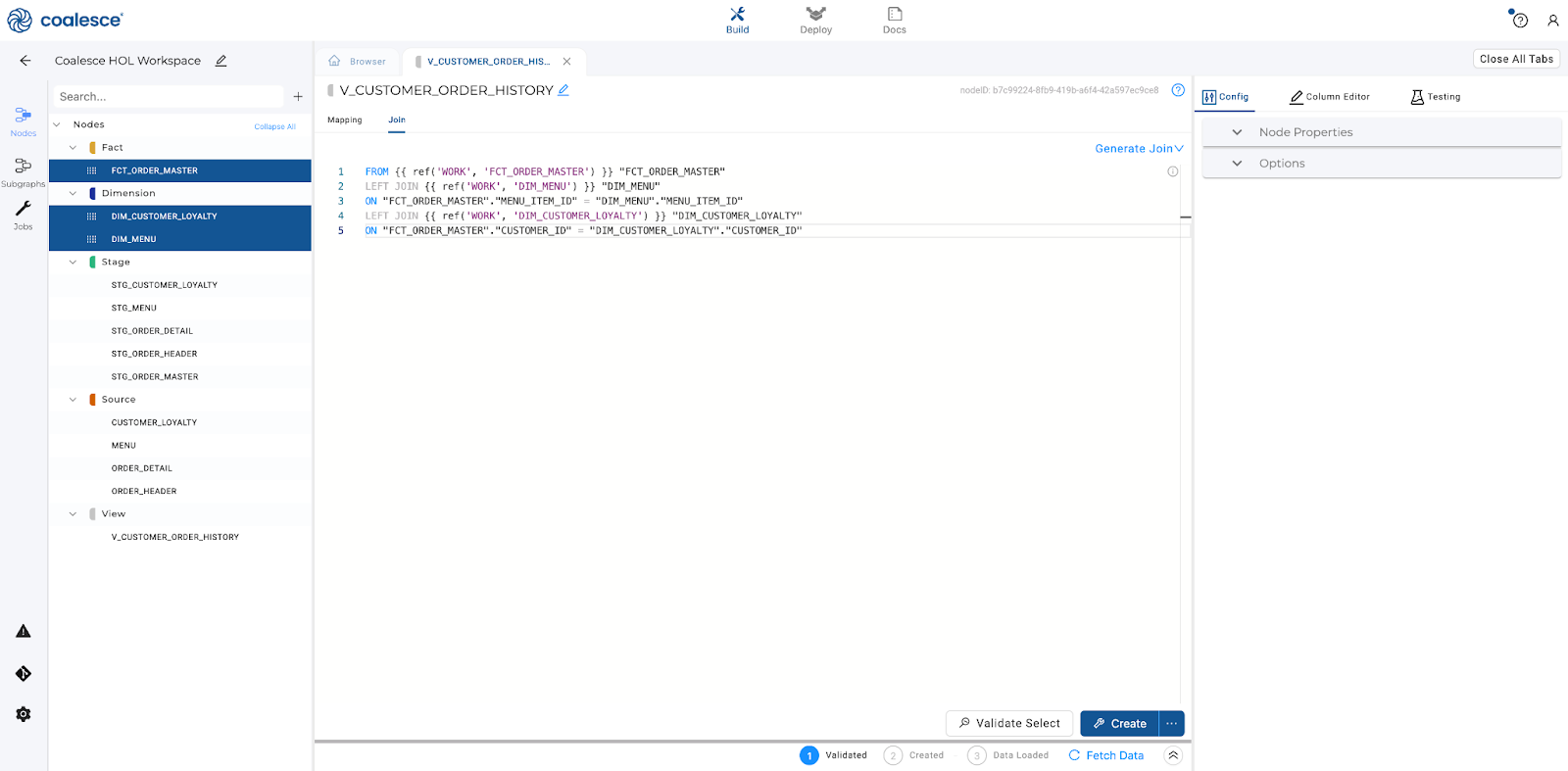

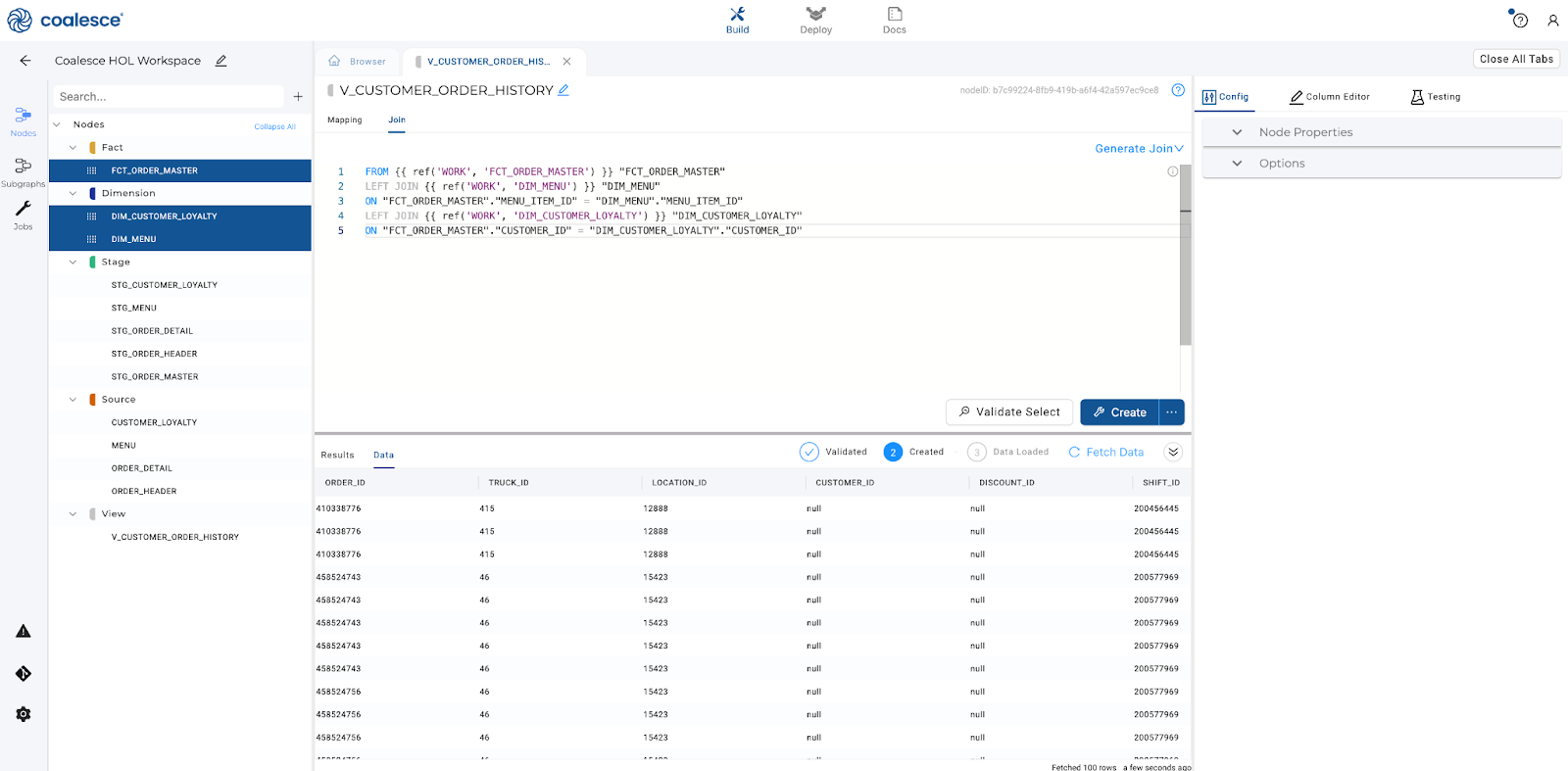

- Now click on the Join tab and enter column references as bolded below. If you write the join yourself, you should follow the below as a reference.

FROM {{ ref('WORK', 'FCT_ORDER_MASTER') }} "FCT_ORDER_MASTER"

LEFT JOIN {{ ref('WORK', 'DIM_MENU') }} "DIM_MENU"

ON "FCT_ORDER_MASTER"."MENU_ITEM_ID" = "DIM_MENU"."MENU_ITEM_ID"

LEFT JOIN {{ ref('WORK', 'DIM_CUSTOMER_LOYALTY') }} "DIM_CUSTOMER_LOYALTY"

ON "FCT_ORDER_MASTER"."CUSTOMER_ID" = "DIM_CUSTOMER_LOYALTY"."CUSTOMER_ID"

- Click the Create button to populate your view.

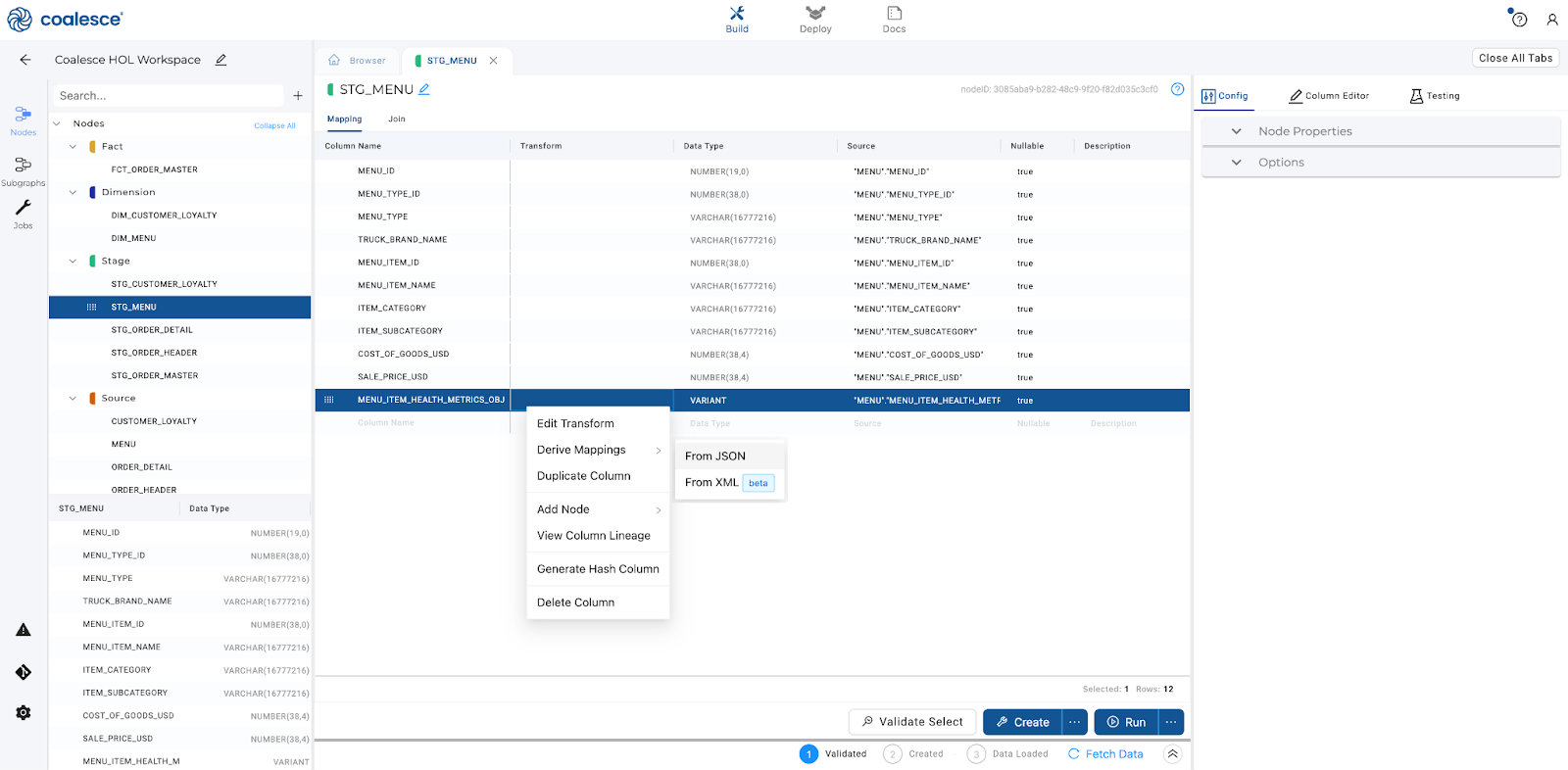

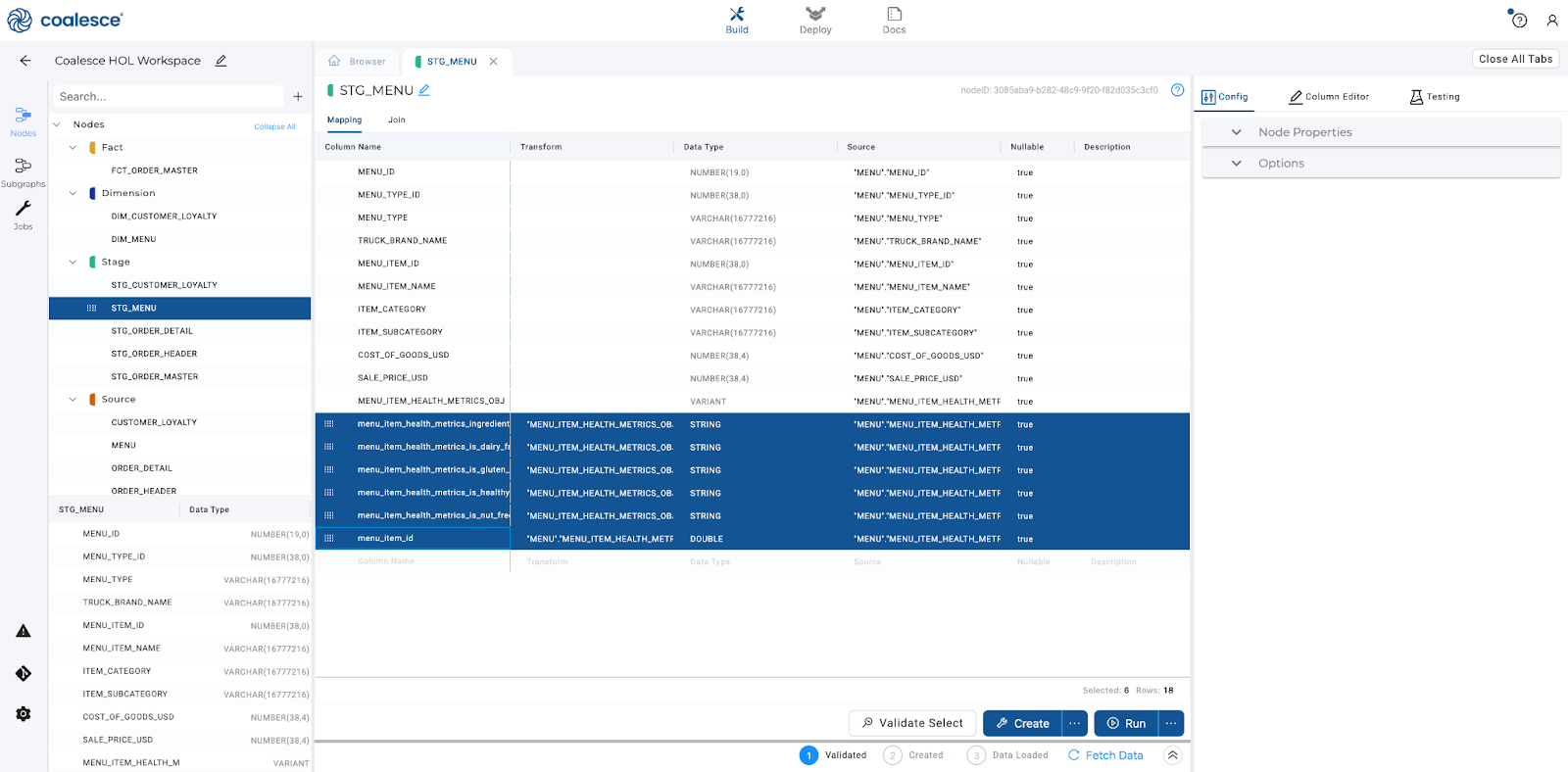

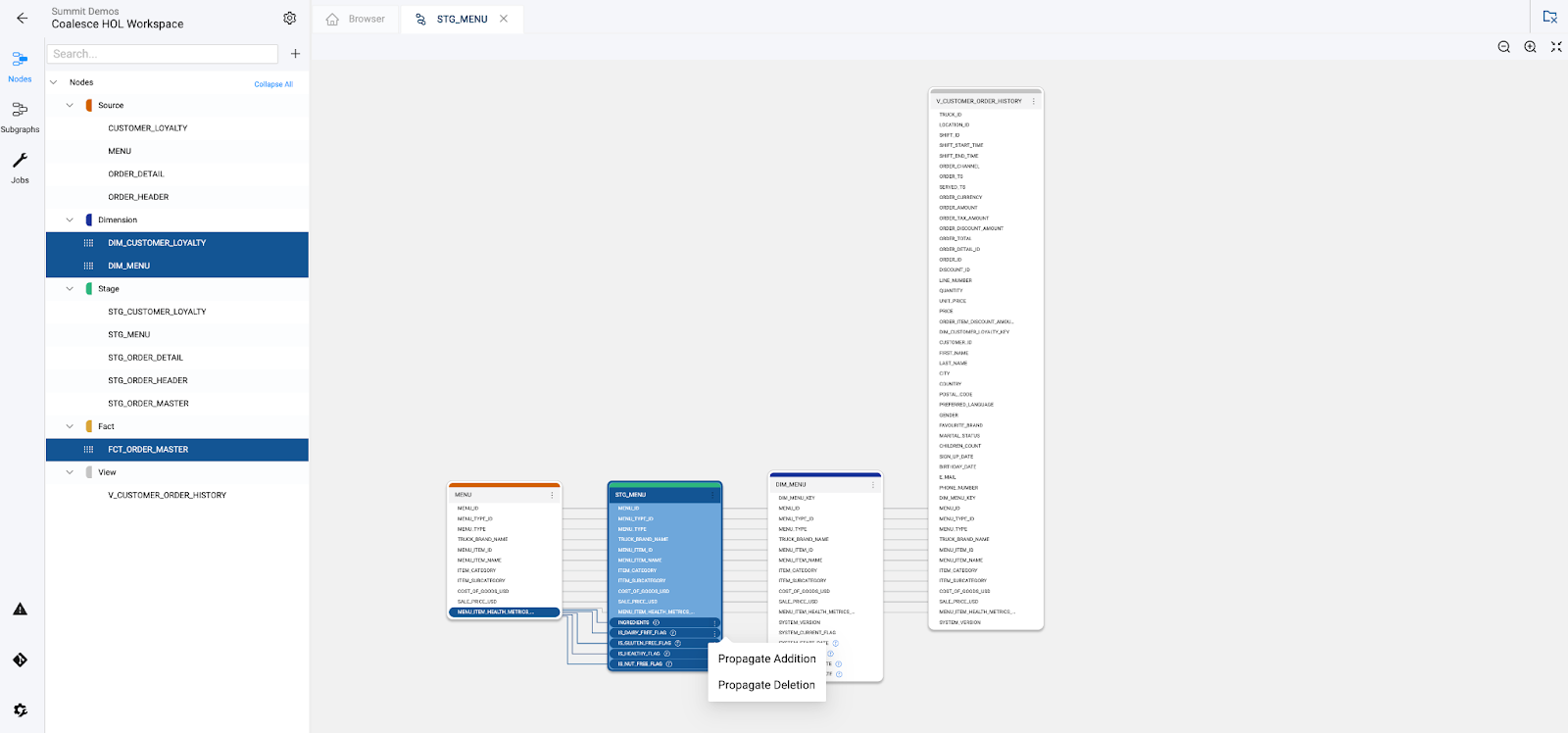

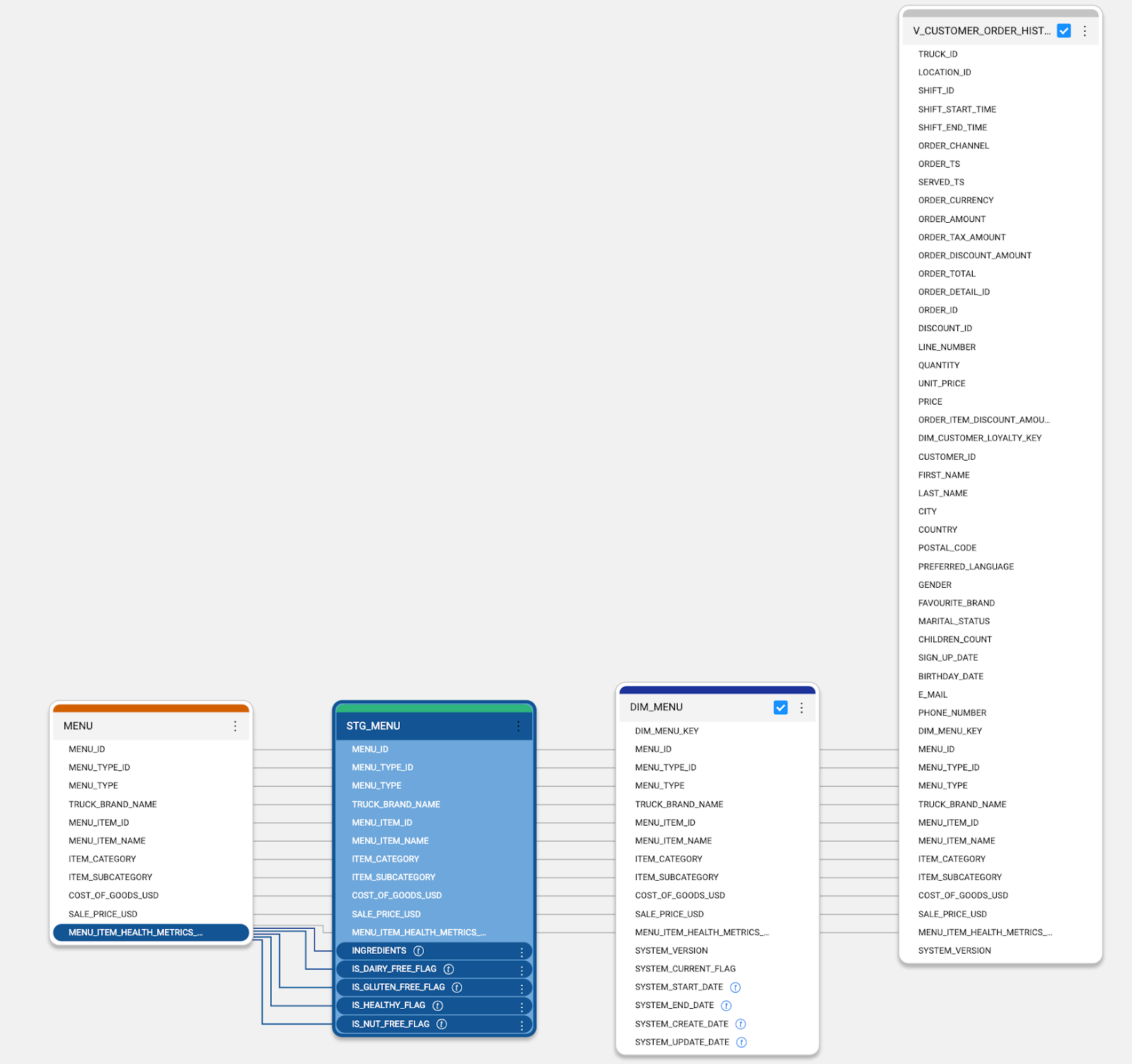

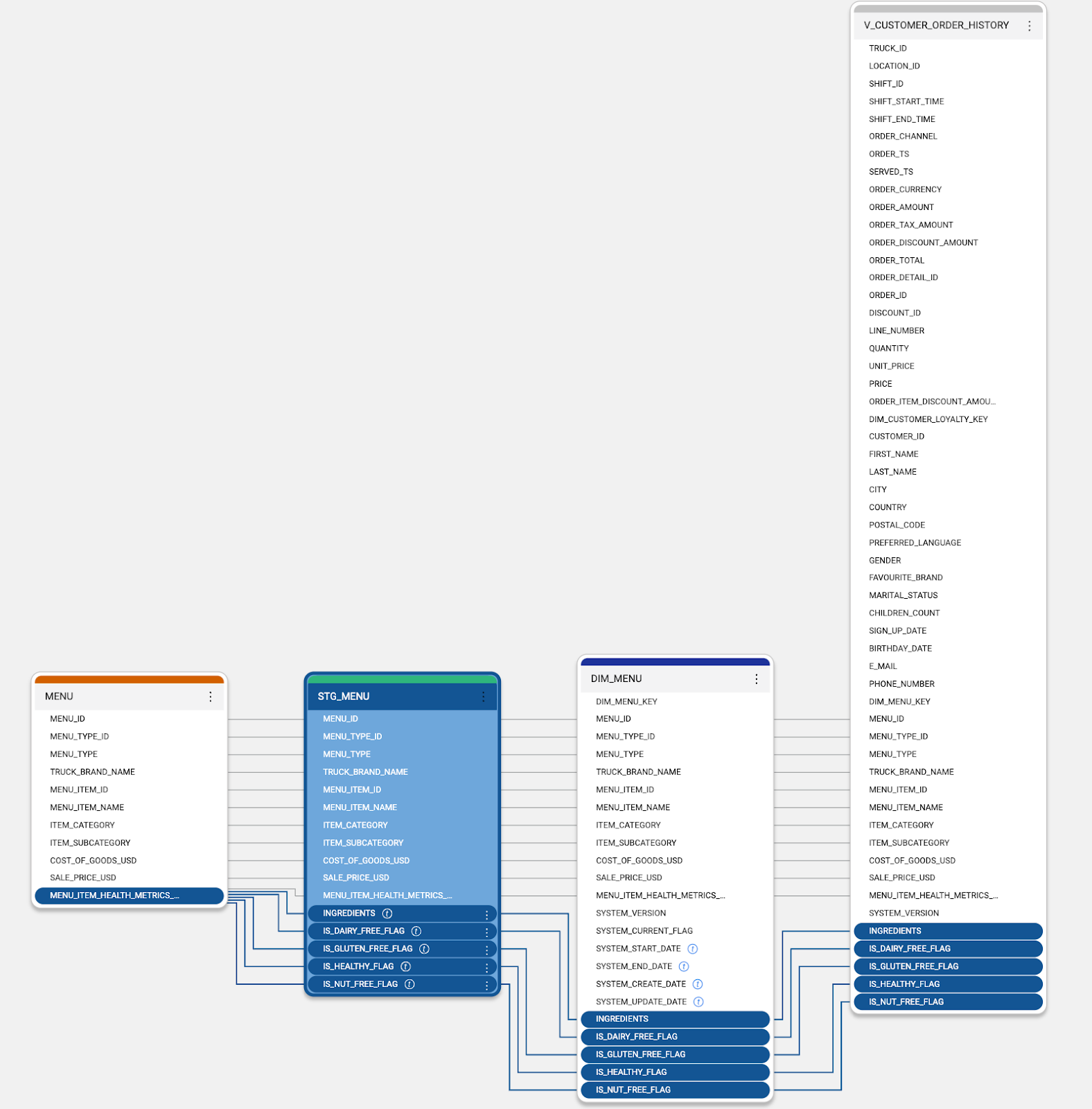

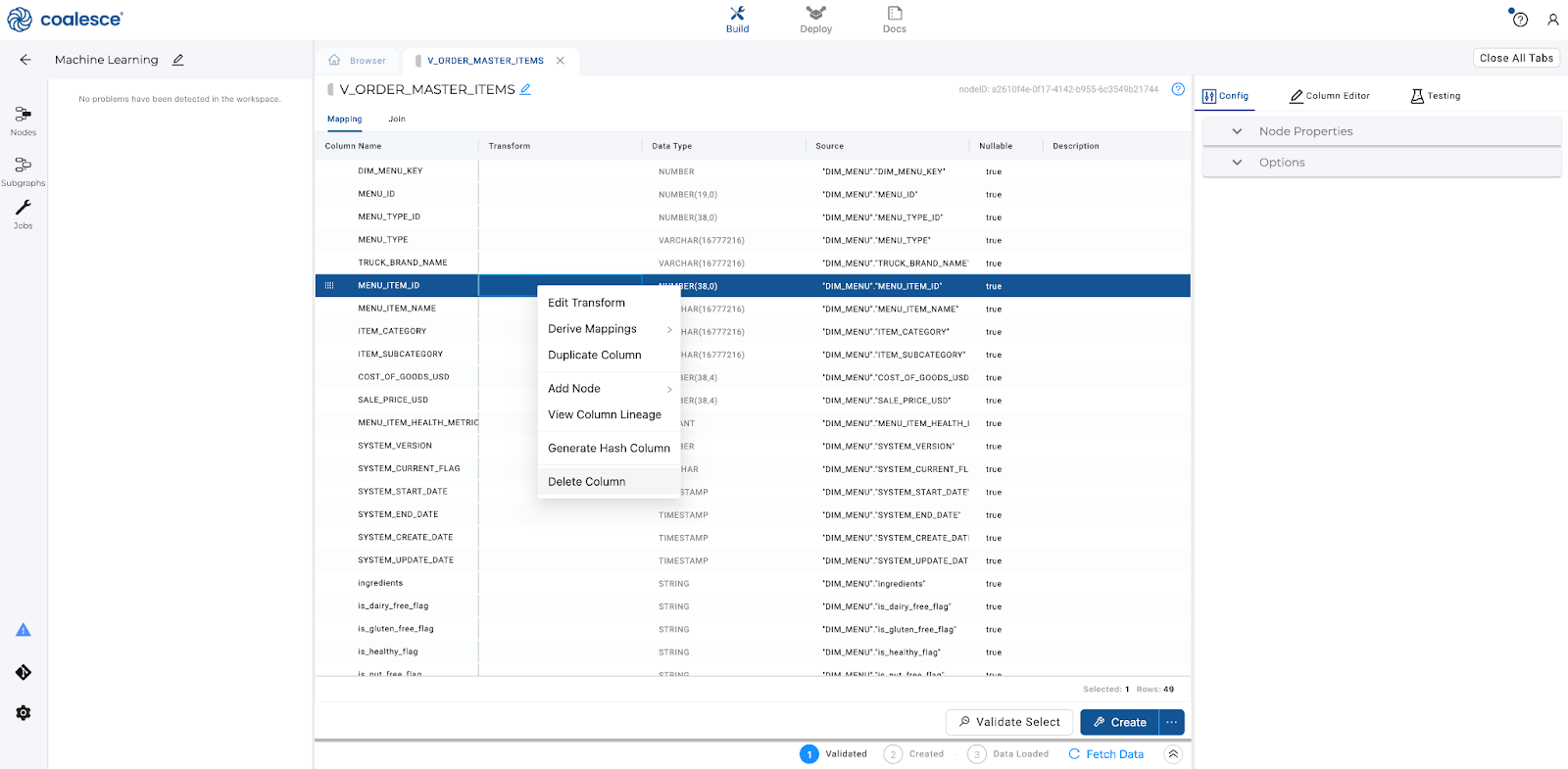

While you were building your pipeline, you may have noticed there was a VARIANT column containing JSON data in our STG_MENU node. You'll want to use this data to enrich your nodes and make more informed decisions. This means you first need to derive the JSON mappings from the VARIANT column into individual columns. Then, you need to propagate those columns to all of your downstream nodes in order to use them for decision making.

- Within the

STG_MENUnode, select theMENU_ITEM_HEALTH_METRICS_OBJcolumn. You'll notice the Data type isVARIANT. Right click on the column and select Derive Mappings > FromJSON. Coalesce will automatically unnest this data into their own separate columns.

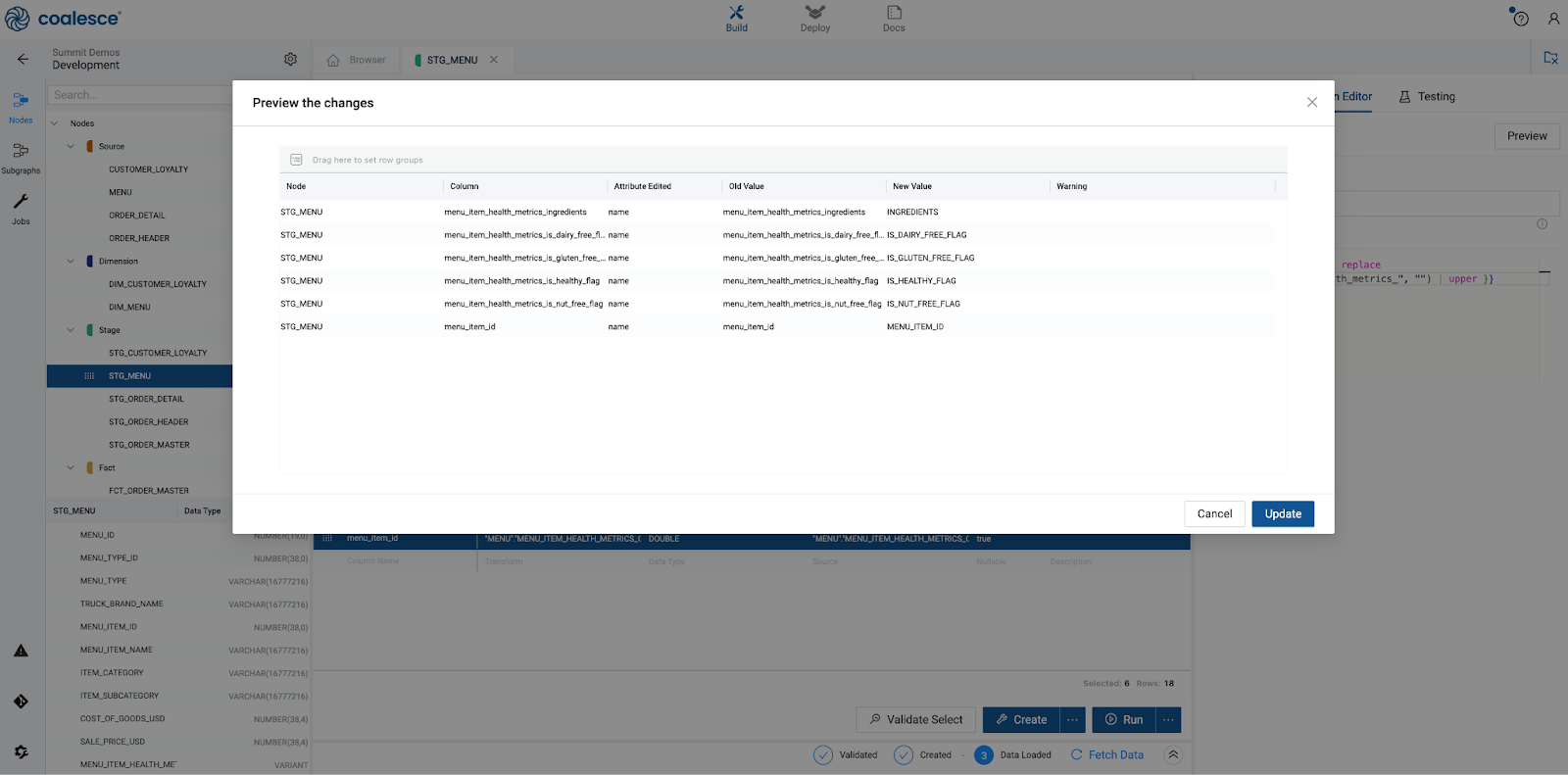

- The columns contain a prefix inherited from the

VARIANTcolumn. You can bulk edit these columns and quickly update the name to remove the unneeded prefix.

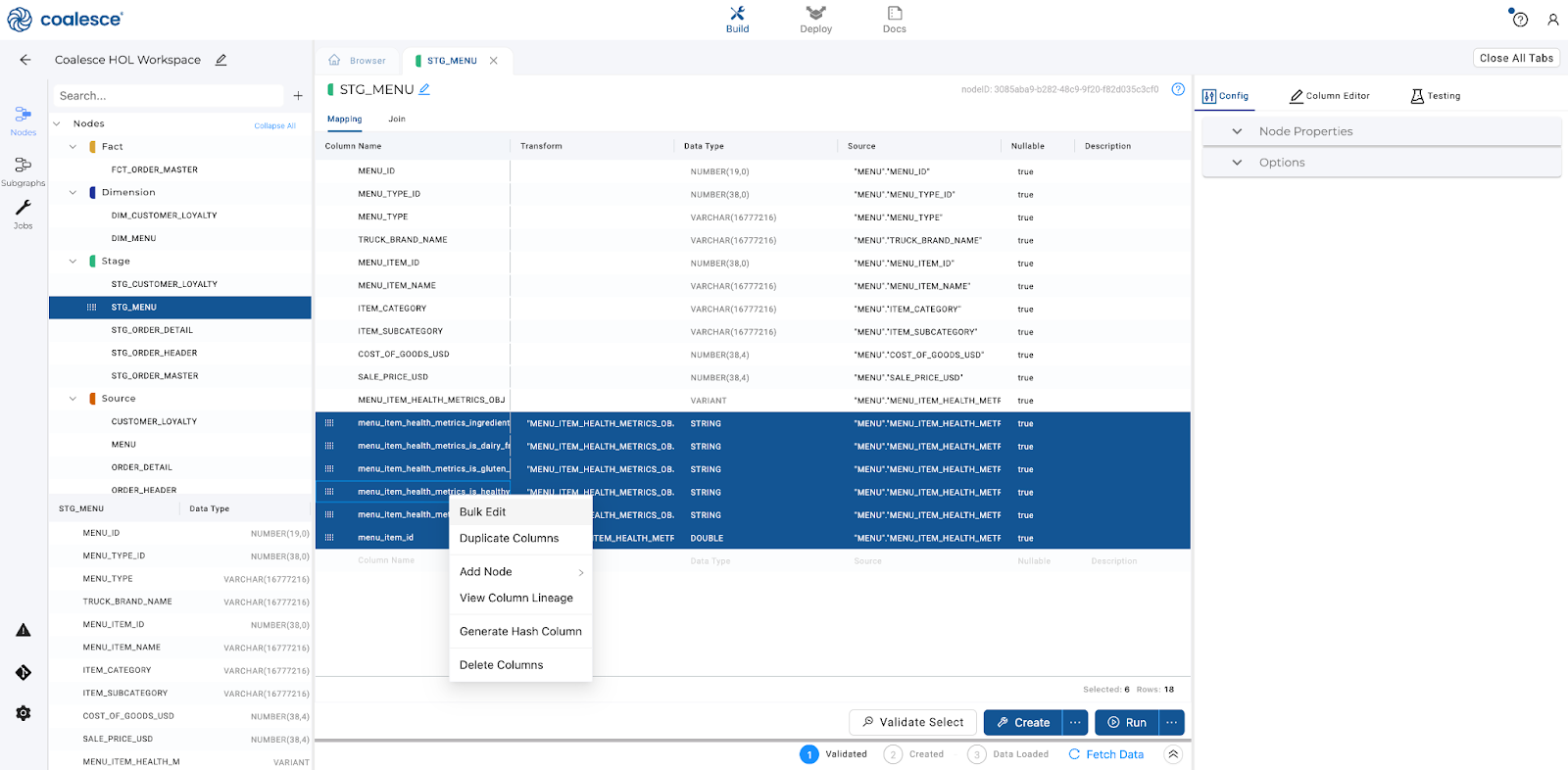

- Select the

menu_item_health_metrics_ingrediantscolumn, hold shift, and selectmenu_item_id. This will highlight all of the columns you just parsed. Right click on any of the columns and select Bulk Edit.

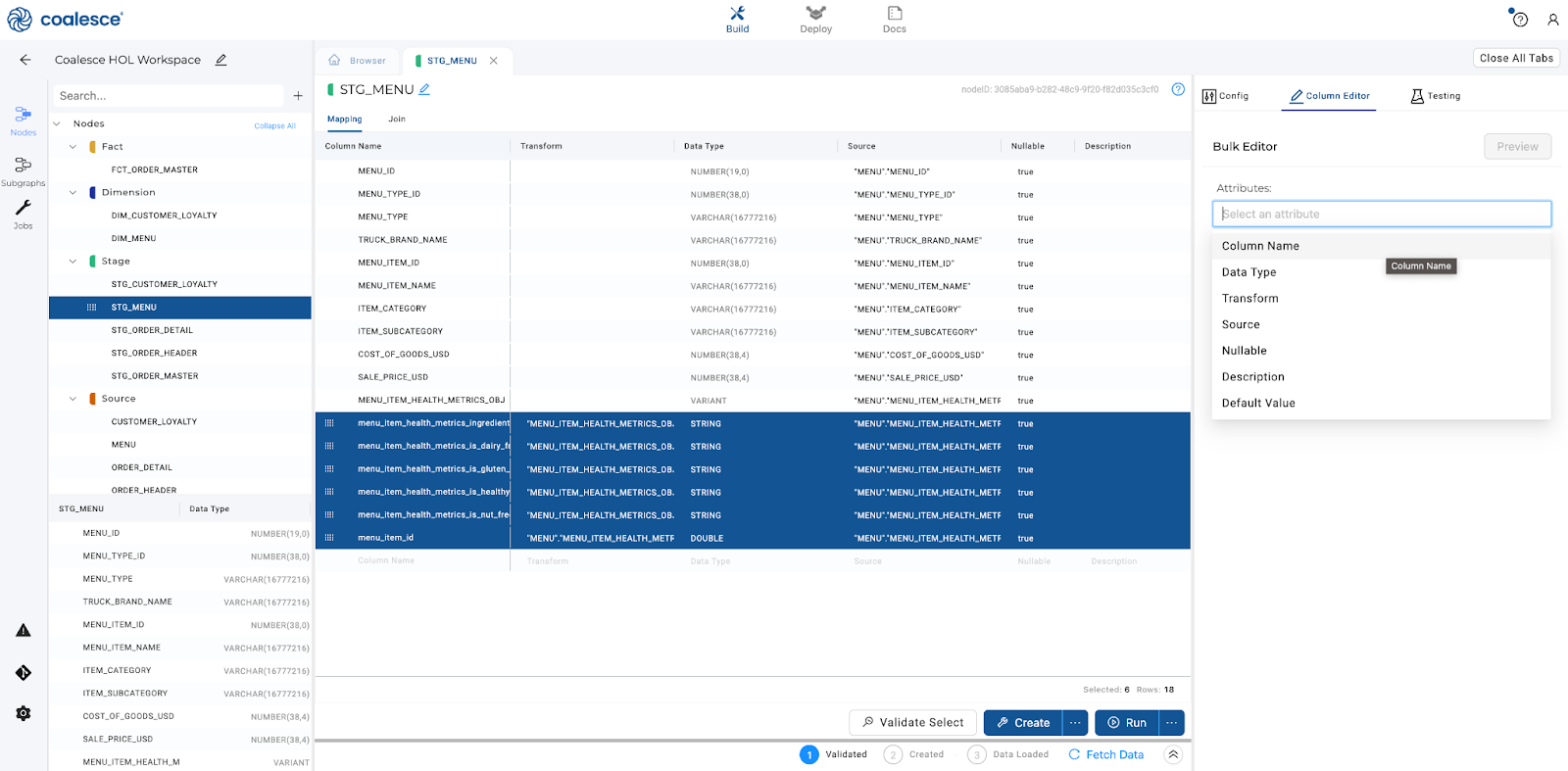

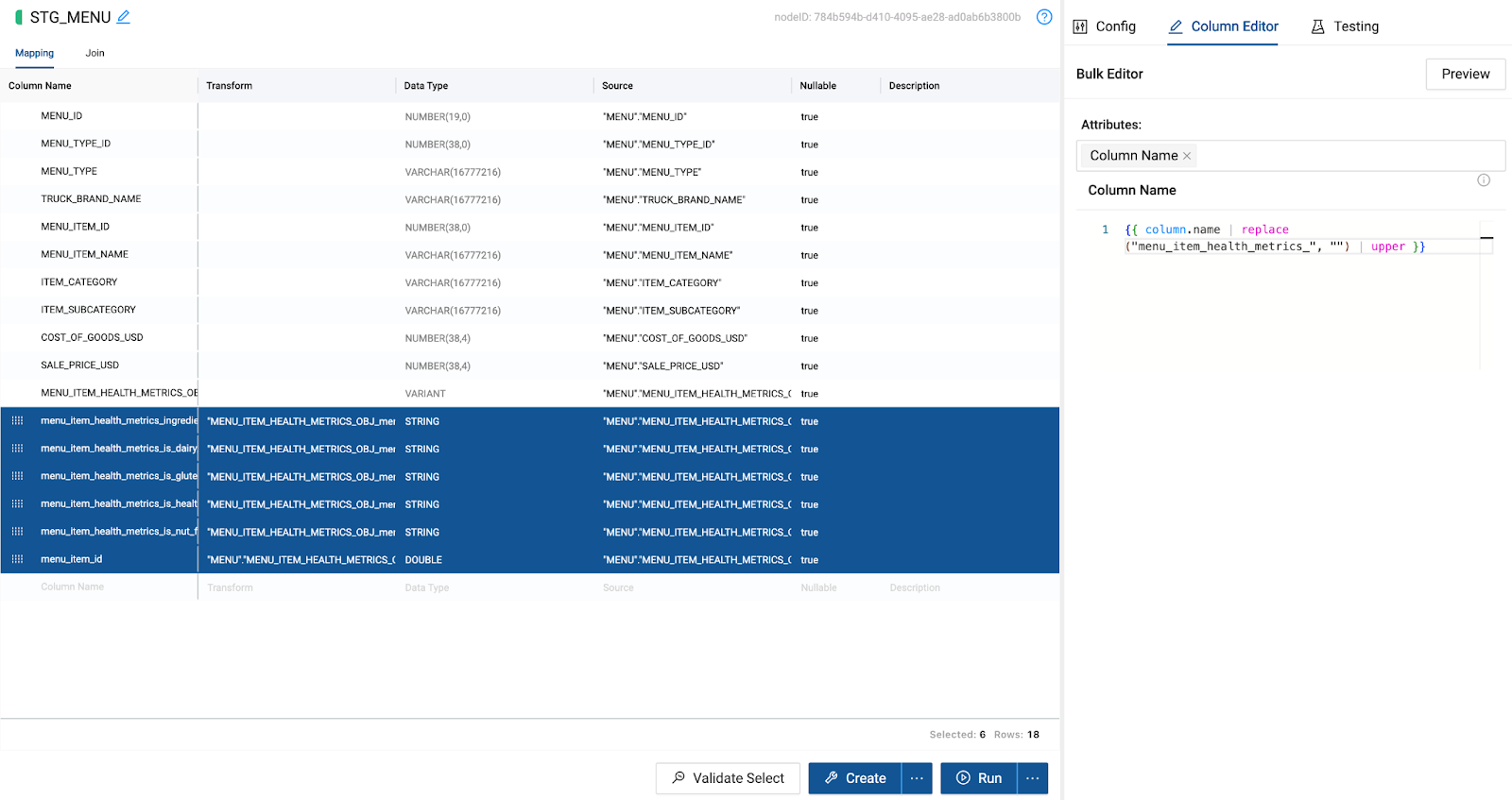

- In the Column Editor on the right side of the screen, select Column Name from the Select an attribute area.

- Within the code editor, write the following code:

{{ column.name | replace("menu_item_health_metrics_", "") | upper }}

- By selecting preview, you can view the changes that will be made to your column. Select Update to make the changes.

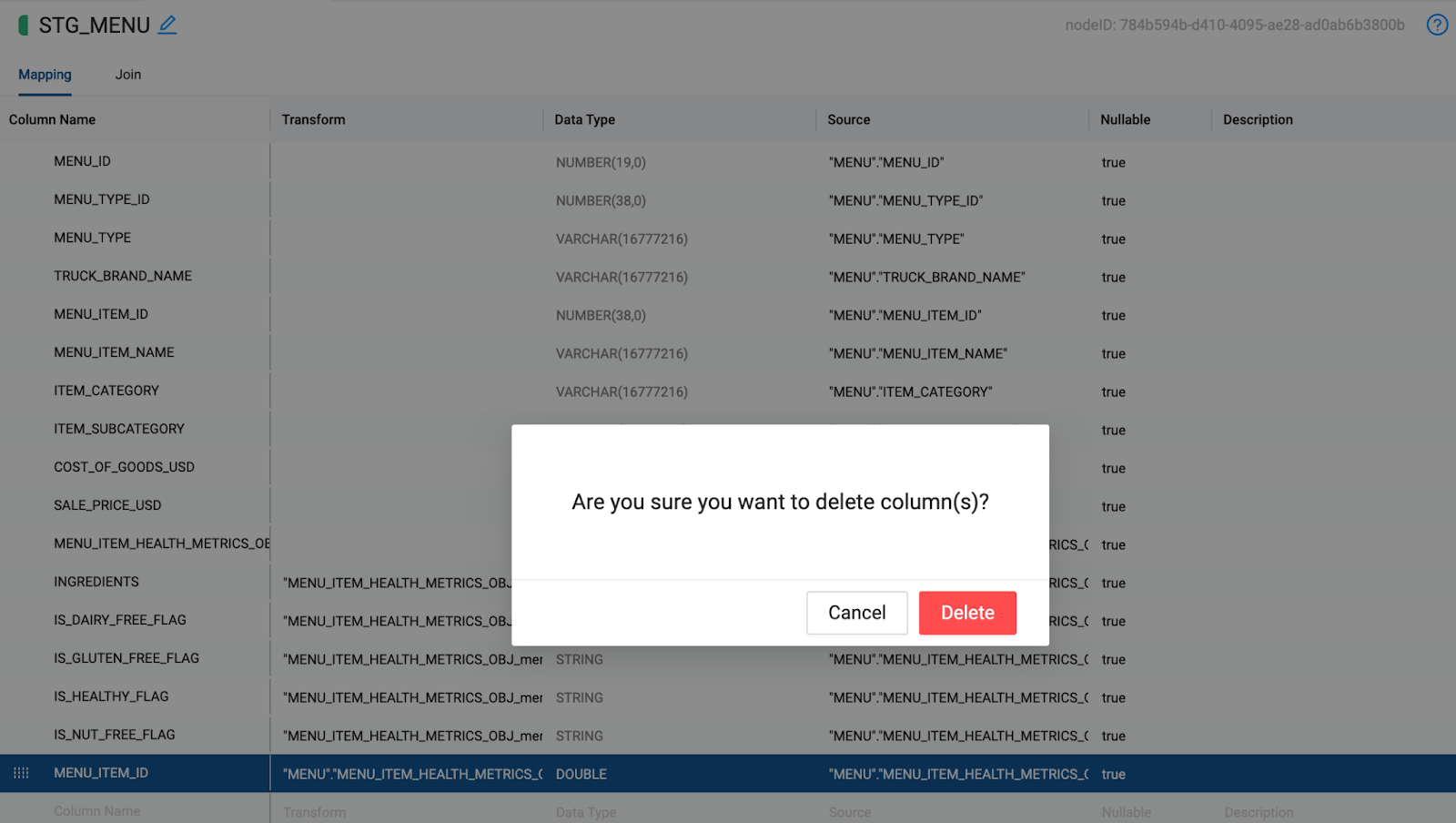

- You will now have two MENU_ITEM_ID columns in the node. Delete the column you just renamed.

- Now that you have derived our mappings from a

JSONcolumn and have bulk edited them to be more understandable, you can propagate those columns to all of the applicable downstream nodes.

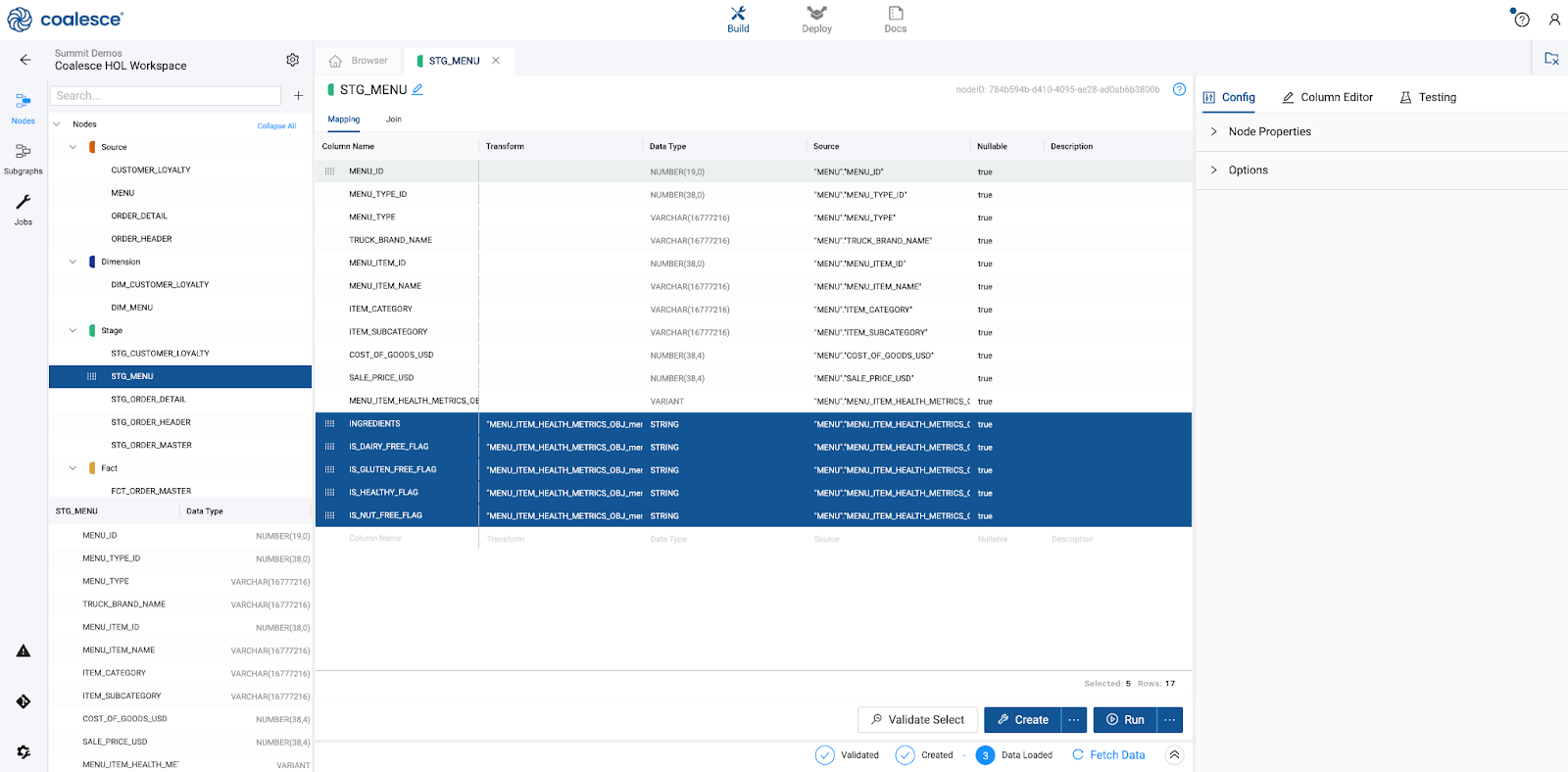

- Select the

ingredientscolumn, hold down shift, and select theIS_NUT_FREE_FLAGcolumn to highlight all of the columns you want to propagate.

- Right click on any of the columns and select View Column Lineage.

- You can see all of the columns highlighted with the

STG_MENUnode. Click on the ellipses next to any of the columns and select Propagate Addition.

- Select the check box next to

DIM_MENUandV_CUSTOMER_ORDER_HISTORYin order to propagate the columns to these nodes.

- Select Preview and then Apply to finalize these changes. You can confirm the warning about making changes without first committing your work to Git.

- Open the DIM_MENU node and update the business key by adding ingredients as a column. Since we added this as a column in the previous section, we need to update the node. Create and run the node.

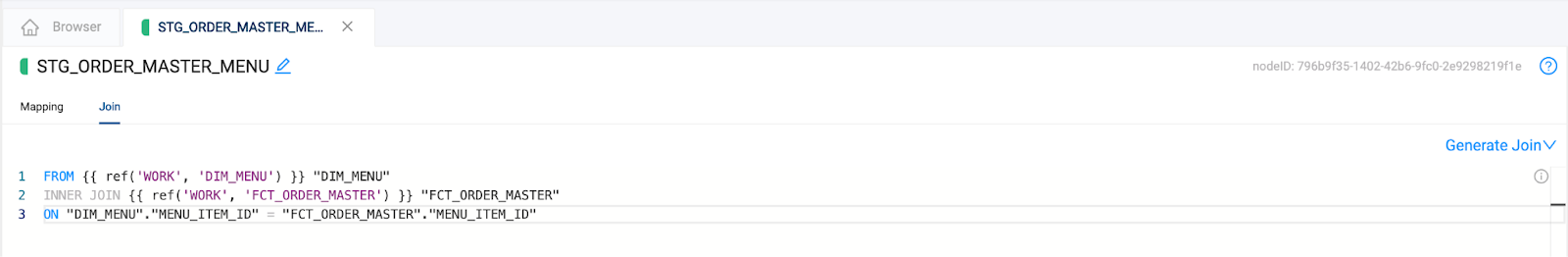

- To finish preparing your data for ML forecasting, we'll perform one more join. Navigate back to the Browser and select the

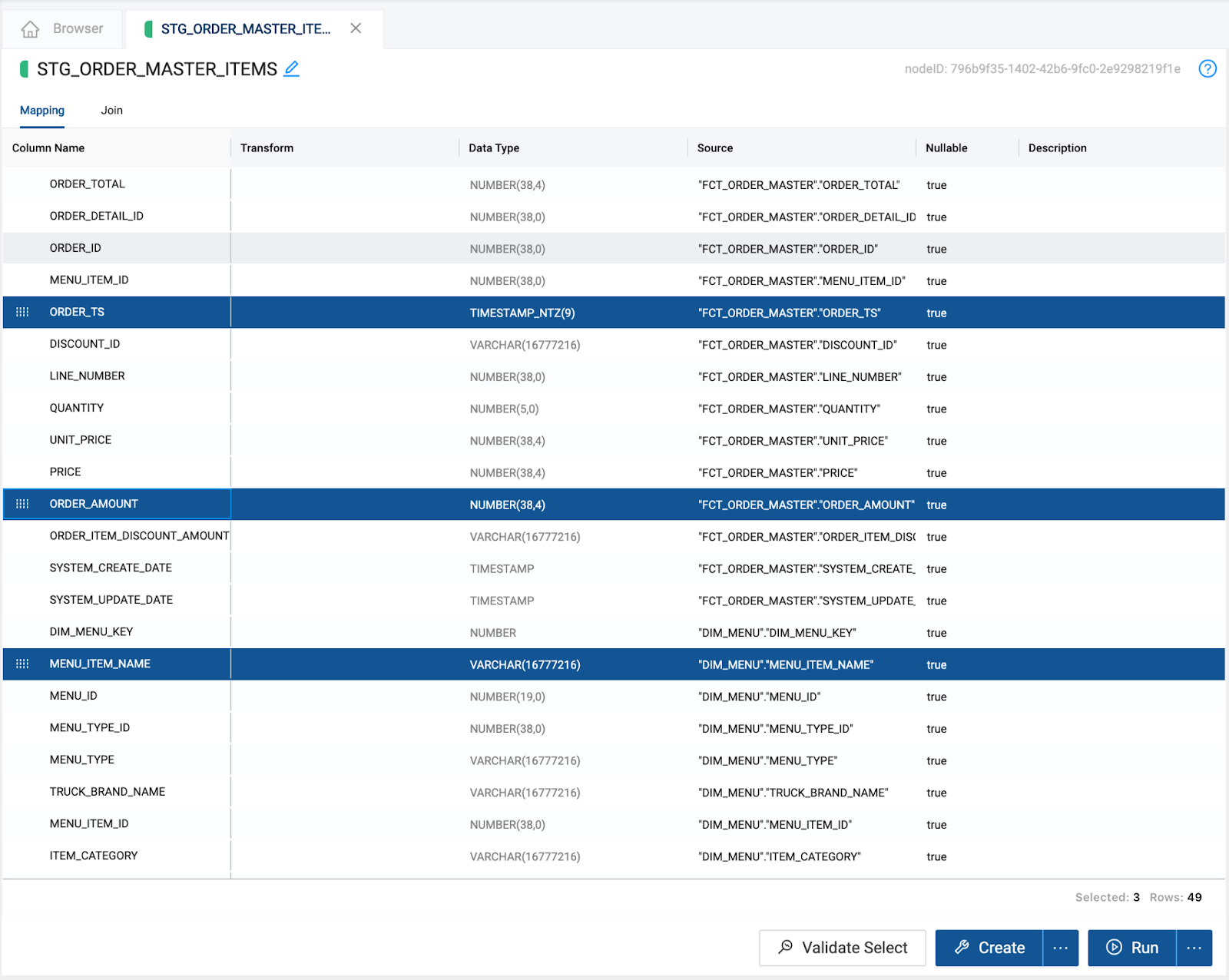

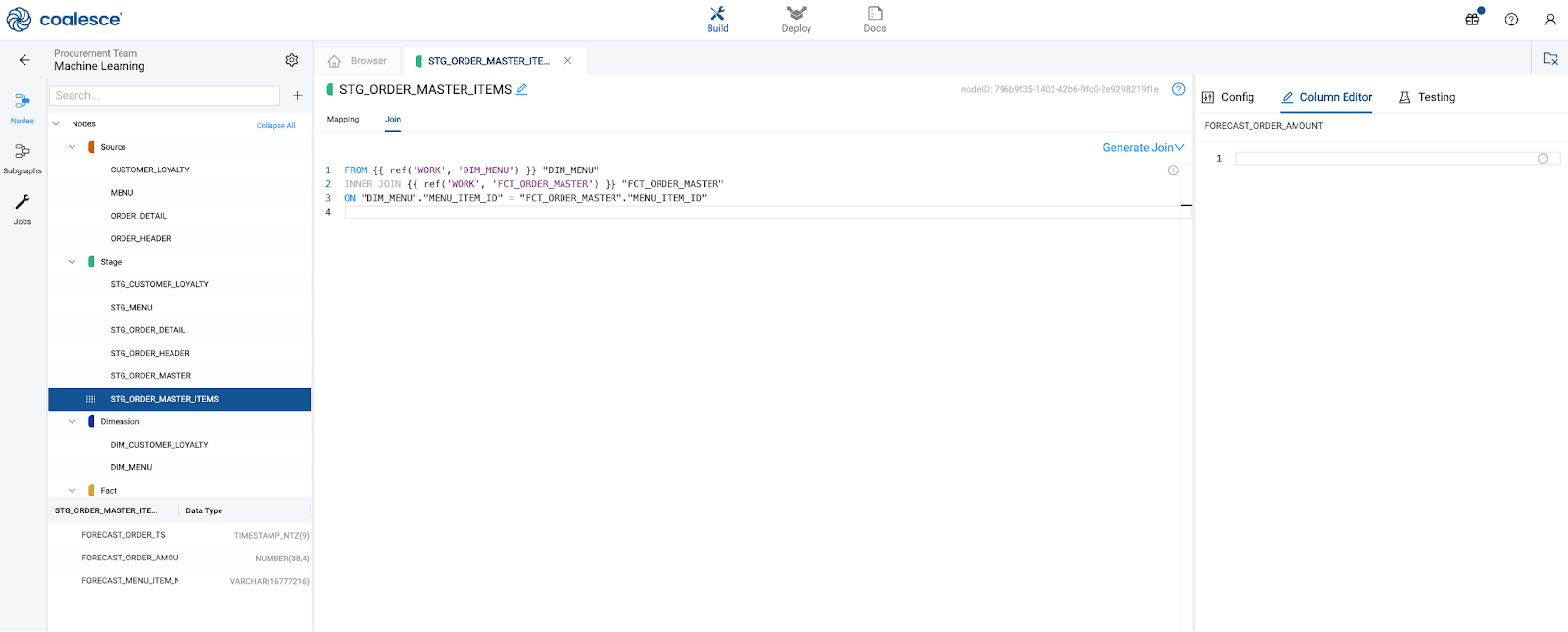

FCT_ORDER_MASTERnode and theDIM_MENUnode by holding down the shift key and clicking on both nodes. Right click on either node and select Join Nodes, and then select Stage. This will bring you to the node editor of your new Stage node.

- Select the Join tab in the Node Editor. This is where you can define the logic to join data from multiple nodes, with the help of a generated Join statement. Add column relationships in the last line of the existing Join statement using the bolded code below:

FROM {{ ref('WORK', 'DIM_MENU') }} "DIM_MENU"

INNER JOIN {{ ref('WORK', 'FCT_ORDER_MASTER') }} "FCT_ORDER_MASTER"

ON "DIM_MENU"."MENU_ITEM_ID" = "FCT_ORDER_MASTER"."MENU_ITEM_ID"

- You can rename the node by clicking on the pencil icon next to the node name and give the node a name of your choice. Rename the node

STG_ORDER_MASTER_ITEMS.

- Back in the mapping grid, delete one of the duplicate

MENU_ITEM_IDcolumns, as well as all theSYSTEM_columns and then create and run the node.

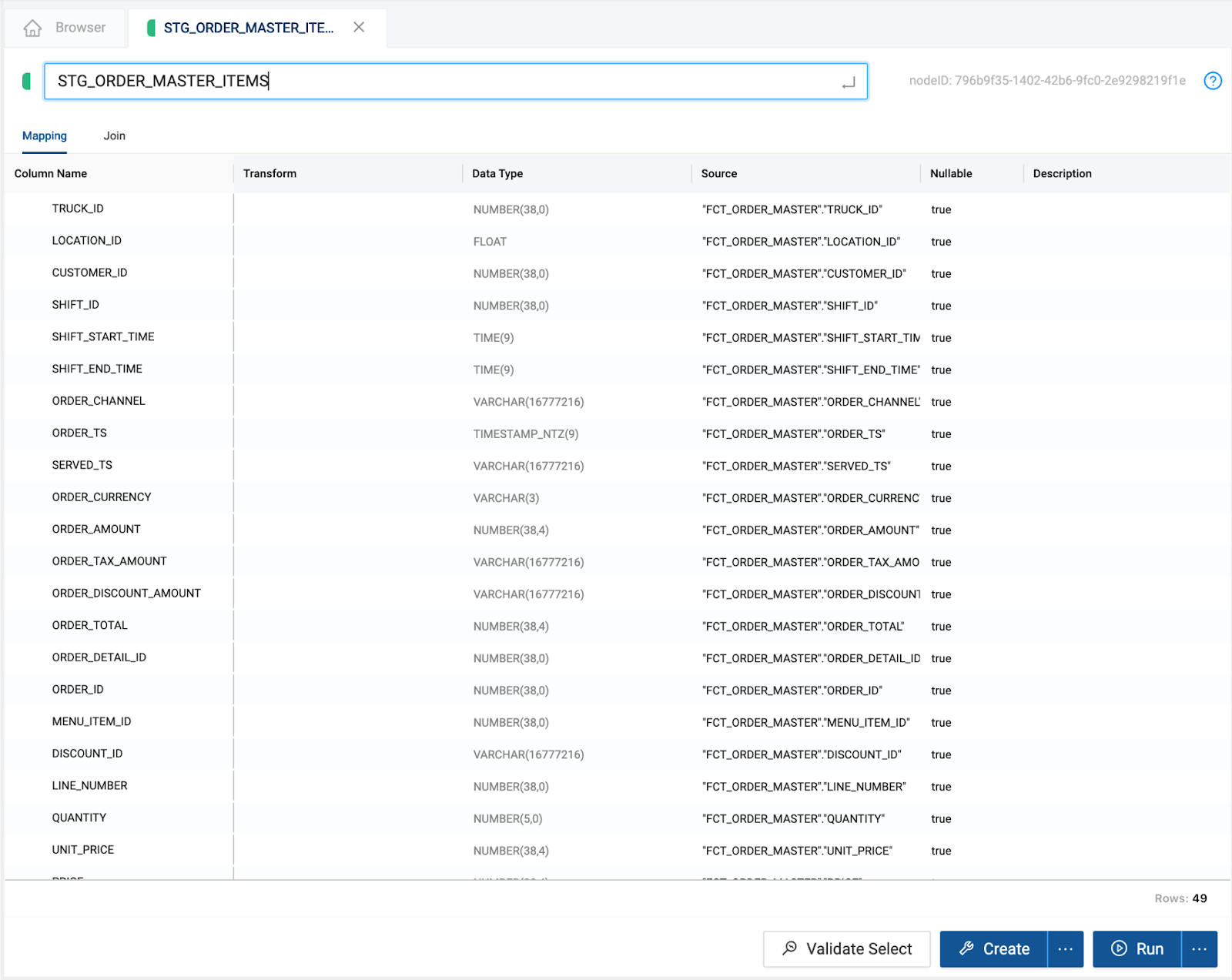

Now we will prepare the data for our ML forecast by selecting the columns we want to work with and applying single column transformations to that data.

The ML Forecast node we will use later in the lab will rely on three columns to produce a forecast:

- Series column

- Timestamp column

- Target column

We will structure our STG_ORDER_MASTER_ITEMS node so that it only contains the columns we will need for our ML Forecast Node.

MENU_ITEM_NAME-> Series columnORDER_AMOUNT-> Target columnORDER_TS-> Timestamp column

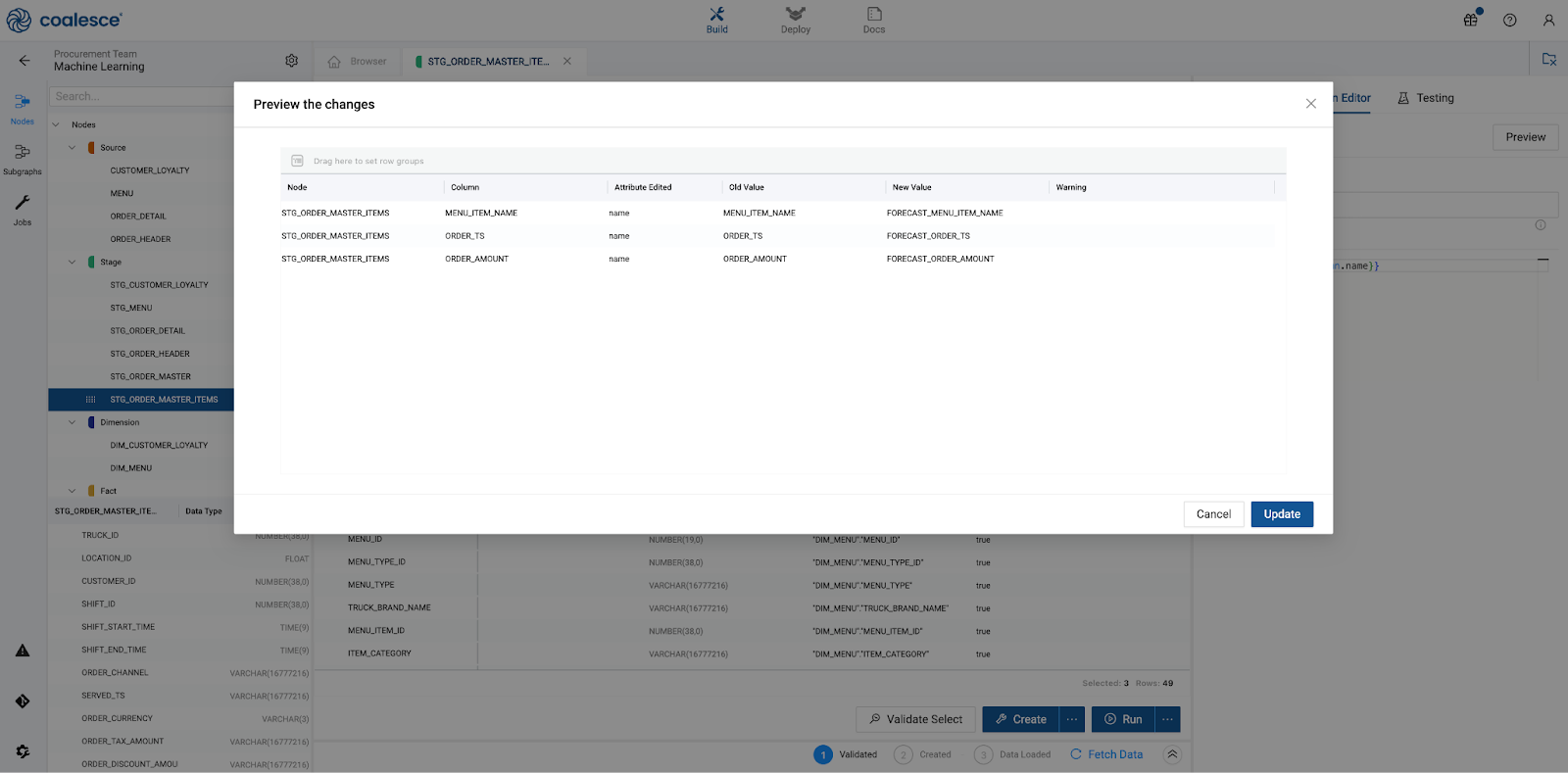

- To structure the node with the columns that we need, the first thing we will do is rename the columns we want to keep. Select the

ORDER_AMOUNT,ORDER_TS, andMENU_ITEM_NAMEcolumns, and right click on either of the columns and select, Bulk Edit.

- In the Bulk Editor, select the Column Name attribute and copy and paste the code below to apply a

FORECASTprefix to each of the columns we want to keep. Select Preview -> Update to rename the columns.

FORECAST_{{column.name}}

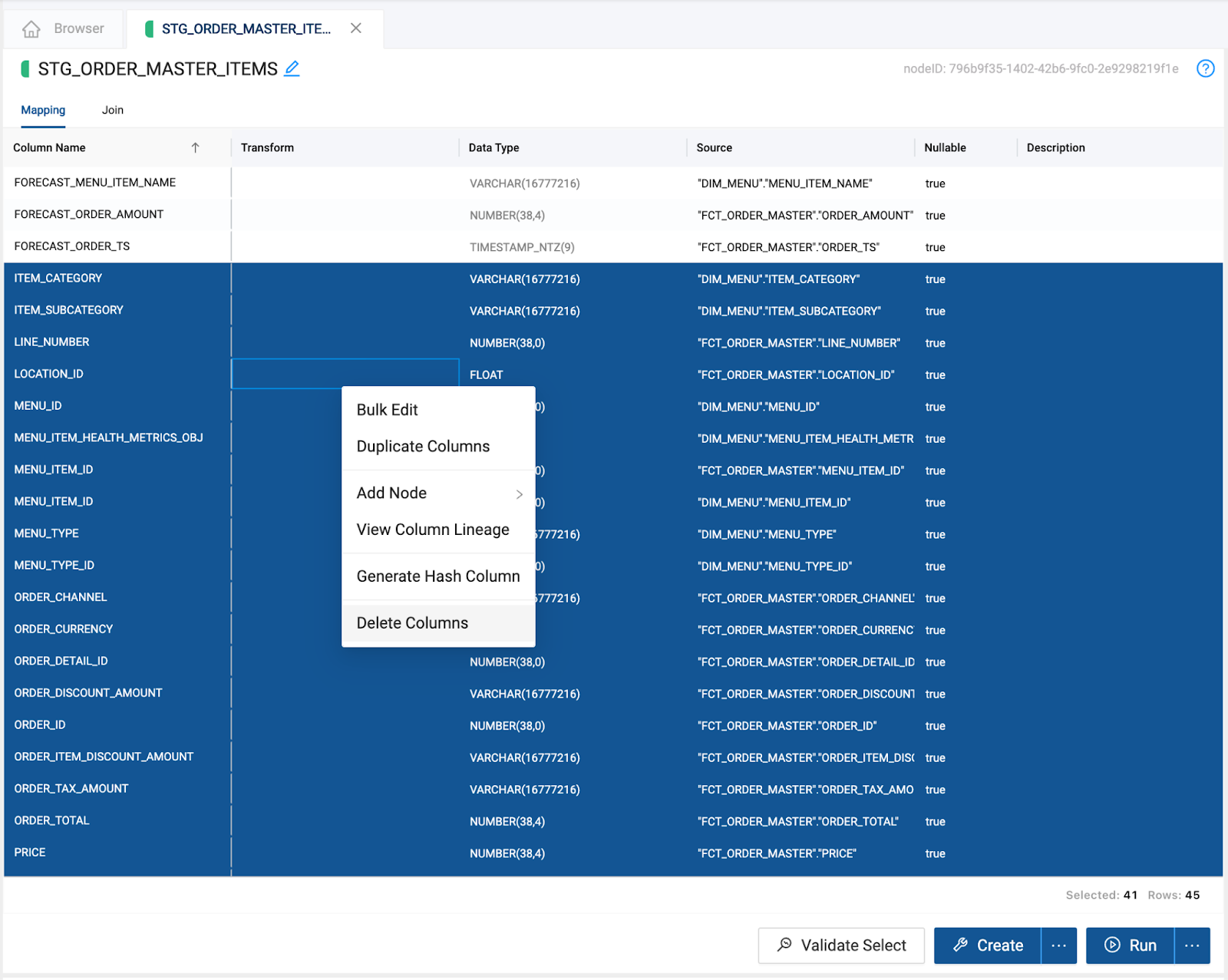

- Click on the Column Name column header in the mapping grid to sort the column names in alphabetical order. Using the shift key, select all of the columns that do not contain a

FORECAST_prefix, right click on them, and delete them.

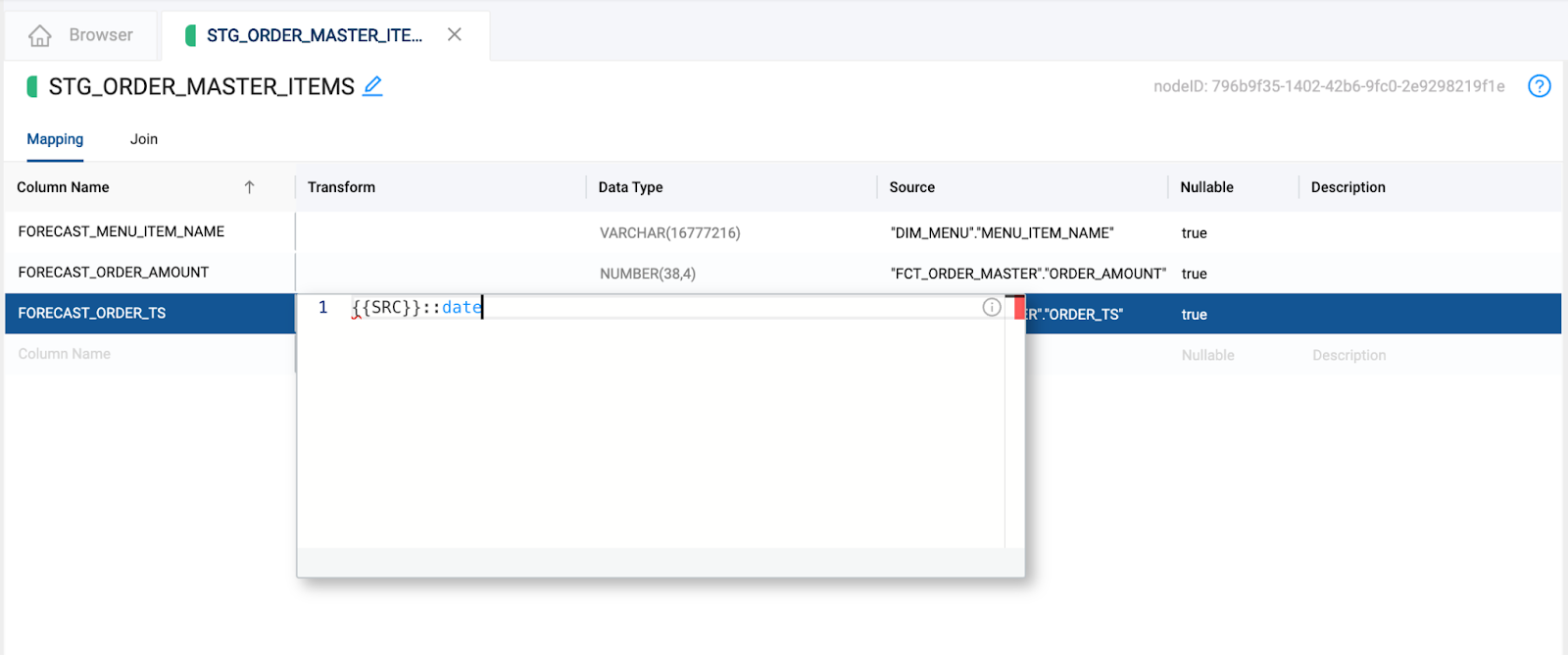

- With the columns we need for our forecast in this node, we will now apply some column transformations. Currently the Timestamp column records values down to the second interval. For this lab, we want to predict order values at the day level. Let's add this transformation.

Select the FORECAST_ORDER_TS column and click into the transform field in the mapping grid. You can cast a timestamp as a date, removing the seconds, minutes, and hours, by using the transformation below:

{{SRC}}::date

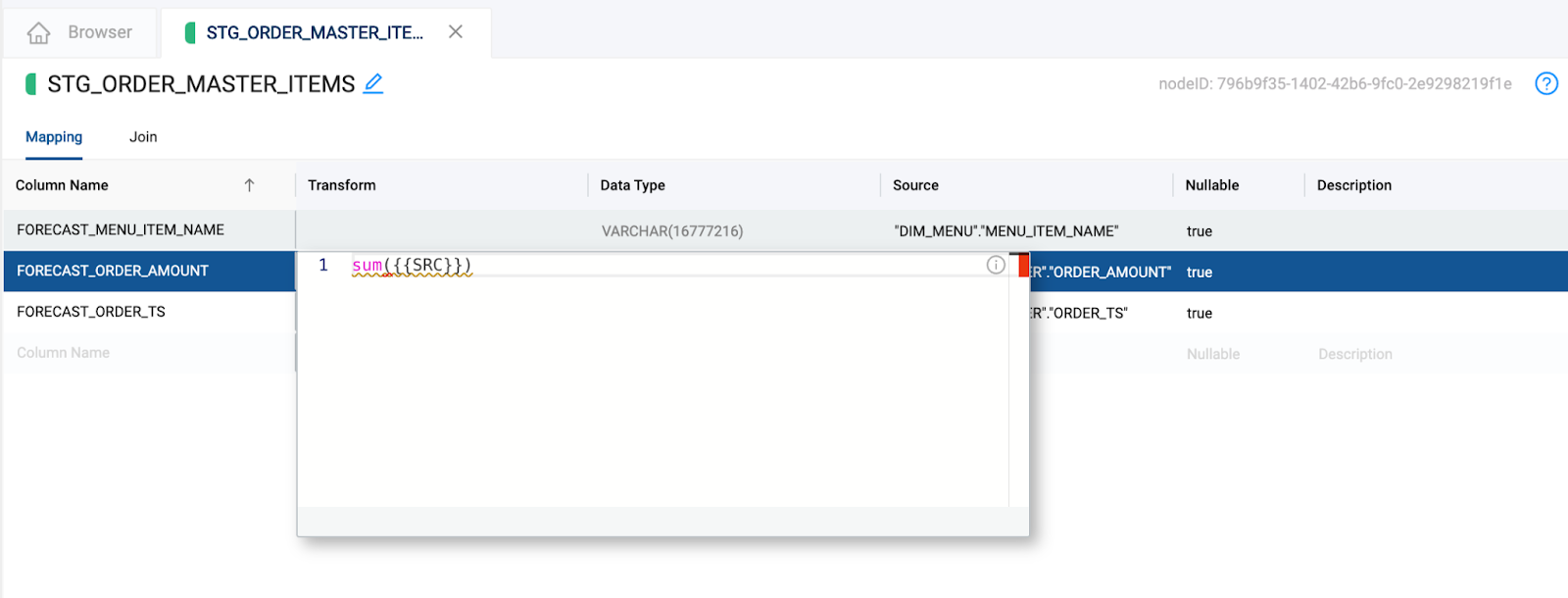

- Next, we need to aggregate our sales at the day level. We can use a sum function for this. You can use the transformation here to sum the

ORDER_AMOUNTcolumn.

sum({{SRC}})

- We will leave the

MENU_ITEM_NAMEcolumn as it is. However, because we have included an aggregate function, we will need to use aGROUP BYin the node. Navigate to theJOINtab so we can finish preparing this node.

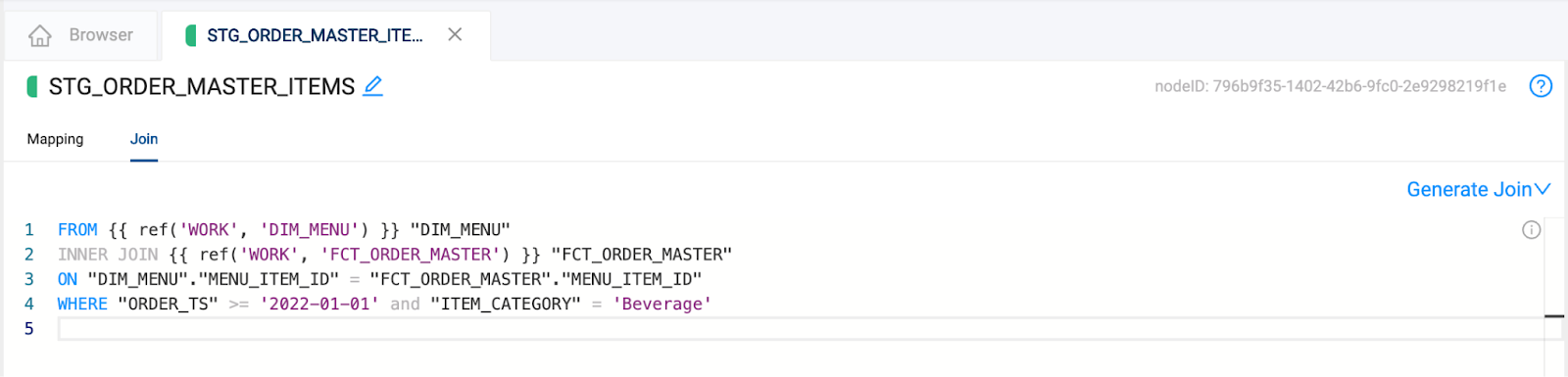

- The first thing we will do is add a filter to this node, as the dataset we are working with is quite large and has dozens of item names to predict on. We will filter the dataset to allow our ML Forecast node to run faster.

To do this, within the editor, hit the enter key and add a new line to the editor. Then, type WHERE to add a filter clause.

For the conditions in our filter, we will first filter where the ORDER_TS column is greater than or equal to 2022-01-01. We will also add a filter where the ITEM_CATEGORY is equal to Beverage. You can copy and paste the code for this filter below:

WHERE "ORDER_TS" >= '2022-01-01' and "ITEM_CATEGORY" = 'Beverage'

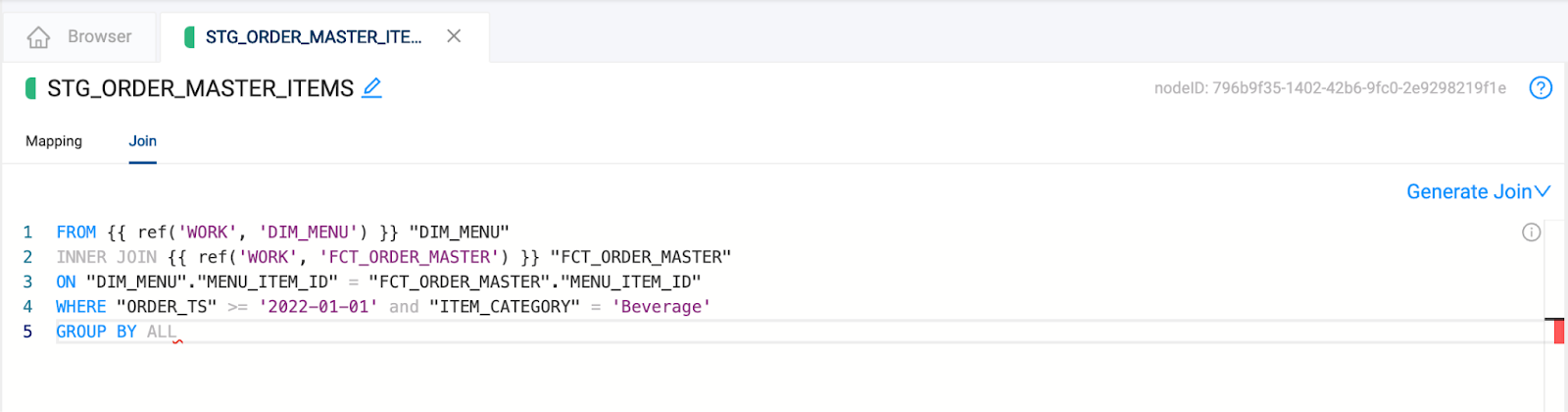

- Staying in the Join tab, we will add a

GROUP BYstatement. Within the editor, add a new line by hitting the enter key. Next, typeGROUP BYwithin the editor, followed by the two non-aggregated columns,MENU_ITEM_NAMEandORDER_TS. You can copy the code for this below:

GROUP BY ALL

- Create and Run the node to populate it with data for the next section of the lab guide.

As the final step of building out this data pipeline for operationalizing the ML Forecast Node, we will add in the ML Forecast Node to the pipeline.

- Navigate back to the Browser to see your data pipeline. Select the

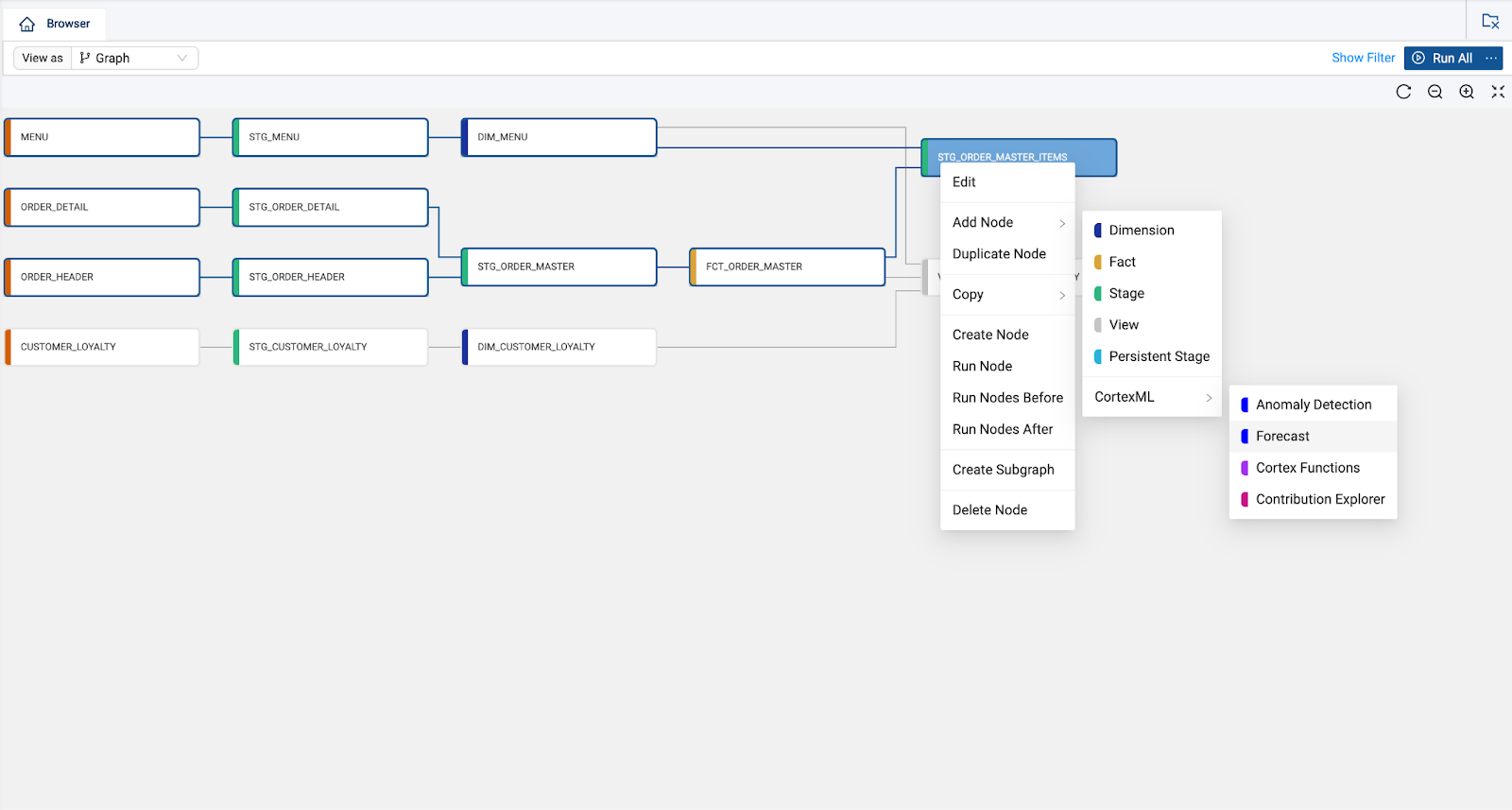

STG_ORDER_MASTER_ITEMSnode that we have been working with and right click on it. Select Add Node -> CortexML -> Forecast.

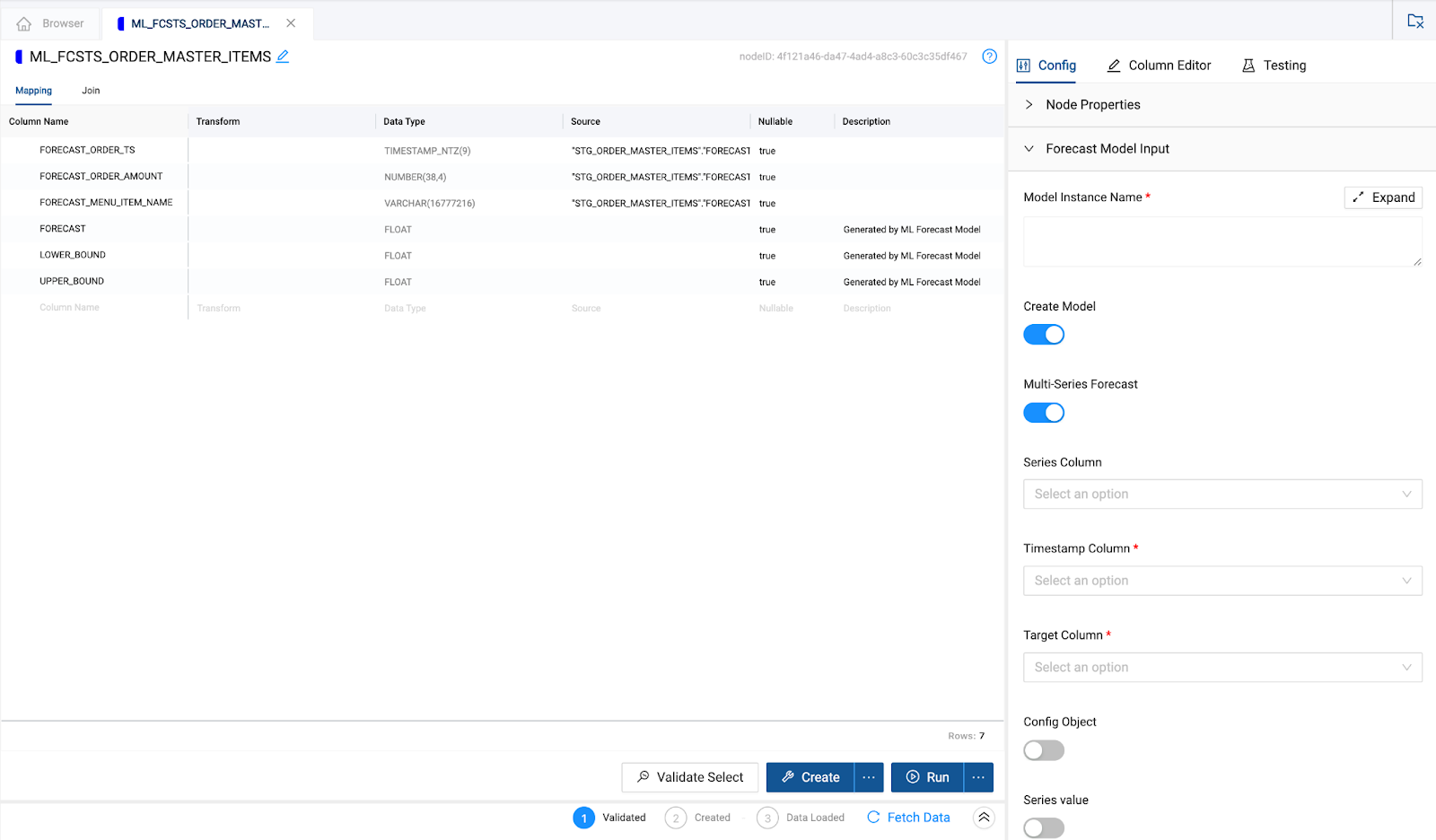

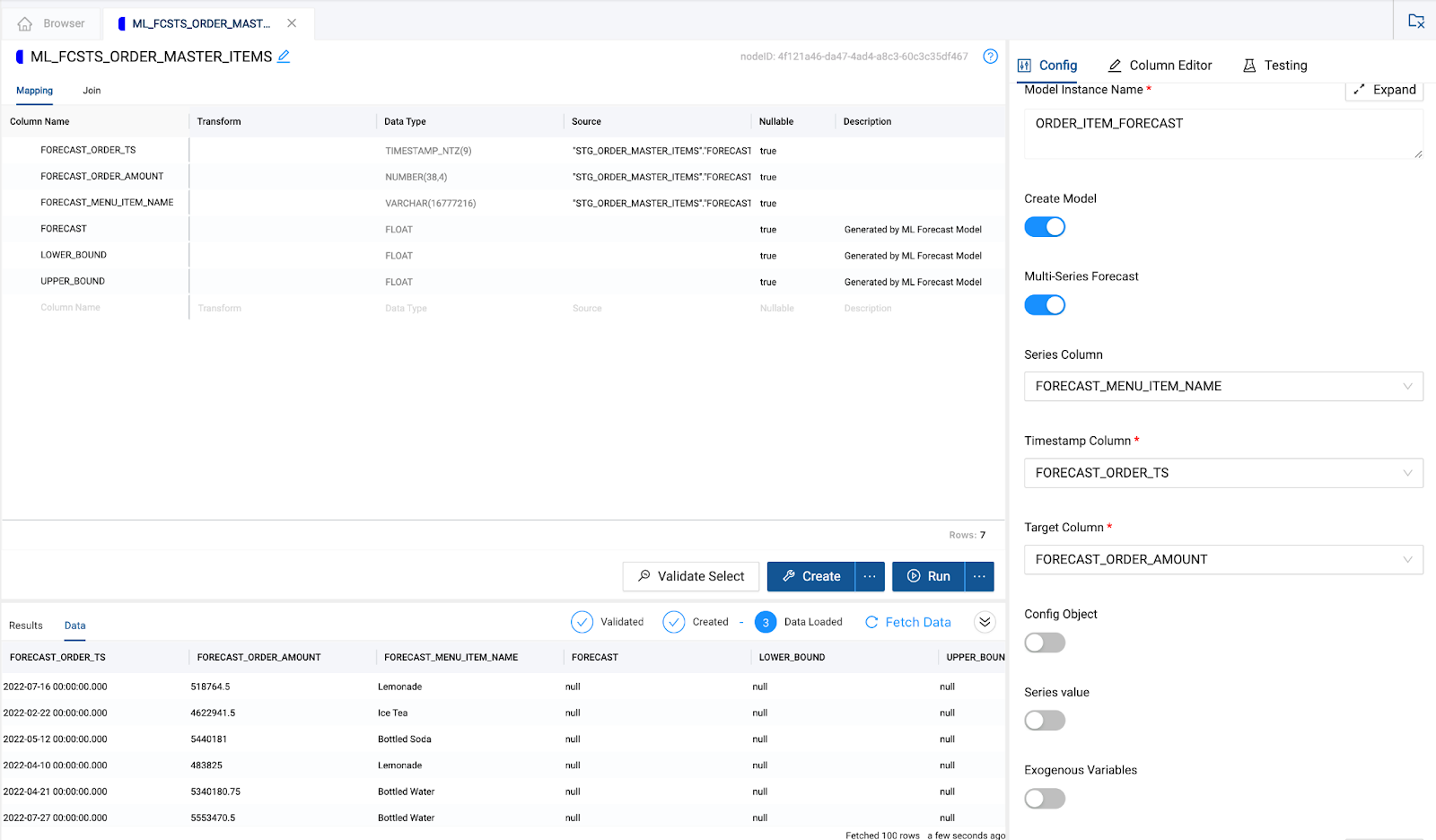

- Within the node, there will be three additional columns that have been added,

FORECAST,LOWER_BOUND, andUPPER_BOUND. These are generated automatically by the ML Forecast node and will be used to forecast the values of the configured node.

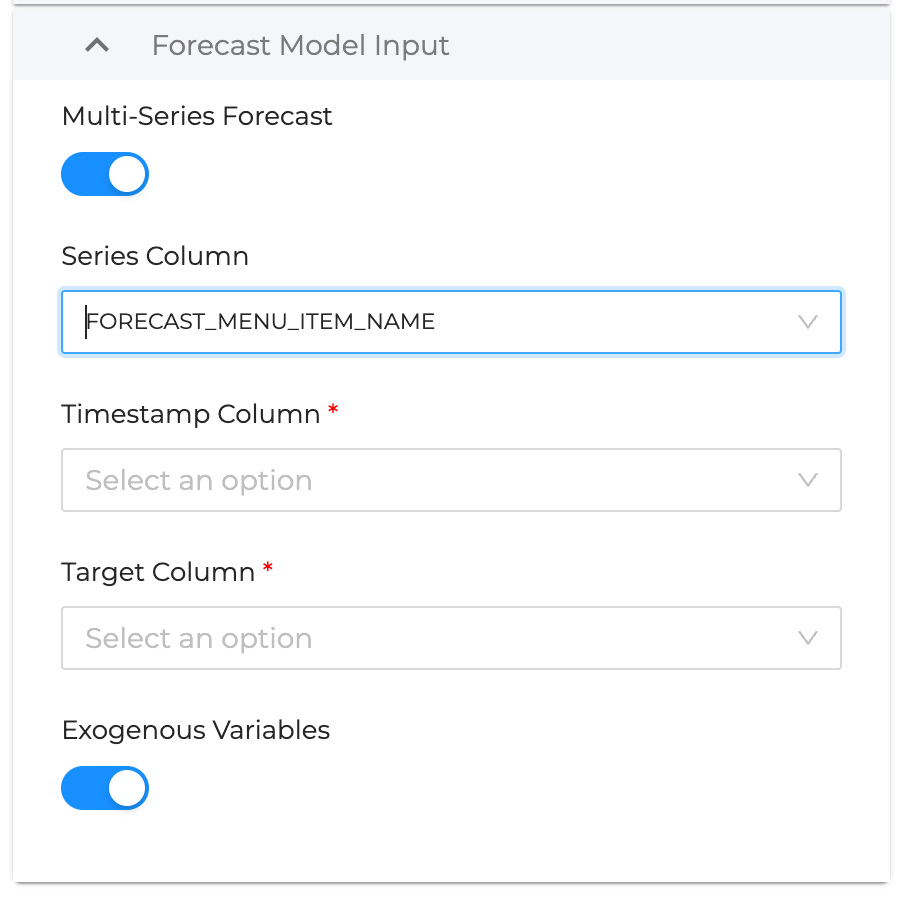

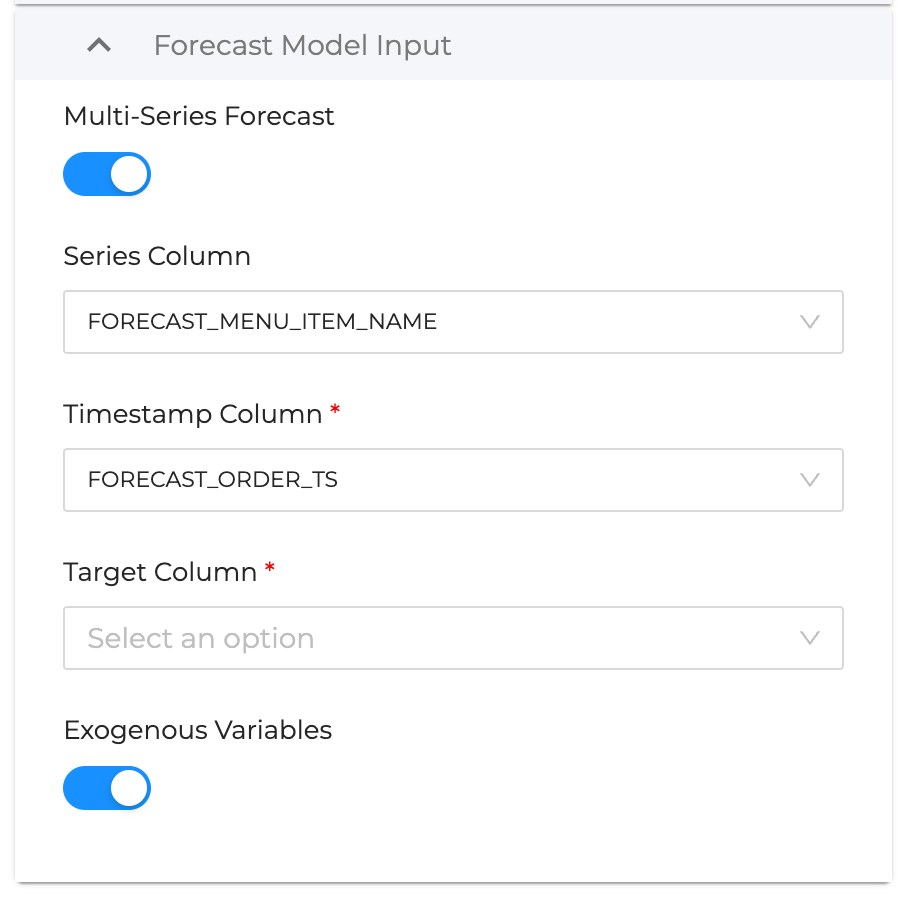

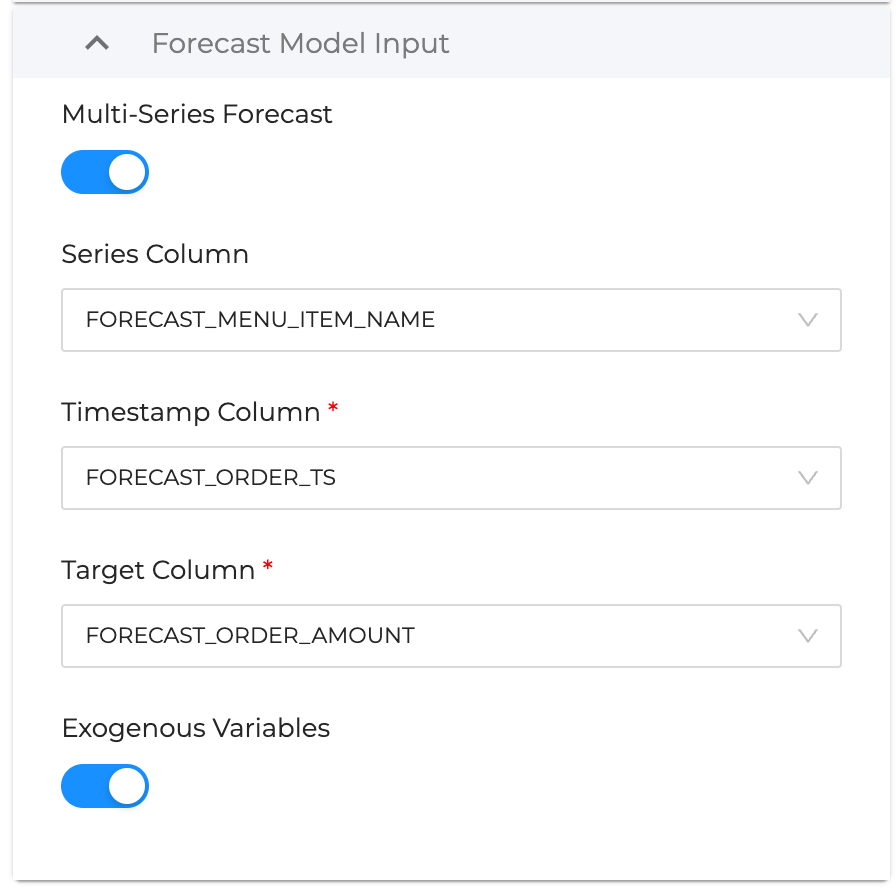

Within the config section, select the Forecast Model Input dropdown. This is where we will configure the node to forecast the values we want to predict. Since there are multiple menu items that we will be predicting order amounts for, we will leave the Multi-Series Forecast toggle to on.

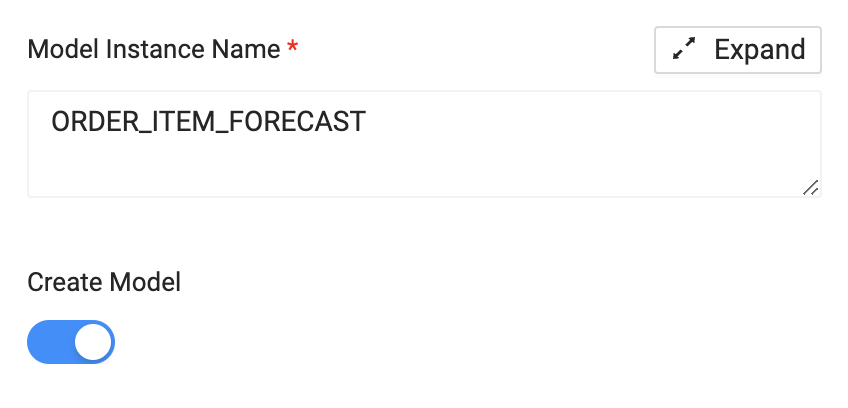

- For the Model Instance Name, you can name the model any meaningful name you want, but for the sake of this lab, we will call this model

ORDER_ITEM_FORECAST.

- For the Series Column input, we will use the

FORECAST_MENU_ITEM_NAMEcolumn. This Series Column is the column that is used as the series for predicting values for.

- For the Timestamp Column, we will use the

FORECAST_ORDER_TScolumn.

- For the Target Column, we will use the

FORECAST_ORDER_AMOUNTcolumn. The Target Column is the column that contains the values we want to predict.

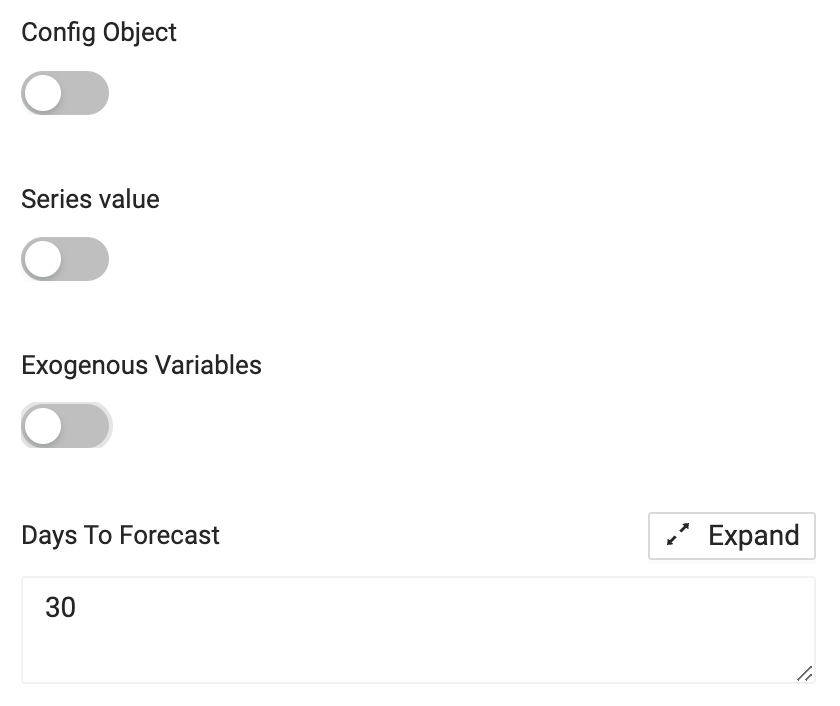

- In this node type, the exogenous variables toggle is turned on. Exogenous Variables are additional features or columns that the model can use to help refine and predict the output for the model. Our node doesn't contain any additional columns that are not being used by the required parameters, so we will toggle this off.

We are presented with a Days to Forecast input that defaults to 30 days. We can update this value to any value of our choosing, but for the sake of this lab guide, will leave the value at 30.

- You have now successfully configured the ML Forecast node. Create and Run the node to populate it within Snowflake.

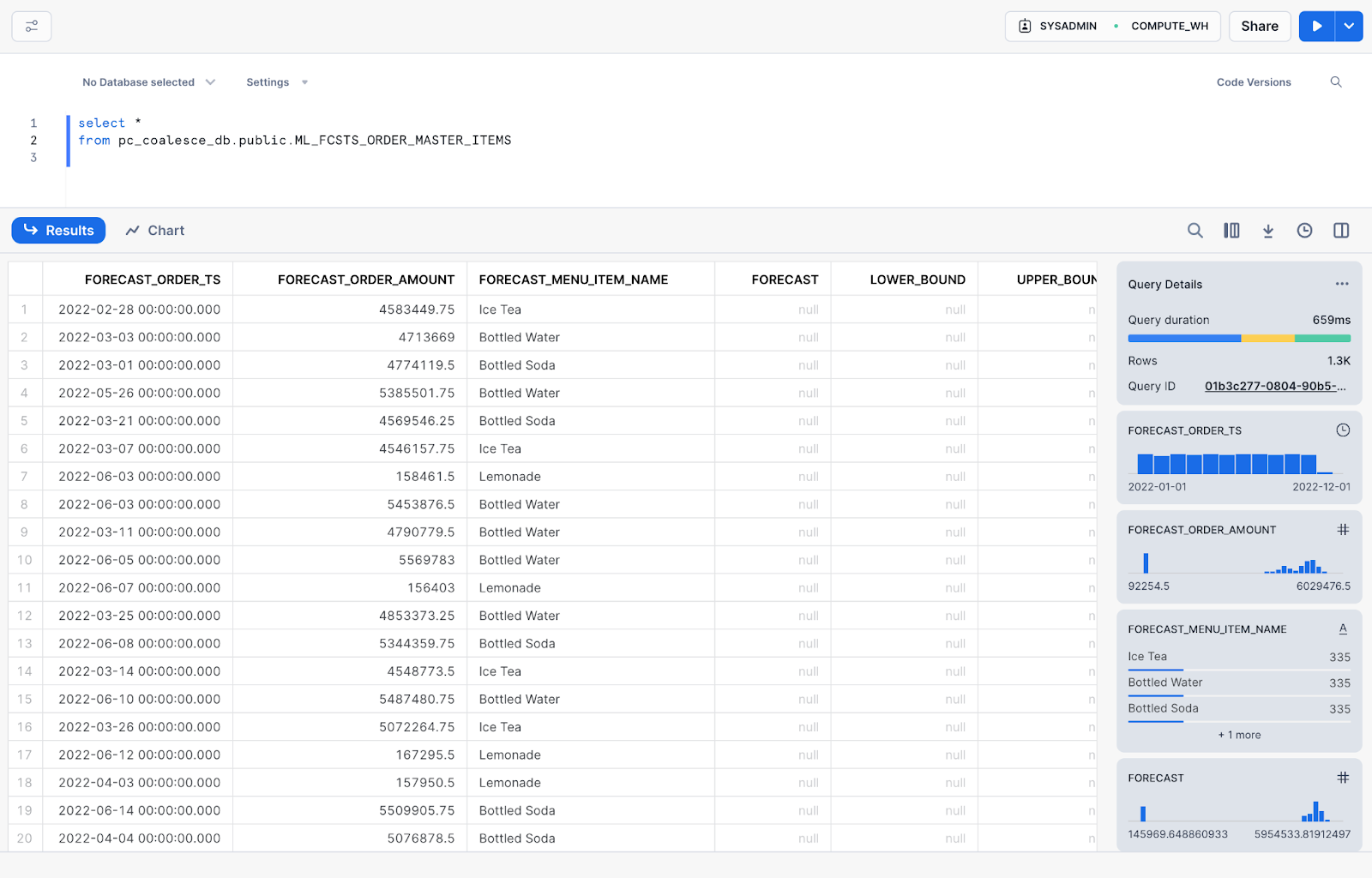

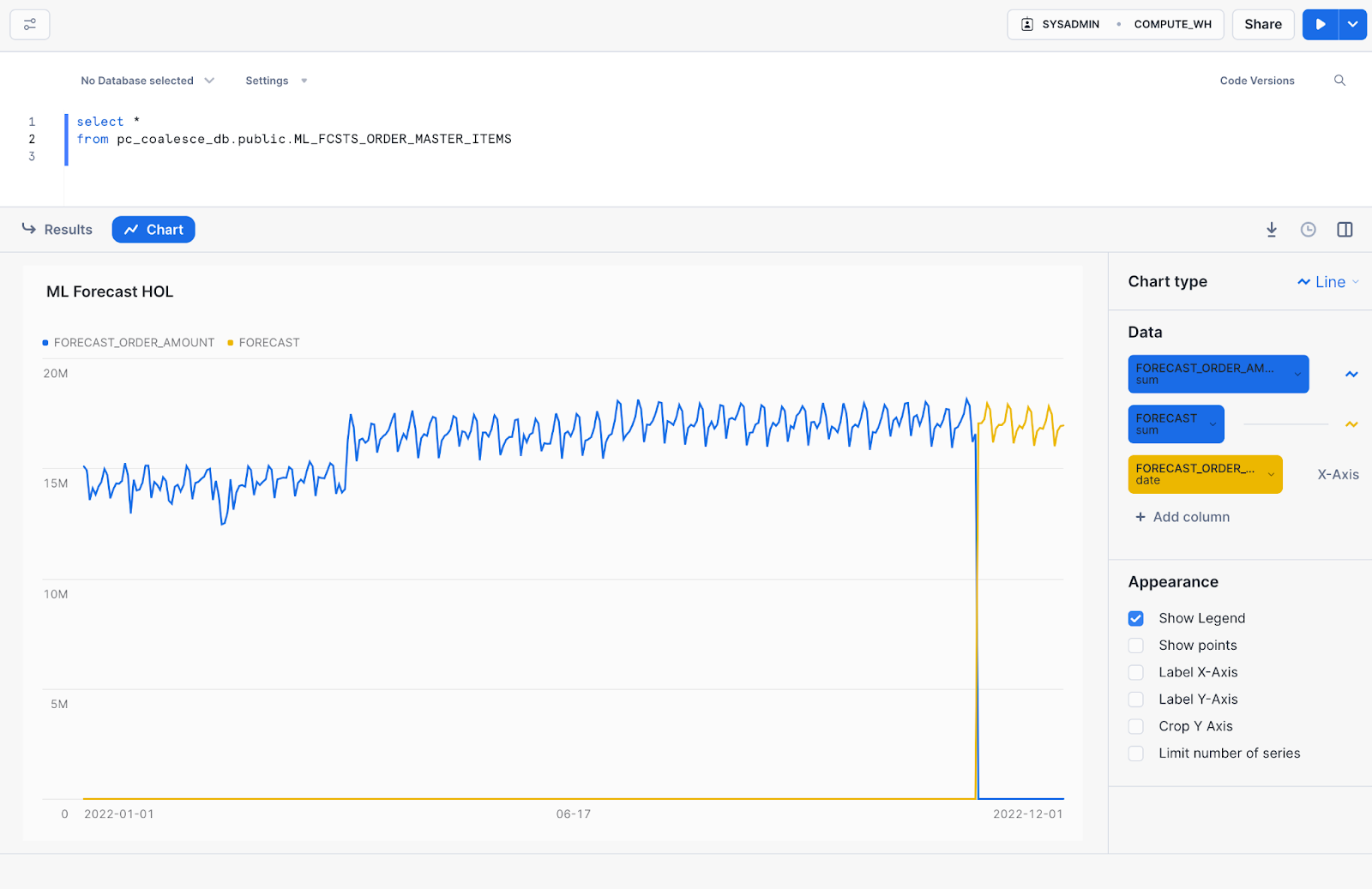

Now that we have configured, created, and run the ML Forecast node within our Snowflake account, we can navigate to Snowflake to see how our forecasted values show up.

- Your node should be created within the

PC_COALESCE_DB.PUBLICdatabase and schema, and is likely calledML_FCTS_ORDER_MASTER_ITEMS, unless you manually changed the name during the last section.

Within Snowflake, run a select statement that queries all of the data within the table. You can copy and paste the code below, assuming you are using the default table name for your ML Forecast node:

select *

from pc_coalesce_db.public.ml_fcsts_order_master_items

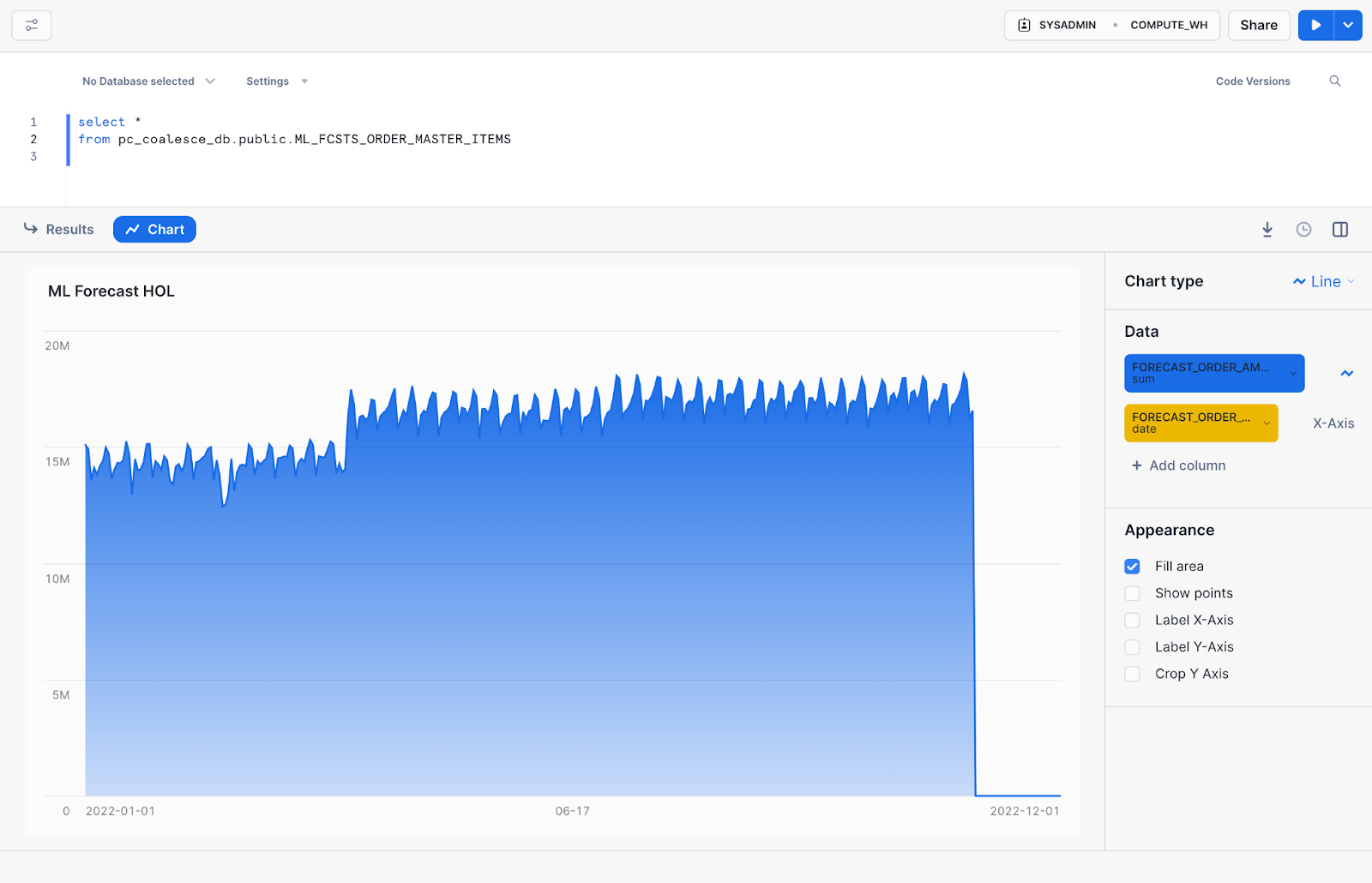

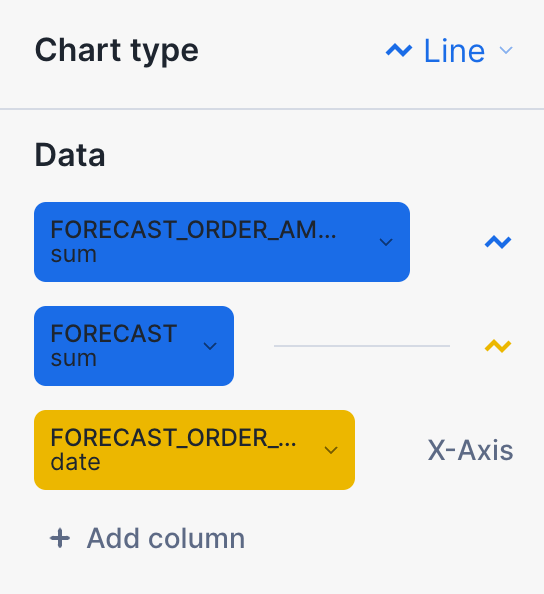

- Next, select the Chart option next to the results of your query.

- If the chart chart type is not a Line, charge the chat to a Line Chart

- The first line (blue line), will denote our historical data that we've already captured and forecasted our values with. Make sure the Blue line has

FORECAST_ORDER_AMOUNTas the column value.

- The X-Axis will be the FORECAST_ORDER_TS column, allowing us to see our order amounts over time

- Finally, select Add column beneath the X-Axis and select FORECAST as the column. This is the column that Coalesce automatically generated to predict the values of our order amounts in the future. This will likely be denoted by a yellow line.

- Now you can see how Coalesce used Snowflake Cortex functions to predict new values of our order amounts for the next 30 days!

Congratulations on completing your lab! You've mastered the basics of Coalesce and are now equipped to venture into our more advanced features. Be sure to reference this exercise if you ever need a refresher.

We encourage you to continue working with Coalesce by using it with your own data and use cases and by using some of the more advanced capabilities not covered in this lab.

What we've covered

- How to navigate the Coalesce interface

- Configure storage locations and mappings

- How to add data sources to your graph

- How to prepare your data for transformations with Stage nodes

- How to join tables

- How to apply transformations to individual and multiple columns at once

- How to configure and understand the ML Forecast node

Continue with your free trial by loading your own sample or production data and exploring more of Coalesce's capabilities with our documentation and resources. For more technical guides on advanced Coalesce and Snowflake features, check out Snowflake Quickstart guides covering Dynamic Tables and Cortex ML functions.

Additional Coalesce Resources

Reach out to our sales team at coalesce.io or by emailing sales@coalesce.io to learn more!